New in ME 1.26!

A new item type for narrative feedback that you want to keep the target from seeing. The Confidential Item can be included on forms with other items that are visible to learners.

The option for administrative users to disable initial task creation email notifications on distributions.

A database setting that forces A&E task expiry to 23:59 (configured per distribution type) (assessment_tasks_expiry_end_of_day)

Three database settings that allow a developer to configure how tasks sort on a user's A&E tabs, e.g., by event date, then delivery date, then expiration date instead of just by delivery date (assessment_sort_assessor_pending, assessment_sort_assessor_completed, assessment_sort_target_completed)

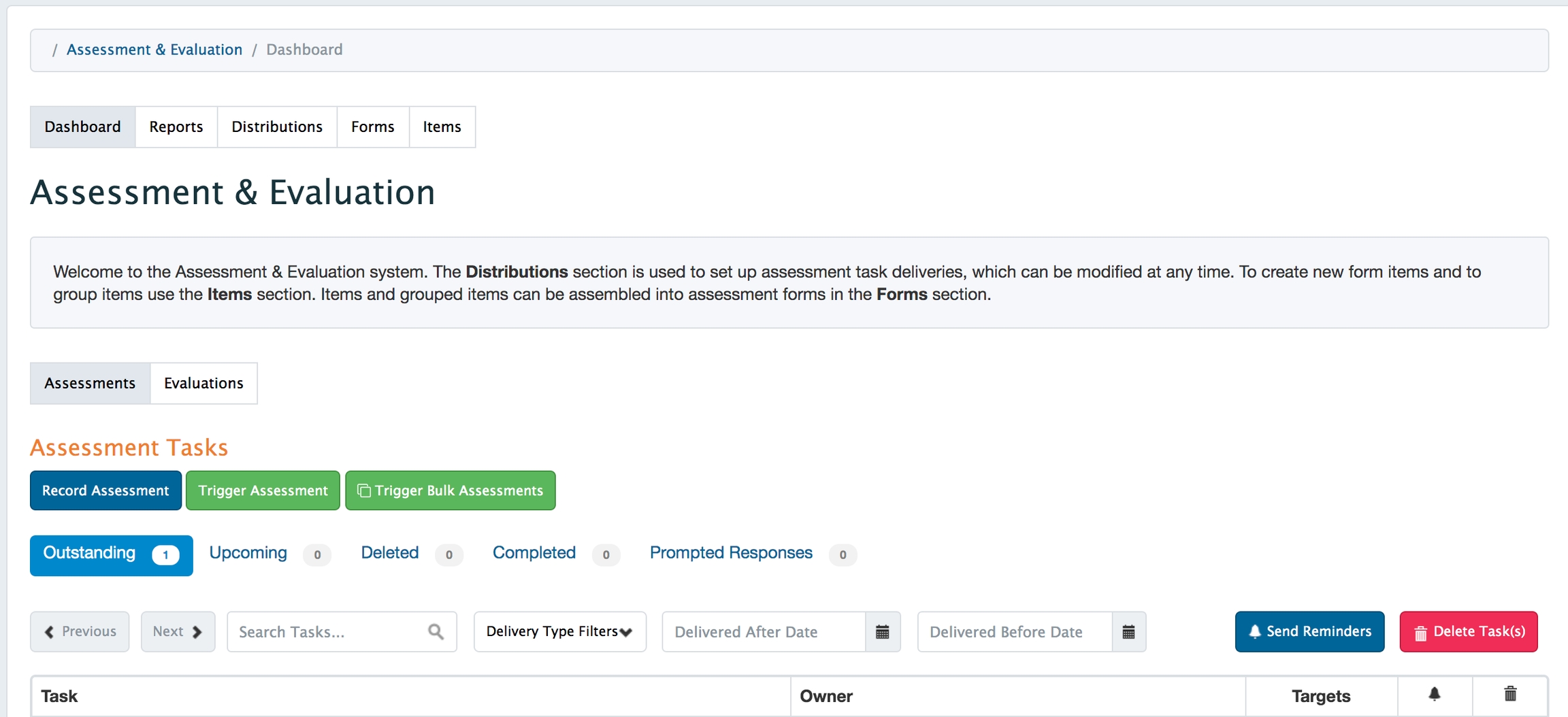

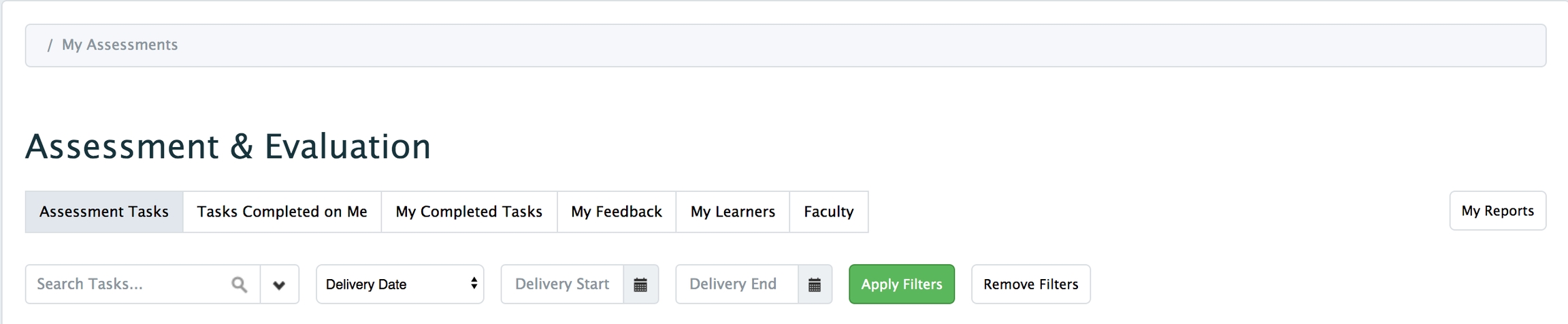

There are multiple tools available to facilitate assessment and evaluation through Elentra. See here for an overview of the multiple tools available and determine which is most appropriate for your needs. The Assessment & Evaluation module can be used to:

Create items and forms to assess learner performance on tasks (e.g., clinical skills and workplace based assessments)

Create items and forms to have learners evaluate courses, faculty, themselves, and other activities

Create items and forms to assess learner performance on gradebook assessments

Note that to allow inline faculty grading using a rubric form or similar, you build a form in Assessment & Evaluation, then attach it to a Gradebook Assessment

The Assessment & Evaluation Module in Elentra is predominantly used to assess learner performance in a clinical environment, and provide multiple user groups with the ability to evaluate faculty, courses/programs, and other activities within the context of your organization. Since the Assessment & Evaluation Module essentially allows you to create forms for people to complete you could even use it to do a pre-course survey, or as a way to collect information from a group about their work plans in a collaborative activity.

To use the Assessment & Evaluation Module users must create items (e.g., questions, prompts, etc.), and create forms (a collection of items). Organizations can optionally allow users to initiate specific forms based on a form workflow (e.g. to allow learners to initiate an assessment on themselves by a faculty member), or organizations can create tasks for individuals to complete using a distribution. A distribution defines who will complete a form, when the form will be completed, and who or what the form is about.

Assessment and Evaluation module users also have a quick way to view their assigned tasks and administrators can monitor the completion of tasks assigned to others.

Reporting exists to view and in some cases export the results of various assessments and evaluations.

The Competency-Based Education module also includes a variety of form templates for use. There is no user interface to configure form templates at this point. For instructions specific to Competency-Based Education, please see the CBE tab.

Database Setting

Use

disable_distribution_assessor_notifications

This goes hand in hand with unauthenticated_internal_assessments and enable_distribution_assessor_summary_notifications. When using unauthenticated distribution assessments, you probably want a single summary email with a link to each task rather than an email for each new task. This disables the per-task reminders.

enable_distribution_assessor_summary_notifications

This adjusts the emails for distribution assessments to have a nightly summary of all new tasks, and then a weekly summary of tasks that are still incomplete.

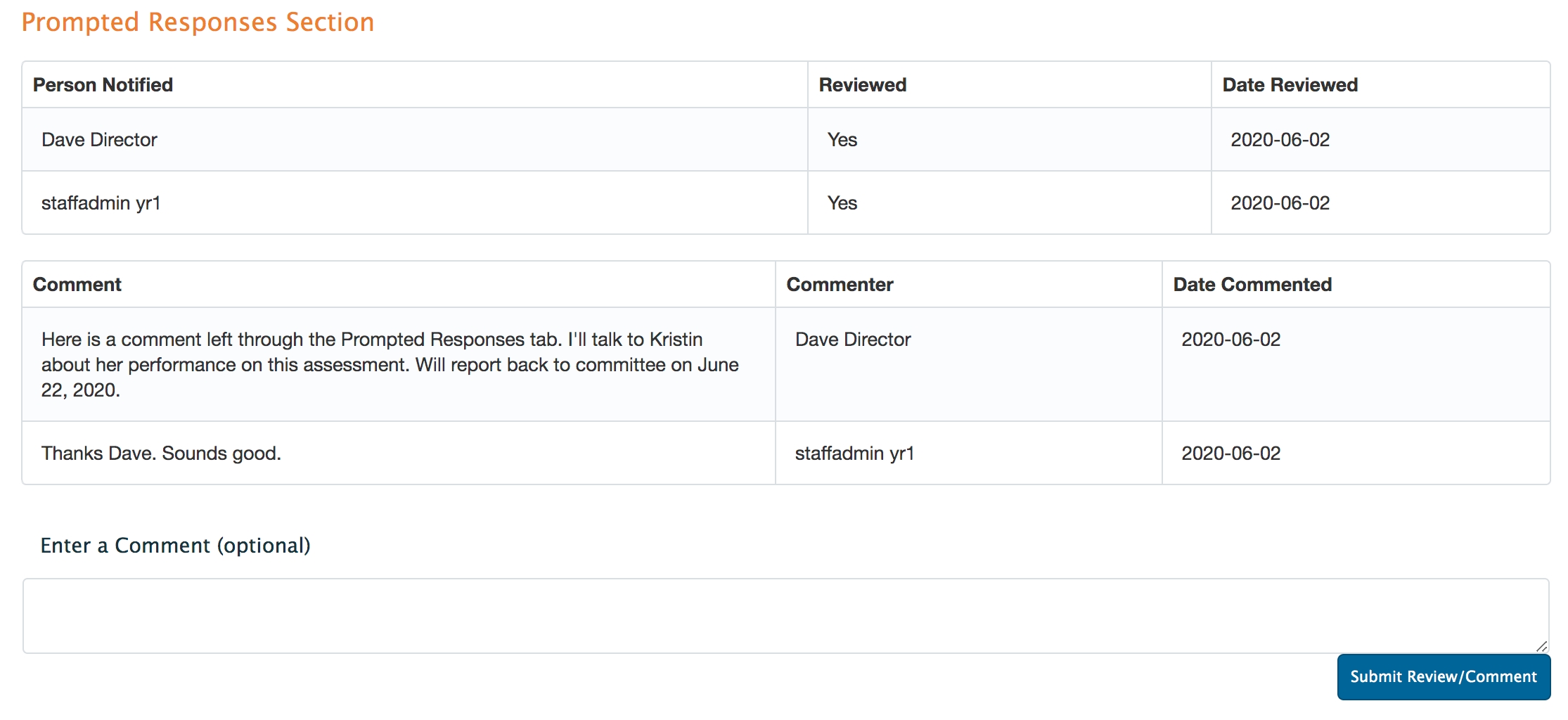

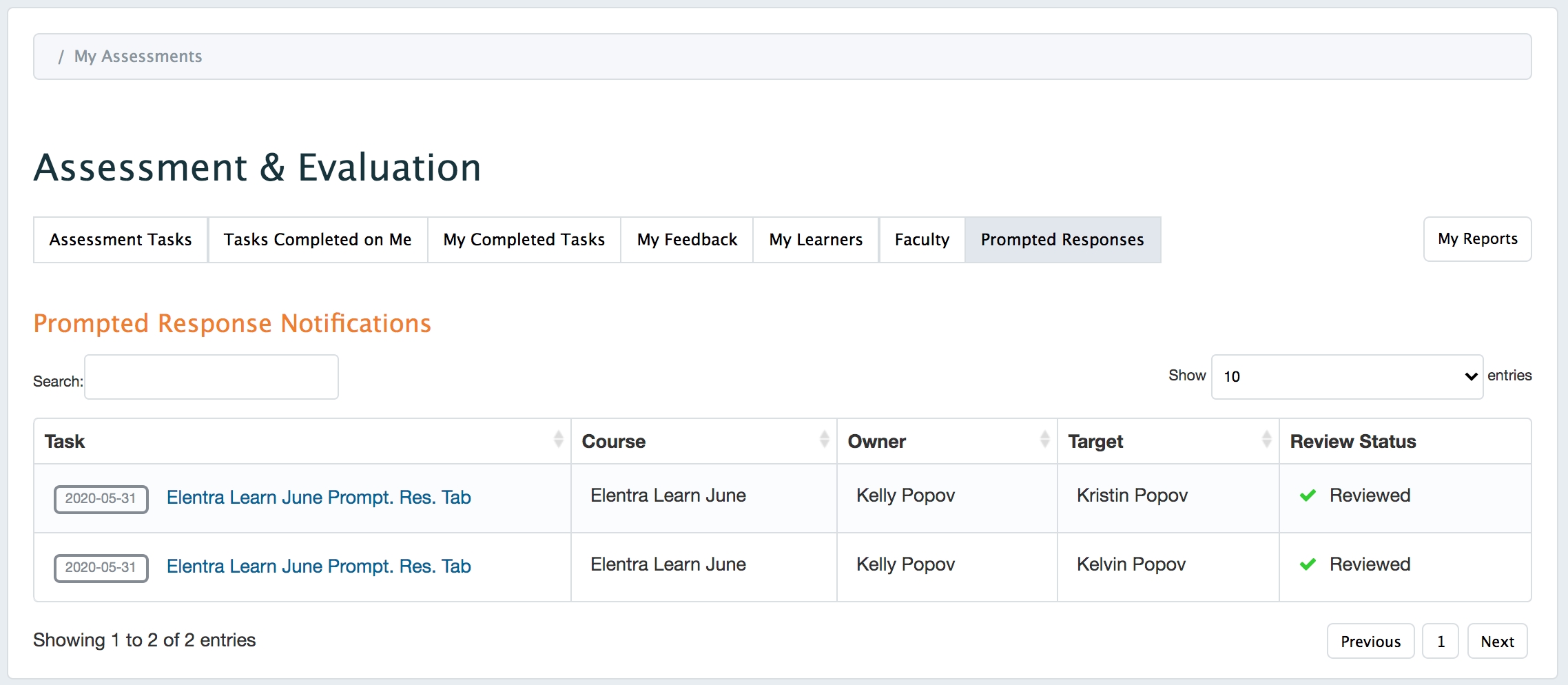

enable_prompted_responses_comments_and_reviews

Controls the visibility of a Prompted Responses tab for staff and faculty users who receive prompted response notifications.

flagging_notifications

include_name_in_flagged_notifications

Defines whether or not to include the names of users in prompted response notifications for Assessment and Evaluation items.

unauthenticated_internal_assessments

Impacts email notifications sent to assessors. If enabled, allows distributed assessments to be completed by internal assessors without logging in, using a unique hash provided via email.

assessment_tasks_show_all_multiphase_assessments

assessment_delegation_auto_submit_single_target

On by default, controls whether or not a page will auto submit when there is a single target in a delegation task

assessment_display_tasks_from_removed_assessors

When enabled, displays assessment tasks from assessors that have been removed from the distribution.

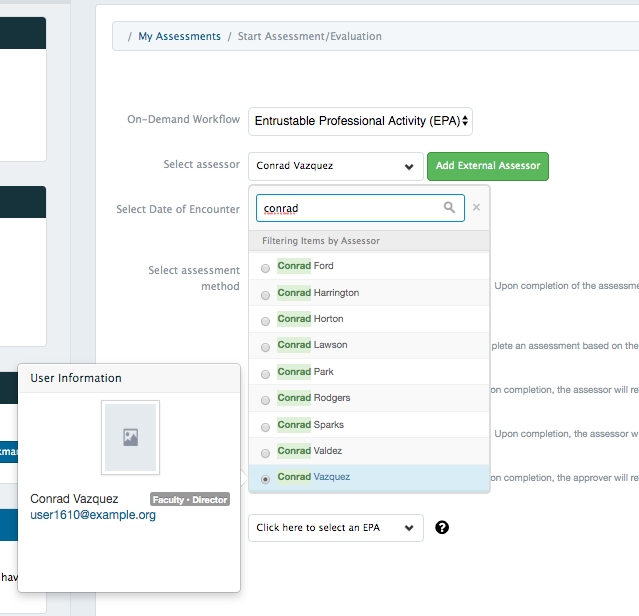

cbme_ondemand_start_assessment

cbme_ondemand_start_assessment_shortcut_button

Optional. If selected, a shortcut icon will be displayed to learners on their CBME dashboard beside the corresponding EPAs.

cbme_ondemand_start_assessment_add_assessor

Optional. If selected, you will be able to add new assessors. By default, these added assessors will be stored in the cbl_external_assessors table. If you want the added assessor to be an internal user, you must also merge ME-1434 to get the complete feature.

cbme_ondemand_start_assessment_director_as_staff_workflow

Toggles whether faculty directors get the staff workflow view. Default is off (0): all faculty get the faculty workflow view.

cbme_ondemand_start_assessment_replace_admin_trigger

Toggles whether the “Trigger Assessment” button on /admin/assessments is switched over to use the workflow view. Default is off (0).

cbme_ondemand_expiry

Enable to apply an expiry date for tasks generated from on demand workflows.

cbme_ondemand_expiry_offset

If cbme_ondemand_expiry is in use, define the period of time after which an on demand task will expire.

cbme_ondemand_expiry_workflow_shortnames

If cbme_ondemand_expiry is in use, define which workflow types it applies to.

cbme_assessment_form_embargo

For organizations with CBME enabled, the option allows you to hide rubric forms until certain conditions are met.

assessment_triggered_by_target

For adhoc distributions only, controls whether or not a target can initiate a form. Enabled by default.

assessment_triggered_by_assessor

For adhoc distributions only, controls whether or not an assessor can initiate a form. Enabled by default.

assessment_method_complete_and_confirm_by_pin

For adhoc distributions only, controls the available form completion method. Enabled by default.

assessment_method_send_blank_form

For adhoc distributions only, controls whether the send blank form completion method is available. Enabled by default.

housing_distributions_enabled

clerkship_housing_department_id

evaluation_data_visible

Can be used to restrict users from opening individual, completed evaluation tasks

show_evaluator_data

Can be used to hide names of evaluators from distribution progress reports, the Admin > A&E Dashboard, and reports

disable_target_viewable_release_override

Can be added to the course_settings table or the settings table to turn off the ability for evaluators to optionally release completed evaluations to a target (the release option can also be restricted through distributions if this setting is not applied).

assessment_tasks_expiry_end_of_day

Use this to store a list of distribution types where you want the task expiry to be 23:59 on the day selected (inputs: ‘date_range,rotation_schedule,delegation,eventtype,adhoc,reciprocal’) (ME 1.26)

assessment_sort_assessor_pending

Optionally configure how tasks display on a user's My Assessment Tasks tab. Available inputs: a JSON-encoded array of objects, e.g., [{"sort_order":"desc","sort_column":"`event_start`"},{"sort_order":"desc","sort_column":"`task_title`"},{"sort_order":"desc","sort_column":"`task_expiry_date`"}] (ME 1.26)

assessment_sort_assessor_completed

Optionally configure how tasks display on a user's My Completed Tasks tab (see above for input details) (ME 1.26)

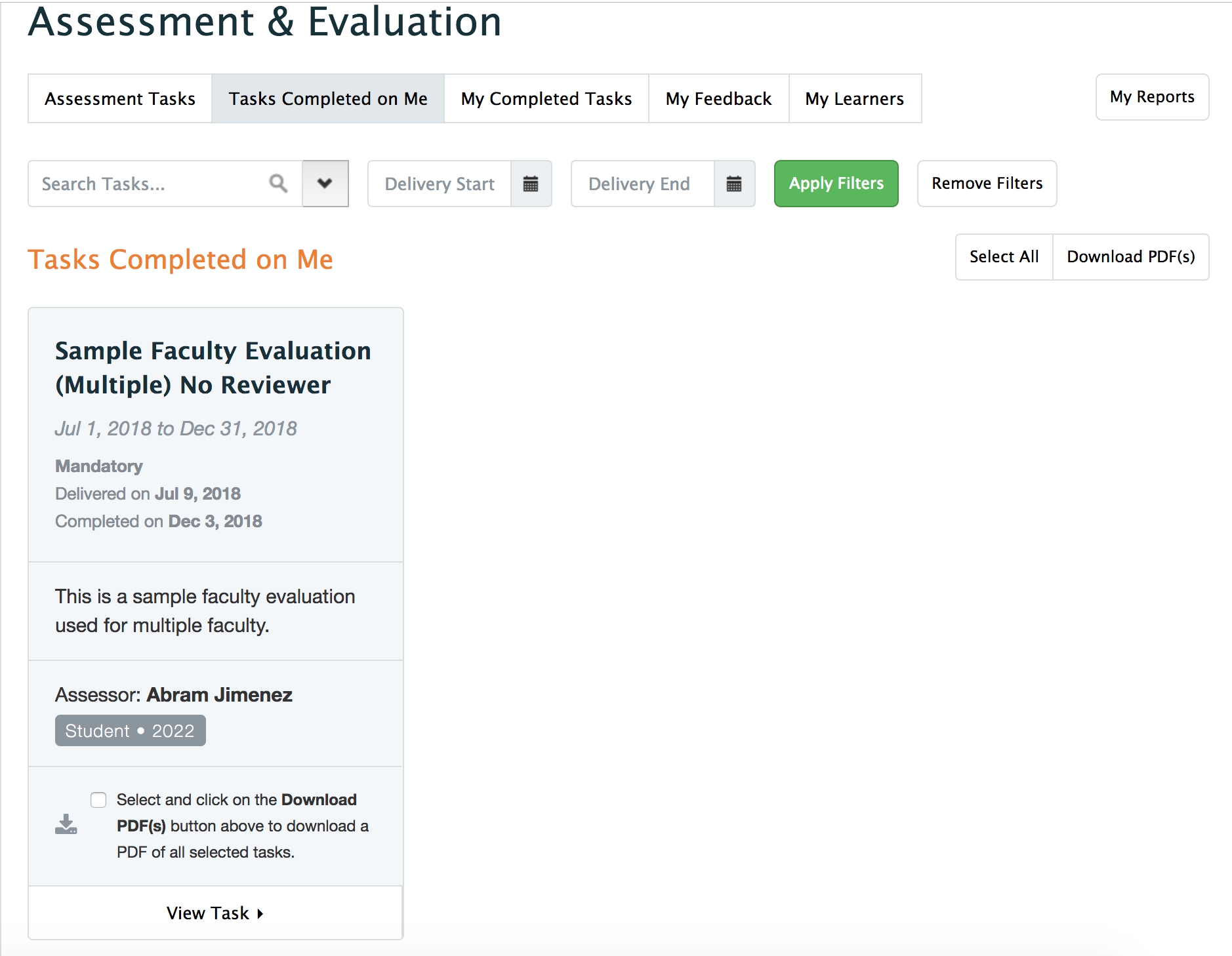

assessment_sort_target_completed

Optionally configure how tasks display on a user's Tasks Completed on Me tab (see above for input details) (ME 1.26)
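As a minimal sketch, the value for one of the three sort settings above could be generated with a short Python script rather than typed by hand, which helps avoid quoting mistakes. The column names come from the example in this documentation. The helper name `build_sort_setting` is hypothetical, accepting "asc" as a sort order is an assumption (only "desc" appears in the example), and how the value is written to the settings table depends on your installation.

```python
import json

# Column names taken from the example in this documentation; the backticks
# are part of the value as shown there. Other column names are not confirmed.
SORT_RULES = [
    ("`event_start`", "desc"),
    ("`task_title`", "desc"),
    ("`task_expiry_date`", "desc"),
]


def build_sort_setting(rules):
    """Return a JSON string: an array of {sort_order, sort_column} objects."""
    for _column, order in rules:
        # "asc" is assumed to be valid; the documentation only shows "desc".
        if order not in ("asc", "desc"):
            raise ValueError(f"unsupported sort order: {order!r}")
    return json.dumps(
        [{"sort_order": order, "sort_column": column} for column, order in rules],
        separators=(",", ":"),
    )


setting_value = build_sort_setting(SORT_RULES)
print(setting_value)
```

The printed string can then be reviewed before a developer stores it as the setting value.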

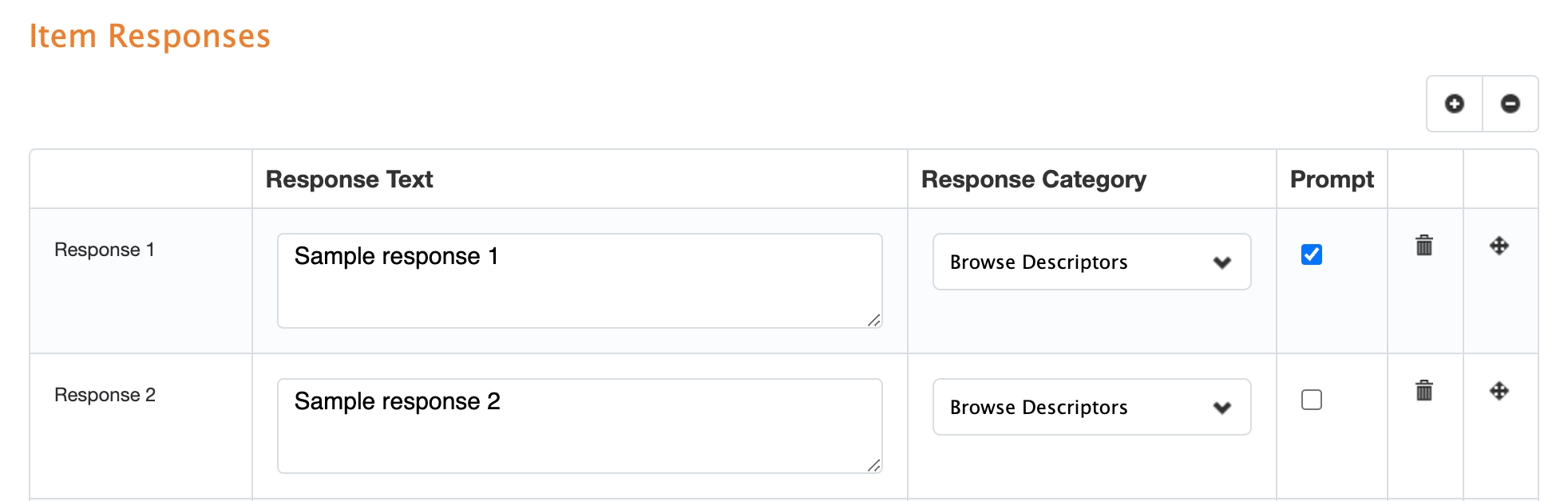

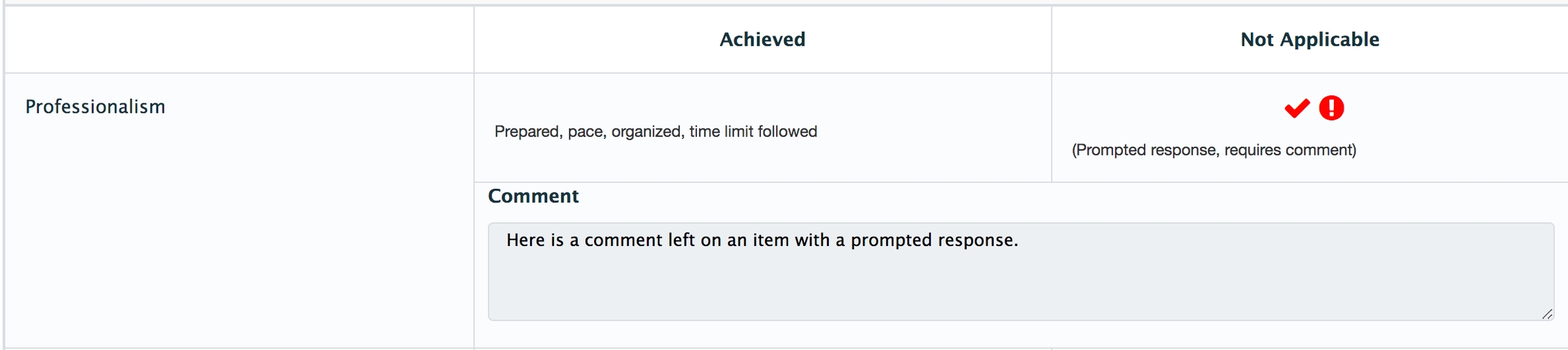
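The intended effect of the assessment_tasks_expiry_end_of_day setting described above can be illustrated with a small sketch. This is not Elentra's implementation: the function name `expiry_datetime` and the midnight fallback for distribution types that are not listed are assumptions made for illustration only.

```python
from datetime import date, datetime, time

# Example setting value using the input format listed in this documentation.
SETTING_VALUE = "date_range,rotation_schedule,adhoc"


def expiry_datetime(expiry_date, distribution_type, setting_value, default_time=time(0, 0)):
    """Return the task expiry as a datetime.

    If the distribution type is listed in the setting, the task expires at
    23:59 on the selected day; otherwise default_time is used (an assumption).
    """
    enabled_types = {t.strip() for t in setting_value.split(",") if t.strip()}
    if distribution_type in enabled_types:
        return datetime.combine(expiry_date, time(23, 59))
    return datetime.combine(expiry_date, default_time)


print(expiry_datetime(date(2024, 3, 1), "adhoc", SETTING_VALUE))
print(expiry_datetime(date(2024, 3, 1), "delegation", SETTING_VALUE))
```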

When creating assessment and evaluation items, users will see the option to check a box designating a response option as a Prompt. This sets a flag on the response option that allows Elentra to send email notifications to users when that response option is selected when someone answers that item on a task. Examples of prompted responses could include unsatisfactory performance, patient safety issues, etc.

Prompted response notifications are available in the following scenarios:

For items used on forms that are delivered through distributed tasks

Administrators define the staff and faculty to notify of a prompted response in the Distribution Wizard, Step 5: Results > Notifications and Reminders

Staff and faculty will receive email notifications and can also view Prompted Response information in Elentra if the Prompted Responses tab is enabled

Administrators define whether to notify learners when they are the target in the Course Setup tab (form must be associated with the course)

Note that if a distribution is configured to have a Reviewer, prompted response email notifications will not be delivered to learners until the Reviewer has completed their review and released a task

When Form Templates are used, they include default items in a Concerns section, and a place for Form Feedback. These items are prompted responses by default, however, learners do not have access to view these items, nor will they be notified if one of these items is selected with them as the target. Instead, faculty directors and program coordinators will be notified of this information via email or the Prompted Responses Tab if enabled.

Automated notifications for prompted responses on on-demand forms are not yet supported in Elentra, except for items in the Concerns and Feedback sections of Form Templates.

A form is a collection of items used to assess a learner, or to evaluate a faculty member, event, rotation, course or anything else in your organisation. Forms can be created for specific courses, or for use across an entire organization. When building a form administrators can optionally indicate a form workflow which allows Elentra users to initiate a form on-demand (e.g. for the purposes of clinical assessment).

This help section is primarily about creating and managing forms outside the context of Elentra's Competency-Based Education tools. See here for information about creating CBE-specific forms.

If you are creating a form to be attached to a gradebook assessment please note that not all item types are supported because there is no structure to weight them on the form posted to the gradebook. Do not use date selector, numeric, or autocomplete (multiple responses) items. When creating a form to use with a gradebook assessment it is recommended that you only use multiple choice, dropdown selector, rubric (grouped item only), and free text items. Please see additional details about form behavior in gradebook in the Gradebook section.
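The guidance above can be expressed as a simple pre-check to run over a planned form before attaching it to a gradebook assessment. This is only a sketch: the item type labels follow the names used in this documentation, the function `check_gradebook_form` is hypothetical, and Elentra itself is not known to expose such a check.

```python
# Item types this documentation recommends for gradebook-attached forms.
RECOMMENDED = {
    "multiple choice",
    "dropdown selector",
    "rubric (grouped item only)",
    "free text",
}
# Item types this documentation says not to use on such forms.
UNSUPPORTED = {"date selector", "numeric", "autocomplete (multiple responses)"}


def check_gradebook_form(item_types):
    """Split a form's item types into unsupported ones and ones the guidance does not cover."""
    normalized = [t.strip().lower() for t in item_types]
    unsupported = [t for t in normalized if t in UNSUPPORTED]
    unknown = [t for t in normalized if t not in UNSUPPORTED and t not in RECOMMENDED]
    return unsupported, unknown


unsupported, unknown = check_gradebook_form(["Multiple Choice", "Numeric", "Free Text"])
print(unsupported, unknown)
```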

Elentra supports several types of forms.

Generic Form

Users can add any items to this form type and it will be immediately available for use.

Standard Rotation Evaluation Form

You will require developer assistance to add this form to your organization.

Only use this form type if you are also using the Clinical Experience rotation scheduler and you use an identical form to evaluate all rotations within a course.

This form can have preset items that appear on the form each time one is created. There is no user interface to build the templated items and a developer needs to do so.

When a new form is made, the templated items (if any exist) will be automatically added to it. Users can then optionally add additional items. After adding items, the form must be published before it can be used.

Forms must be permissioned to a course to be used, and each course can only have one active form at a time. If a new form is created it will overwrite the existing form.

Each course must have its own form permissioned to it. (If forms are identical for all courses, you can create one form, copy it multiple times and adjust the course permissions.)

This form can be automatically distributed based on a rotation schedule (set by administrators when building rotations) OR can be made available to be accessed by learners on demand (use the on-demand workflow for this option).

Standard Faculty Evaluation Form

You will require developer assistance to add this form to your organization.

Only use this form type if you use an identical form to evaluate all faculty within a course.

This form can have preset items that appear on the form each time one is created. There is no user interface to build the templated items and a developer needs to do so.

When a new form is made, the templated items (if any exist) will be automatically added to it. Users can then optionally add additional items. After adding items, the form must be published before it can be used.

Forms must be permissioned to a course to be used, and each course can only have one active form at a time. If a new form is created it will overwrite the existing form.

This form can be made available for learners to initiate on demand using on-demand workflows.

Rubric Forms (available only with CBE enabled)

Rubric forms can be used to assess learners and provide lots of flexibility to administrators to build the form with the items desired.

As long as at least one item on a rubric form is mapped to a curriculum tag, and the corresponding tag set is configured to be triggerable, learners and faculty will be able to access the form on demand.

Results of completed rubric forms are included on a learner's CBE dashboard assuming the mapped tag set is configured to show on the dashboard.

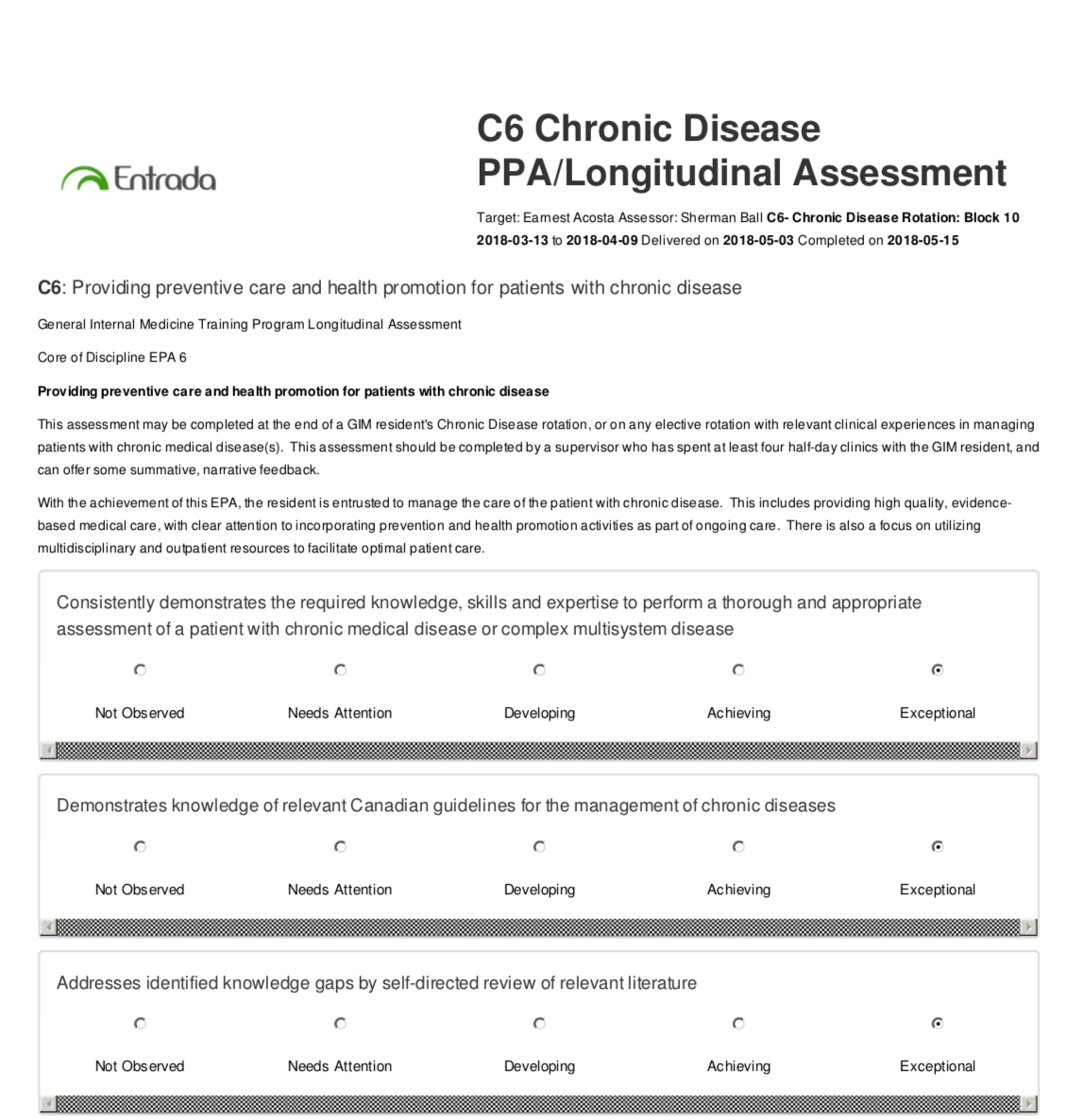

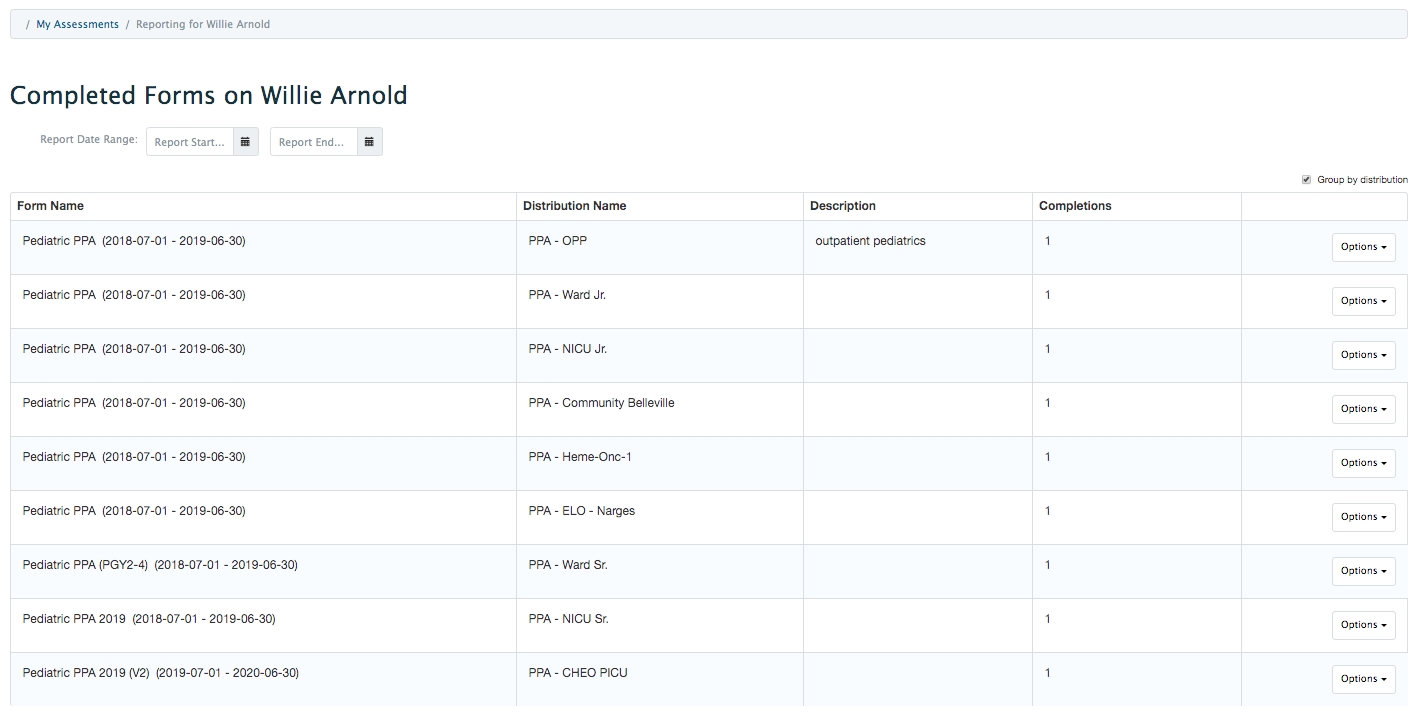

Periodic Performance Assessment Forms (available only with CBE enabled)

A Periodic Performance Assessment (PPA) Form is designed to capture longitudinal, holistic performance trends. It functions very similarly to a rubric form and offers flexibility in terms of the items that can be added to it.

As long as at least one item on a PPA form is mapped to a curriculum tag, and the corresponding tag set is configured to be triggerable, learners and faculty will be able to access the form on demand.

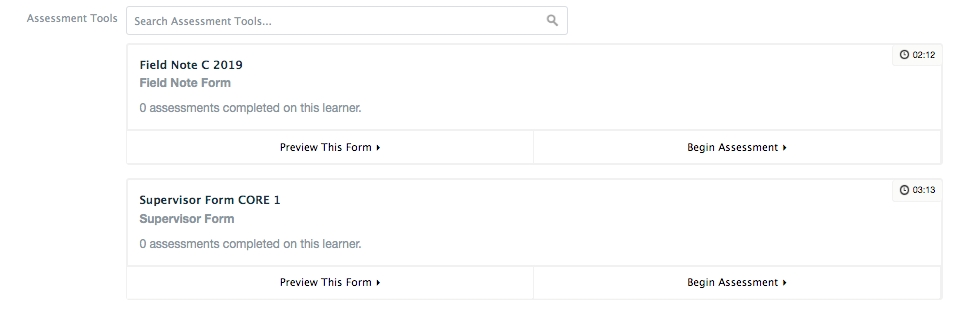

Field Note Form (available only with CBE enabled)

A field note form template is used to give a learner narrative feedback about their performance.

Supervisor Form Template (available only with CBE enabled)

A supervisor form is used to give a learner feedback on a specific curriculum tag (e.g., an EPA) and can be initiated by a learner or supervisor. Once a curriculum tag is selected, the form displays the relevant child tags (e.g., milestones) to be assessed on an automatically generated rubric. A supervisor can indicate a learner’s progress for each curriculum tag that was observed and can provide a global entrustment rating. Comments can be made optional, prompted or mandatory in each section of the form.

Procedure Form Template (available only with CBE enabled)

A procedure form is an assessment tool that can be used to provide feedback on a learner’s completion of a specific procedural skill. Once a procedure is selected, specific criteria will be displayed. A procedure form can be initiated by a learner or faculty.

There are prerequisites to using a procedure form. A course must have uploaded procedures in their Contextual Variables AND have uploaded procedure criteria for each procedure.

Smart Tag Form (available only with CBE enabled)

Smart tag forms are template-based forms which are built and published without curriculum tags attached to them.

Curriculum tags are attached based on the selections made when triggering assessments using a smart tag form.

The assessment and evaluation module provides a way for learners to be assessed, especially in clinical learning environments, and a way for all users to evaluate courses/programs, faculty, learning events, etc. Any forms used for assessment and evaluation require form items (e.g., questions, prompts, etc.).

If you are creating a form to be attached to a gradebook assessment please note that not all item types are supported because there is no structure to weight them on the form posted to the gradebook. When creating a form to use with a gradebook assessment it is recommended that you only use multiple choice, dropdown selector, rubric (grouped item only), and free text items. Do not use date selector, numeric, or autocomplete (multiple responses) items.

Note that you can copy existing items which may save time. To copy an existing item, click on the item and click 'Copy Item' which is beside the Save button.

Navigate to Admin>Assessment & Evaluation.

Click 'Items'.

Click 'Add A New Item'.

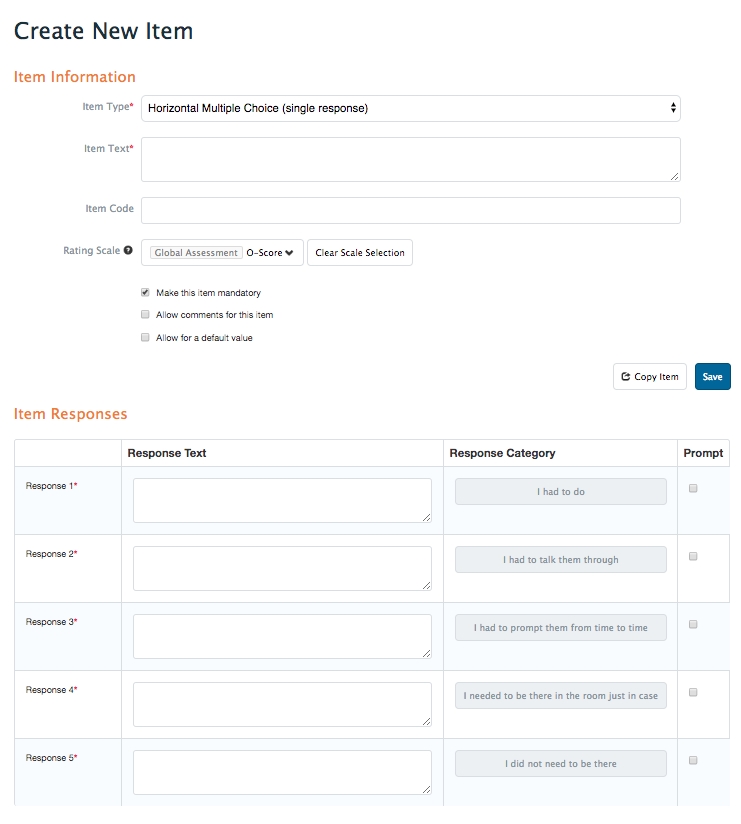

Complete the required information, noting that different item types will require different information. Common item details are below:

Item Type: This list shows the item types supported by Elentra. A complete list of item types is provided below.

Item Text: This is what will show up on a form this item is added to. When you view items in the detail view you'll also see the item text.

Item Code: This is an optional field. Item codes display when you view items in a list and they are searchable. Some organizations apply their own coding system to their items, but another use case might be if you are importing items from another tool or vendor and they have a coding system you want to match.

Rating Scale: Rating scales can be configured through the Scales tab within Assessment & Evaluation. First select a scale type and then select the specific scale. Selecting a rating scale will prepopulate the response categories for this item. In some item types you will also be required to add response text (e.g. multiple choice items) and that text will show up on the actual form. In other item types you may rely on just the response categories.

Mandatory: Click this checkbox if this item should be mandatory on any forms it is added to.

Allow comments: Click this checkbox to enable comments to be added when a user responds to this item. If enabled, you have several options to control commenting.

Comments are optional will allow optional commenting for any response given on this item.

Require comments for any response will require a comment for any response given on this item.

Require comments for a prompted response means that for any response where you check off the box in the Prompt column, a user will be required to comment if they select that response.

Allow for a default value: If you check this box you will be able to select a default response that will prepopulate a response when this item is used on any form. Set a default response by clicking on the appropriate response line in the Default column.

Depending on the question type, add or remove answer response options using the plus and minus icons.

Depending on the question type, reorder the answer response options by clicking on the crossed arrows and dragging the answer response option into the desired order.

Add curriculum tags to this item as needed.

If you have access to multiple courses/programs, first use the course/program selector to choose the appropriate course/program, which will limit the available curriculum tags to those assigned to the course/program. Click the down arrow beside the course selector and search for the course by beginning to type the course name. Click the circle beside the course name.

Click through the hierarchy of tags as needed until you can select the one(s) appropriate for the item.

As you add curriculum tags, what you select will be listed under the Associated Curriculum Tags section.

Scroll back up and click 'Save'.
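The three commenting options described in the steps above can be summarised as a small validation rule. The sketch below is illustrative only and is not Elentra's code; the names `CommentMode`, `ResponseOption`, and `comment_required` are hypothetical.

```python
from dataclasses import dataclass
from enum import Enum


class CommentMode(Enum):
    OPTIONAL = "optional"  # Comments are optional
    REQUIRED = "required"  # Require comments for any response
    PROMPTED = "prompted"  # Require comments for a prompted response


@dataclass
class ResponseOption:
    text: str
    prompt: bool = False  # True when the Prompt box is checked for this response


def comment_required(mode, selected):
    """Return True if the user must enter a comment for the selected response."""
    if mode is CommentMode.REQUIRED:
        return True
    if mode is CommentMode.PROMPTED:
        return selected.prompt
    return False


unsatisfactory = ResponseOption("Unsatisfactory", prompt=True)
satisfactory = ResponseOption("Satisfactory")
print(comment_required(CommentMode.PROMPTED, unsatisfactory))
print(comment_required(CommentMode.PROMPTED, satisfactory))
```

With the prompted mode, only the response flagged as a Prompt forces a comment; the other modes ignore the flag.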

Horizontal Multiple Choice (single response): Answer options will display horizontally on the form and the user can select one answer. Response text required; response category optional. Response descriptors provide another data point so you can potentially report on them in the future. They are metadata in MC questions whereas in a rubric they are displayed. Horizontal MC will let you assign the same response descriptors to multiple responses.

Vertical Multiple Choice (single response): Answer options will display in a vertical list on the form and the user can select one answer. Response text required; response category optional.

Drop Down (single response): answer options will display in a dropdown menu. Response text required; response category optional.

Horizontal Multiple Choice (multiple responses): Answer options will display horizontally on the form and the user can select two or more answers. Response text required; response category optional.

Vertical Multiple Choice (multiple responses): Answer options will display in a vertical list on the form and the user can select two or more answers. Response text required; response category optional.

Drop Down (multiple responses): Answer options will display in a dropdown list that remains open and allows users to select multiple responses using the control or command and enter/return keys.

Free Text Comments: Use this item type to ask an open-ended question requiring a written response. (In ME 1.11 and lower you cannot map a free text comment to a curriculum tag set.)

Date Selector: Use this item type to ask a question to which the response is a specific date (e.g. What was the date of this encounter?)

Numeric Field: Use this item type to ask a question to which the response is a numeric value (e.g. How tall are you?)

Rubric Attribute (single response): Use this to create an item that relies on response categories as answer options. If you enter text in the response text area it will not show up to the user unless you create a grouped item. If you create a grouped item remember you need to use the same scale across all items to be grouped together. If you want a rubric item to display response text, create a grouped item with just one item included.

Scale Item (single response): Use this to create an item that relies on response categories as answer options. If you enter text in the response text area it will not show up to the user unless you create a grouped item. If you create a grouped item remember you need to use the same scale across all items to be grouped together.

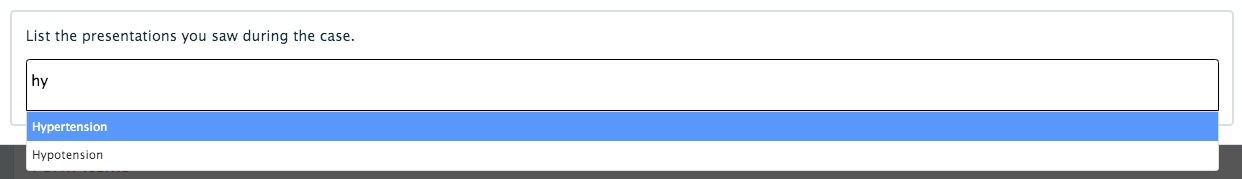

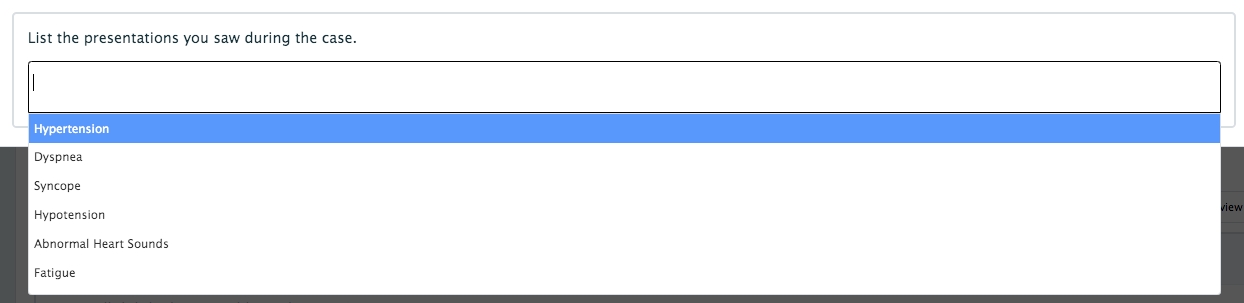

Autocomplete (multiple responses): Use this item to allow users to search the option list in an autocomplete fashion and then make multiple selections. The response options will display in the order they were added to the item and when a user begins to type a response, the list of options will be filtered. The user can select more than one response as needed.

Field Note: This item type was developed specifically for post-graduate family medicine prior to the current work on the dynamic competency-based education tools.

To begin you select a curriculum tag set.

For a tag you want to be available to be assessed on a form, you add 4 response descriptors (e.g., Excellent, Needs Improvement) and corresponding level of competency descriptions.

If you have a hierarchical curriculum tag set you can develop items for the bottom/most granular tags in the set.

When you create an assessment form, you can add the relevant curriculum tag set to the form. When individuals use the form they can navigate through the tag set and select the appropriate tag to assess.

Rubric Numeric Validator

This item helps support validated numeric grouped items. You do not need to create any specific items using this item type; rubric numeric validator items can be configured using a checkbox on the grouped item screen (more detail below).

Dynamic Item Placeholder

Do not use this item type when building your own forms. It is a placeholder for form items that are generated and replace this one when forms are published. This type does not render as anything and carries no other information.

Confidential Free Text Comment

Introduced in Elentra ME 1.26, this item type is designed to collect narrative comments that will not be visible to the target of an assessment/evaluation even if the form or a self-report is released to the target for viewing. Please note that in some reports, the target will see the item prompt, but will not be able to see the item response. In other words, the target will know that information was collected, just not what the specific response was.

Confidential item responses will display in some Assessment & Evaluation Administrator reports (e.g. Form Responses Report, Assessment Data Extract)

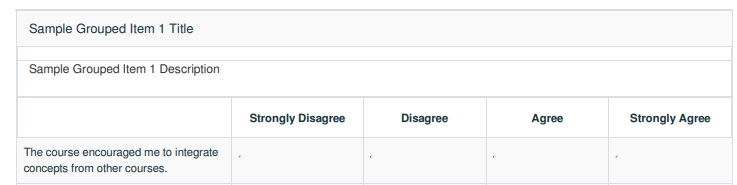

Creating a grouped item allows you to group items and guarantee that they appear together on forms. If you use the rubric attribute or scale item item types, creating a grouped item will create a rubric with common response categories (e.g. developing, achieved) and specific response text for each field (e.g. performed a physical exam with 1-2 prompts from supervisor, independently performed a physical exam). There is also the ability to Copy a Grouped Item which is next to the Create & Attach a New Item and Attach Existing Item(s) buttons. You can choose to create a new item linkage to keep all items as grouped or to create new individual items from the original grouped item.

Navigate to Admin>Assessment & Evaluation.

Click on the Items tab.

Click on the Grouped Items sub-tab.

Click 'Add A New Grouped Item'.

Provide a grouped item name and select a rating scale type and then a rating scale. All items in the group will have the same response categories assigned to them, as configured through the rating scale. (Rating scales can be set up through the Scales tab in Admin>Assessment and Evaluation.)

Click 'Add Grouped Item'.

Complete the required information, noting the following:

Title: This will display when you view a list of grouped items.

Description: This field is optional; note that the grouped item description will display below the grouped item title on forms produced for users (see image below).

Grouped Item Code: Optional.

Validate numeric items: Check this box if you plan to use multiple numeric items that add up to a total you want to validate. (See more detail below.)

Rating Scale: This may already be set based on creating the grouped question.

Permissions: Adding a group, course, or individual here will give those users access to the grouped item.

To add items to a grouped item you can either create and attach a new item or add existing items (click the appropriate button).

If attaching existing items, use the search bar and filters to find items. You will only be shown items that match the rating scale parameters you've selected. Click the checkbox beside a question (in list view) or beside the pencil icon (in detail view) and click 'Attach Selected'. Because an existing item may already be in use on another form, in some cases you will not be able to modify the response descriptors for that item.

If creating and attaching items, follow the instructions above for creating items. The rating scale for your new items will be set to match the rating scale of the grouped item. After creating one item, you can repeat the steps to create and attach as many items as needed.

Click 'Save'.

To edit an item click on the pencil icon. Bear in mind that an existing item may already be in use on another form.

To delete an item from a grouped item, click on the trashcan icon.

To reorder the items in the grouped item, click on the crossed arrows and drag the item into the appropriate location.

When you have added all required items to the grouped item, click 'Save'.

Click 'Grouped Items' at the top of the screen to return to the list of grouped items.

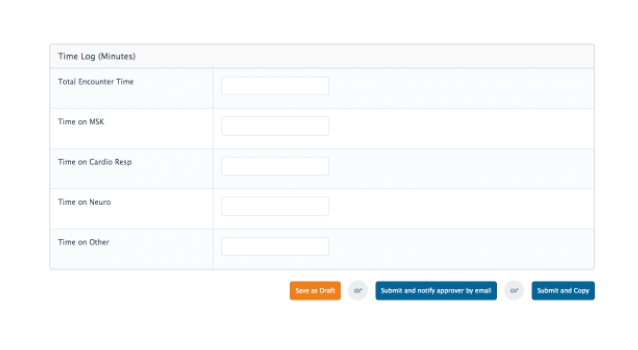

If you want to include a grouped item on a form that lets the form user distribute points, minutes, or another quantity across different items, with a sum that doesn't exceed a total value (set by an administrator or by the user), check the Validate numeric items box.

In the example below the user enters their total encounter time (e.g., 180 minutes) and then divides those minutes between the different categories (e.g., 60 MSK, 30 Cardio Resp, 90 Neuro). The item is validated meaning that it won't let the user enter numbers for each item that don't total the first value entered.

When creating a grouped item, check off the validate numeric items box.

Numeric validator title: Give the validator (i.e., the total value Elentra will check items against) a title. This will display as the first item in the grouped item.

Numeric validation type:

I will define the total: This lets the item creator define the total for every time this item is used.

Numeric total: Enter the total to be used with this item.

The user completing the form will define the total: This will allow the user to create their own total each time they use this item.

Add items to the grouped item. Note, the items you add should be numeric items.

Use the item on a form as you normally would. Remember you may need to provide instructions to your users on how to complete the item.
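The check performed by a validated numeric grouped item can be sketched as follows. This follows the worked example above, in which the item values must add up to exactly the total; if your installation only requires that the sum not exceed the total, the comparison would differ. The function name `validate_numeric_group` is hypothetical and this is not Elentra's own code.

```python
def validate_numeric_group(total, item_values):
    """Return (is_valid, difference) for a validated numeric grouped item.

    total is the validator value (defined by the item creator or entered by
    the user); item_values maps each numeric item to the amount entered.
    """
    entered = sum(item_values.values())
    return entered == total, total - entered


ok, remaining = validate_numeric_group(180, {"MSK": 60, "Cardio Resp": 30, "Neuro": 90})
print(ok, remaining)

ok2, remaining2 = validate_numeric_group(180, {"MSK": 60, "Cardio Resp": 30, "Neuro": 60})
print(ok2, remaining2)
```

The second call leaves 30 minutes unallocated, so a form following this rule would not accept the entry.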

When users create items, they automatically have permission to access and use those items. Users can optionally give permission for other individuals and courses to access items. As much as possible, we recommend permissioning items to courses, which reduces the future workload of reassigning items to individuals as you experience staff changes.

Navigate to Admin>Assessment and Evaluation.

Click 'Items'.

From list view, click on any item to open it. From grid view, click the pencil icon to edit an item.

Give permission to an individual or course by first selecting the appropriate title from the dropdown menu, and then beginning to type in the search bar. Click on the desired name from the list that appears below the search bar.

If you give permission to a course, anyone listed as a course contact on the setup page AND with access to the Assessment and Evaluation module will have access to the item.

Currently, permissioning an item to an organization only allows medtech:admin users to access it. For that reason, permissioning items to courses is likely more useful.

After you've added all permissions, you can return to the list of all items by clicking 'Items'.

Toggle between list view and detail view using the icons beside the search bar.

In detail view, see the details of an existing item by clicking on the eye icon.

In detail view, edit an existing question by clicking on the pencil.

To delete items, check off the tick box beside a question (list view) or beside the pencil icon (detail view) and click 'Delete Items'.

From an Edit Item page you can click on a link to view the forms that use an item or the grouped item an item is included in.

When viewing items in list view, the third column shows the number of answer options the item has. Clicking on it takes you to the item, and by clicking again you can see all the forms that use this item.

From the Items tab type into the search box to begin to find questions.

You can apply a variety of filters to refine your search for existing items.

To select a filter, click on the down arrow beside the search box. Select the filter type you want to use, click on it, and then begin to type what you want to find or continue clicking to drill down and find the required filter field. Filter options will pop up based on your search terms or what you’ve clicked through and you can check off the filters you want to apply. Apply multiple filters to further refine your search.

If you're working with a filter with multiple hierarchies, use the breadcrumbs in the left corner of the filter list to go back and add additional filters.

When you’ve added sufficient filters, scroll down and click Apply Filters to see your results.

To remove individual filters from your search, click on the down arrow beside the search field, click a filter type and click on the small x beside each filter you want to remove. Scroll down and click ‘Apply Filters’ to apply your revised selections.

To remove all filters from your search, click on the down arrow beside the search field, click a filter type, scroll down, and click on ‘Clear All’ at the bottom of the filter search window.

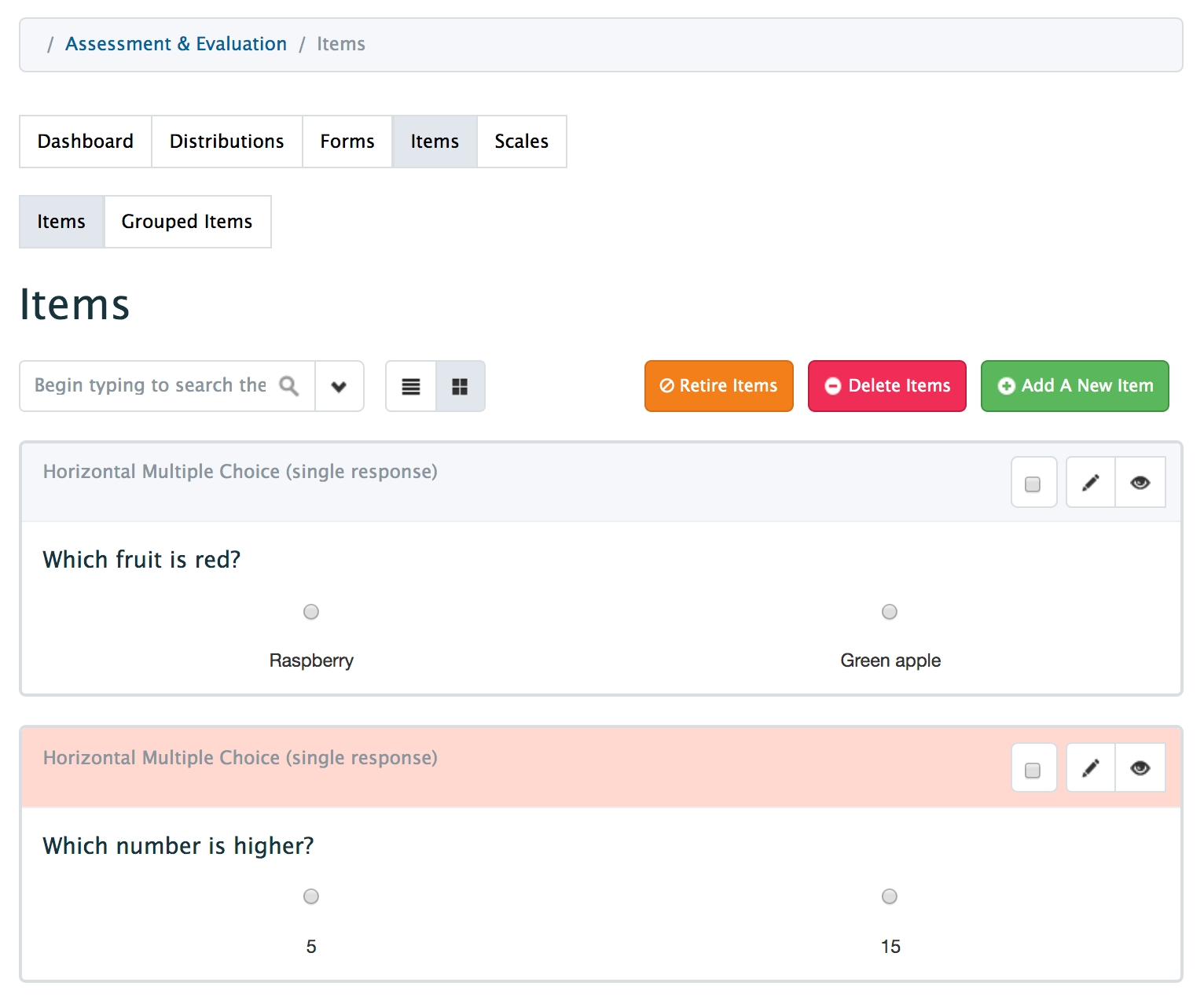

Retiring items allows a user to manage archived, current, and future items effectively. When users create forms they will not see retired items; however, retired items will still be included in all relevant reports. Retired items will continue to show in the item bank, but will have their item type highlighted in red.

Click the tick box on an item card.

Click the orange Retire Items button.

Confirm your action by clicking 'Retire'.

The recently retired item will have the item type bar highlighted in red.

Deleting an item will remove it from the list of Assessment and Evaluation Items.

Click the tick box on an item card.

Click the red Delete Items button.

Confirm your action by clicking 'Delete'.

The deleted item will no longer show on the list of items.

Please note that there is no way through the user interface to recover an accidentally deleted item. If you have deleted something by mistake you will need help from a developer to correct the mistake.

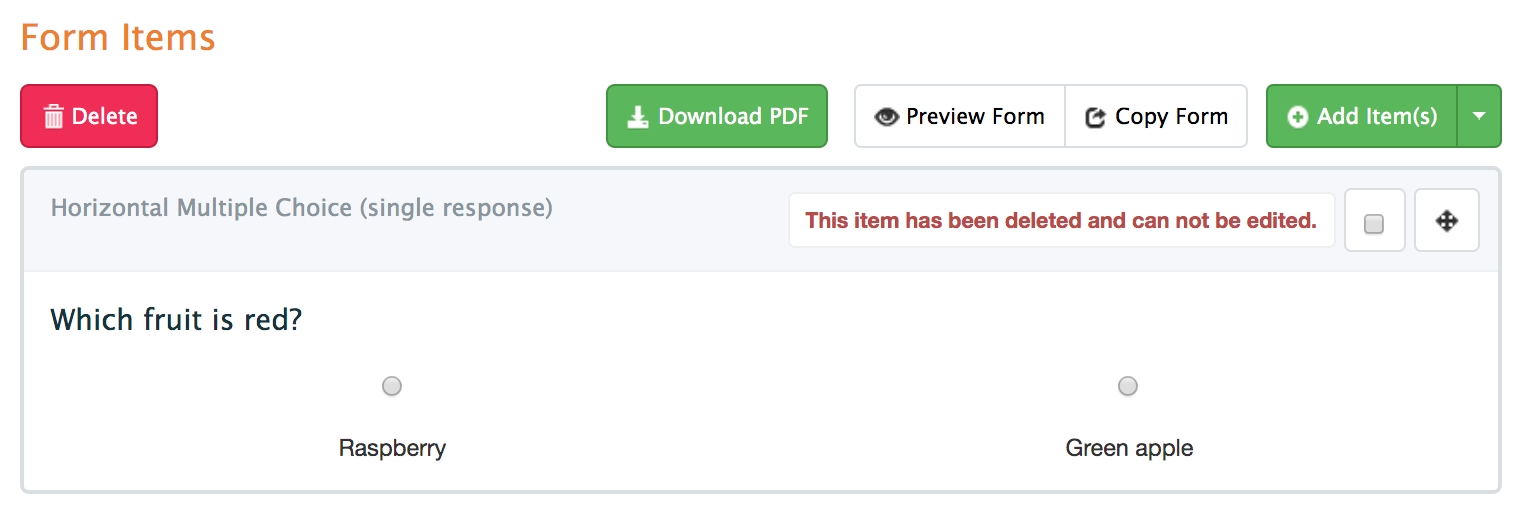

Deleted items will remain on forms that already include that item.

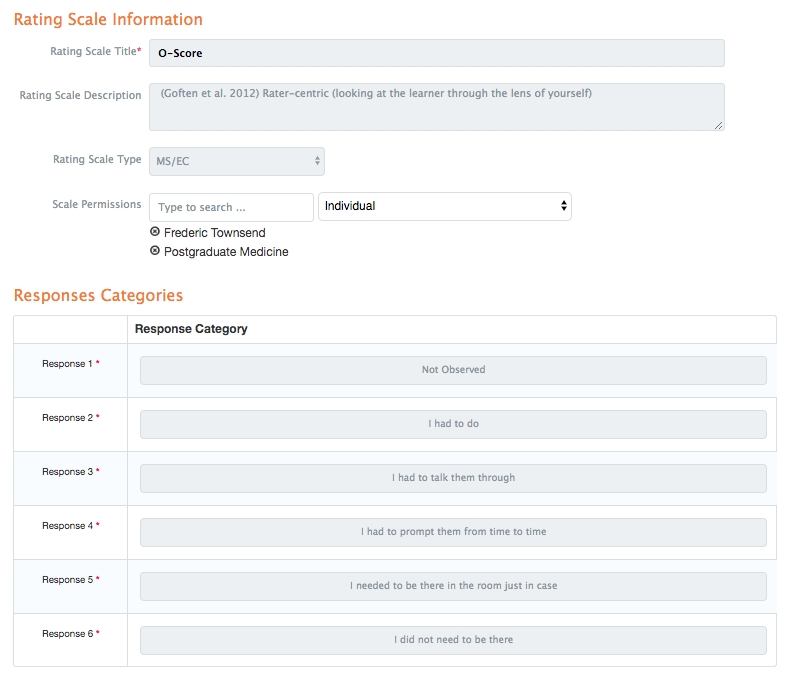

When you create assessment and evaluation items you will have the option of applying rating scales to certain item types; creating rating scales promotes consistency across items and can be a time saver for the administrative staff creating items and forms.

You must be a medtech:admin user to manage rating scales.

For additional detail about rating scales in CBE, including how to add default text to appear with global entrustment items, please see .

Default

Used on Assessment and Evaluation items

Dashboard

Used with Competency-Based Education when displaying assessment information on the Learner Dashboard

Global Assessment

Used on Competency-Based Education forms and form templates

MS/EC (Milestone/Enabling Competency)

Used on Competency-Based Education Supervisor Form Templates

Please note that if you have created a second organization within your Elentra installation, you will need a developer's help to add the rating scale types to your organization.

Navigate to Admin>Assessment and Evaluation.

Click 'Scales' from the A&E tabs list. Any existing rating scales will be displayed.

Click the green 'Add Rating Scale' button.

Complete the required information, noting the following:

Title: Title is required and is what users will see when they build items and add scales so make it clear.

Description: This is optional and is not often seen through the platform.

Rating Scale Type: This defines the type of rating scale you are creating. Later, if you add rating scales to items, or add standard scales to form templates, you will first have to select a scale type. There is no user interface to configure rating scale types.

In a default Elentra installation you'll likely just see a default scale type. In installations with CBE enabled you'll see global rating and milestone/enabling competency scales.

Response Categories

Add or remove response categories by clicking the plus and minus icons.

For each response category, select a descriptor (these are configured through the assessment response categories). Note that you can search for descriptors by beginning to type the descriptor in the search box.

Response Colour - use this when you build a Dashboard scale

Response Character - use this when you build a Dashboard scale

Response Preview - this displays what the icons will look like on the Learner Dashboard

To edit an existing rating scale click on the scale title, make changes as needed, and click 'Save'.

To delete a rating scale click the checkbox beside the rating scale and click 'Delete Rating Scale'.

Note that once a rating scale is in use, you are unable to delete it through the user interface. This is to prevent any changes to previously obtained assessment and evaluation results.

Once scales are created, they will become visible options when creating items and using some form templates.

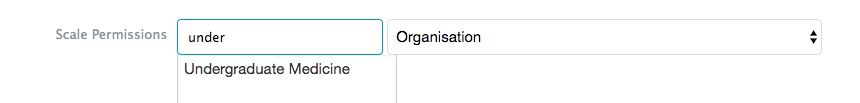

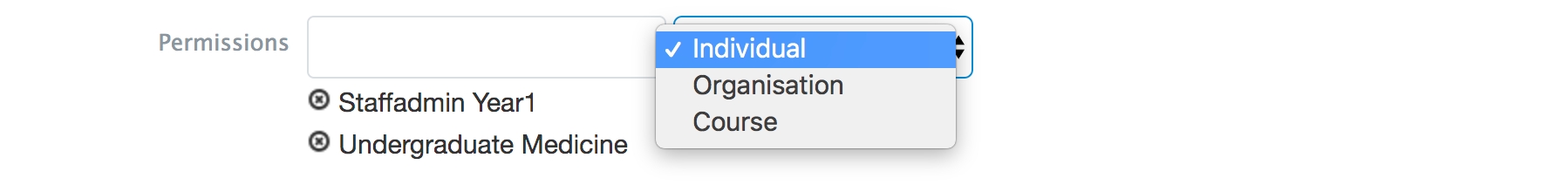

Setting permissions for a scale dictates which users will be able to access a scale when they create assessment and evaluation items. For example, if you set a scale's permissions to Undergraduate Medicine, all users with access to Admin > A & E in the undergraduate organisation will be able to use the scale when creating items. If you set a scale's permissions to several individual users, only those users will be able to access the scale when creating items.

You must create a scale before you can edit the permissions for it. After a scale is created you will automatically be redirected to the edit page.

In the Rating Scale Information section, look for the Scale Permissions heading.

Select Individual, Organisation, or Course from the dropdown options.

Type in the search bar to find the appropriate entity.

Click on the entity name to add it to the permission list.

Add as many permissions as required.

Scroll down and click 'Save'.

Please note that some rating scale values will be ignored in the Weighted CSV Report. Values that will be ignored are:

n/a

not applicable

not observed

did not attend

please select
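As a rough illustration of what ignoring these values means for a calculated score, the sketch below drops any response whose label is on the list before averaging. The function name, the `(label, weight)` input shape, the case-insensitive matching, and the use of a simple mean are all assumptions made for this example; the actual Weighted CSV Report may calculate its values differently.

```python
# Labels listed in the documentation as ignored by the Weighted CSV Report.
IGNORED_LABELS = {
    "n/a",
    "not applicable",
    "not observed",
    "did not attend",
    "please select",
}


def average_excluding_ignored(responses):
    """Average the weights of responses whose label is not on the ignored list.

    `responses` is a list of (label, weight) pairs (a hypothetical shape).
    Returns None when every response was ignored.
    """
    scored = [
        weight
        for label, weight in responses
        if label.strip().lower() not in IGNORED_LABELS  # assumption: case-insensitive
    ]
    if not scored:
        return None
    return sum(scored) / len(scored)
```

For example, `average_excluding_ignored([("Good", 4), ("N/A", 0), ("Excellent", 5)])` averages only the two scored responses, so an "N/A" does not pull the result down.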

An overview of Distributions and their management

New in ME 1.26!

The option for administrative users to disable initial task creation email notifications on distributions.

A database setting to make task expiry 23:59 for specified distribution types (assessment_tasks_expiry_end_of_day)

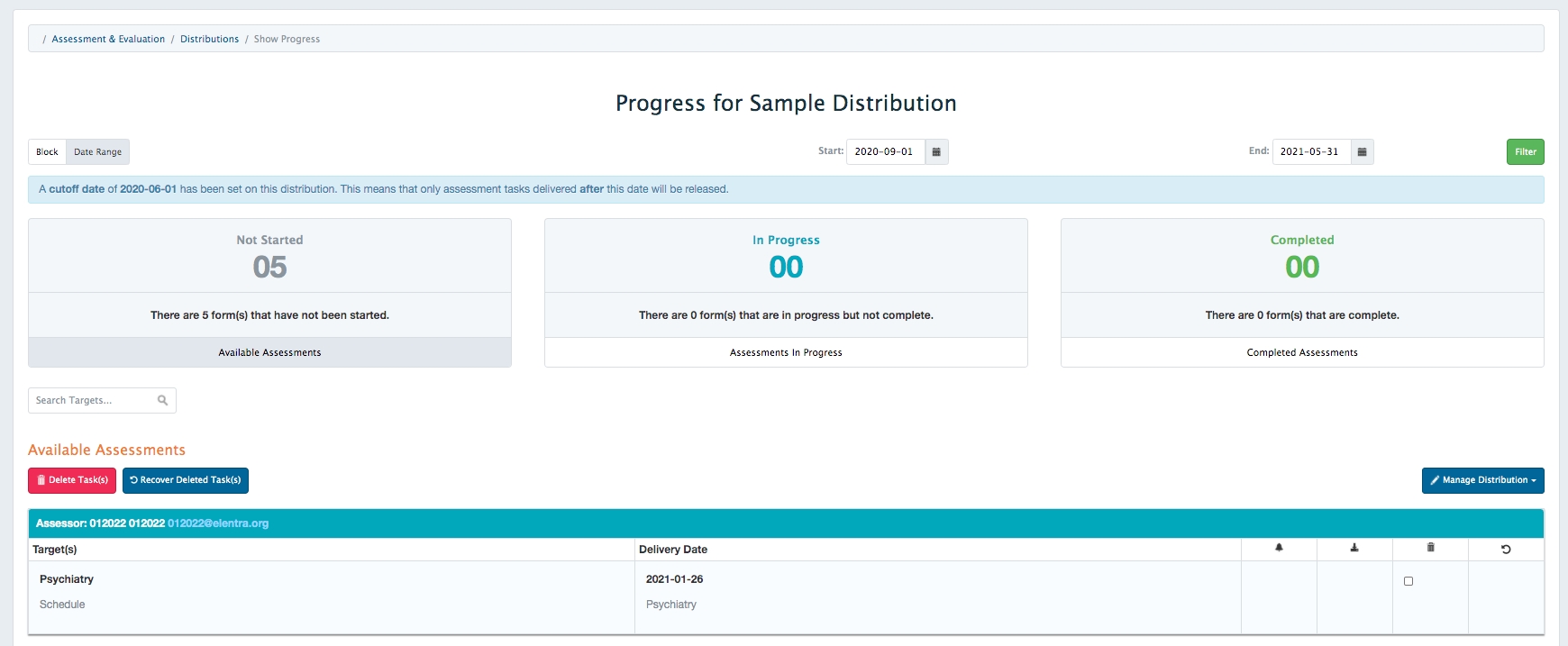

A distribution defines who will complete a task using what form, who or what the task is about, and when the task will be delivered and completed. Usually, administrative staff manage distributions to send tasks to learners, faculty, event participants, etc.

In most cases, when a distribution sends a task, users receive an email notification that a task has been created for them. They can also access tasks from their Assessment and Evaluation badge.

You can watch a recording about Distributions at (login required).

You must have one of the following permission levels to access this feature:

Medtech:Admin

Staff:Admin

Staff:PCoor (assigned to a course/program)

Faculty:Director (assigned to a course/program)

Distribution: defines who will complete a task using what form, who/what the task is about, and when the task should be delivered and completed

Assessor/Evaluator: The person completing a form/task

Target: The person, course, experience or other that the form/task is about

Distribution method: How the form/task is assigned to people (e.g. based on a date range, rotation schedule, event schedule, etc.)

Distribution wizard: Walks users through creating any distribution.

Rotation Schedule: Use a rotation schedule distribution to send forms based on a rotation schedule. This could include assessments of learners participating in a rotation, evaluation by learners of a specific rotation site or experience, etc.

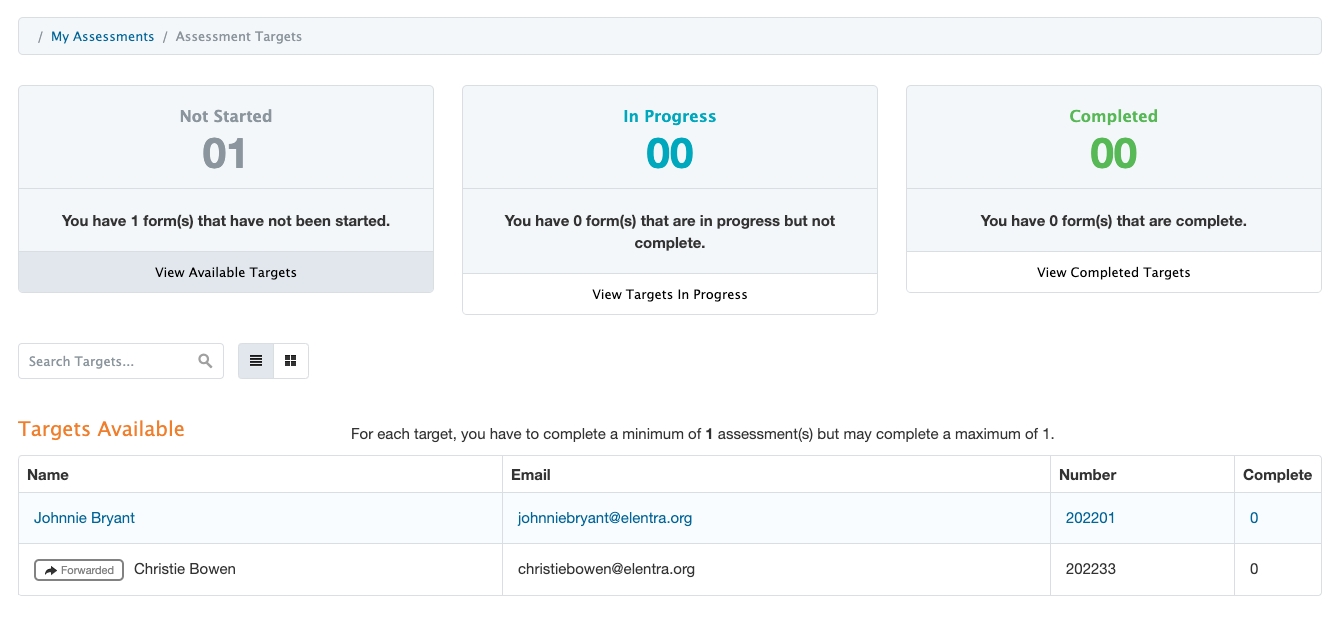

Delegation: Use a delegation to schedule a distribution to be sent to an intermediate person who will later forward the tasks to the appropriate assessor/evaluator. For example, if you want to set up distributions in September, but it is unknown who learners will work with in January because a clinic schedule hasn't been set yet, you could send the distribution to a delegator to forward once the clinic schedule is known.

Learning Event Schedule: Use a learning event schedule distribution when you want to have participants evaluate an event, when you want participants to evaluate the faculty teaching particular types of events, or when you want faculty to assess the learners participating in certain types of events.

Date Range: Use a date range to create the most generic type. You could use this for faculty or course evaluations, or for assessment of learners at any point.

Ad Hoc: Used for assessments only. Allows a user to trigger an assessment when needed. This differs from on-demand workflows in that the distribution controls some things, like the potential list of assessors.

Reciprocal: Allows you to link two distributions so that one generates tasks based on the other (e.g., distribution 1 asks faculty to assess learners; once Faculty A has assessed Learner B, distribution 2 can be configured to get Learner B to evaluate Faculty A).

For use cases where certain evaluations and/or assessments need to be confidential in the sense that the assessor should be hidden, Elentra includes a feature when building forms to hide the names of assessors/evaluators. This could apply to things like faculty evaluations and especially rotation evaluations.

One thing to be aware of if using the Confidentiality option on forms is that once the name of the assessor/evaluator is changed to Confidential, it will be impossible for an administrator to monitor a distribution and see who has/has not completed what. If you typically monitor distributions or use task completion to populate some aspect of a course gradebook (e.g. a professionalism score), you may not want to use the Confidentiality option.

At the bottom of the Form Information section you'll see a checkbox to enable form confidentiality.

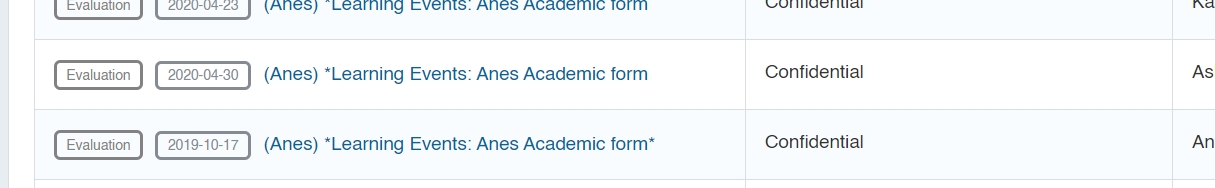

Enabling this feature changes the way the assessor is displayed in many areas across the platform. For example, on the Admin Assessment and Evaluation page, any assessment/evaluation that is tied to a form with the setting enabled will have the assessor marked as confidential:

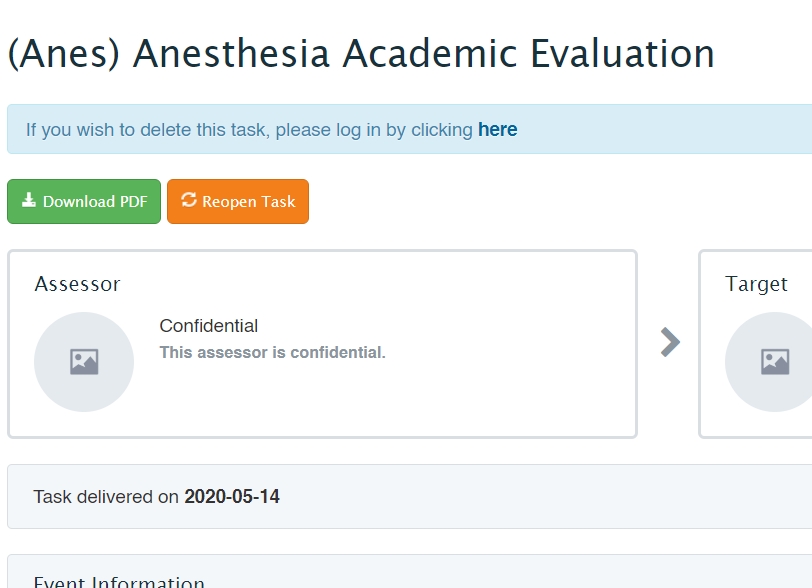

Clicking on one of these assessments will also have the assessor hidden within the assessor card:

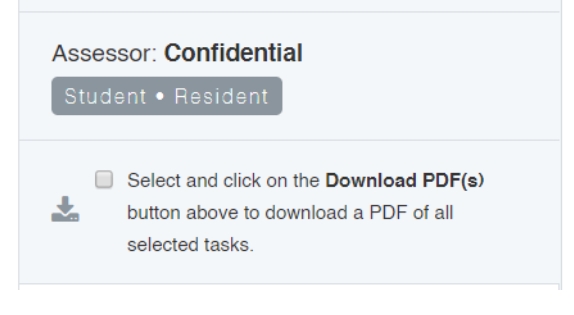

Assessment cards will also hide the name, even if it is released to the target in the case of faculty evaluations:

Reports have also been updated so that whenever the assessor name is returned for a form with the setting enabled, “Confidential” will be shown instead. The same occurs if you try to download an assessment as a PDF.

The wording used throughout the feature can easily be replaced in the translation file for customization.

If you are in an organisation that is not using Elentra's Competency-Based Education tools, the form templates tab is unlikely to be something you will ever use.

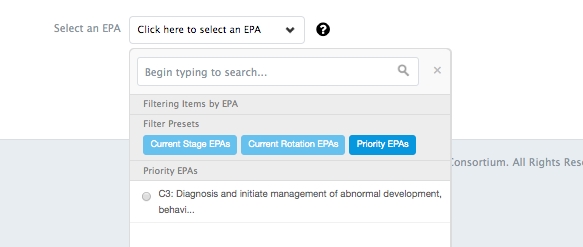

Form templates allow users to make forms more consistent and in the case of the Competency-Based Education module, allow users to configure the requirements for multiple forms through one user interface. Administrative staff specify EPAs and milestones, contextual variables, and rating scales to be used on assessment forms for clinical environments, and these forms get created after a form template is published. See for more information.

Form templates are built off of something called a form blueprint and there is currently no way through the user interface to configure a form blueprint. If you want different form templates from those in a default installation of Elentra, you'll need a developer's help.

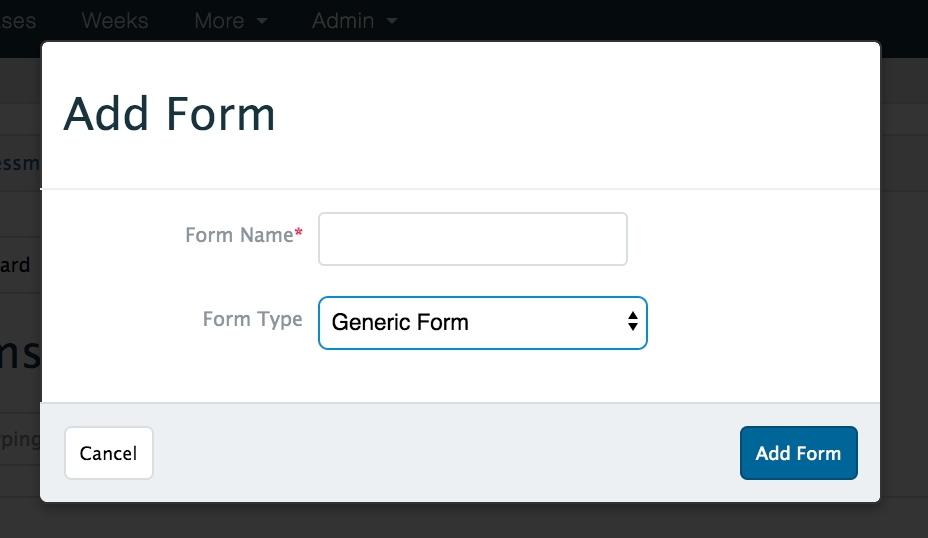

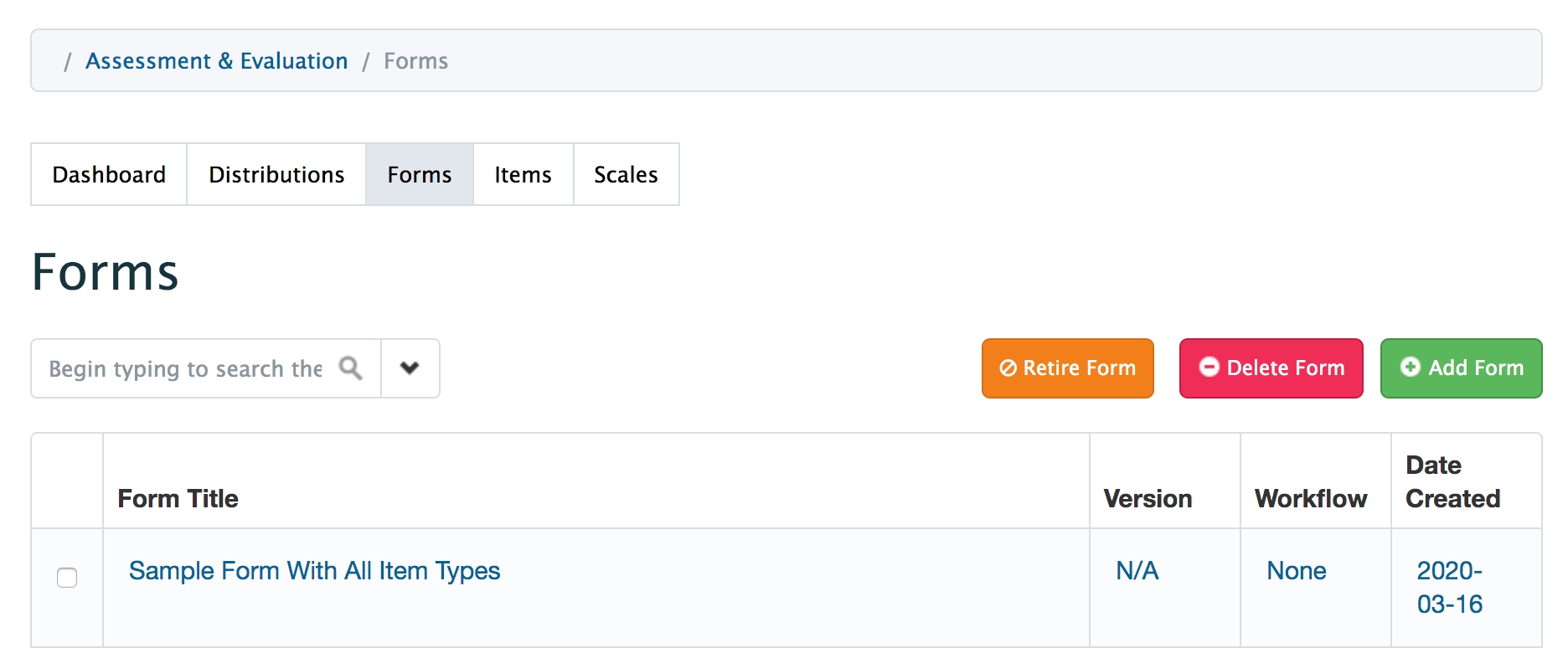

Navigate to Admin>Assessment & Evaluation.

Click 'Forms'.

Click 'Add Form'.

Provide a form title and select a form type (if applicable). See list of form types above.

Depending on the form type selected, you may be required to identify a course/program.

Click 'Add Form'.

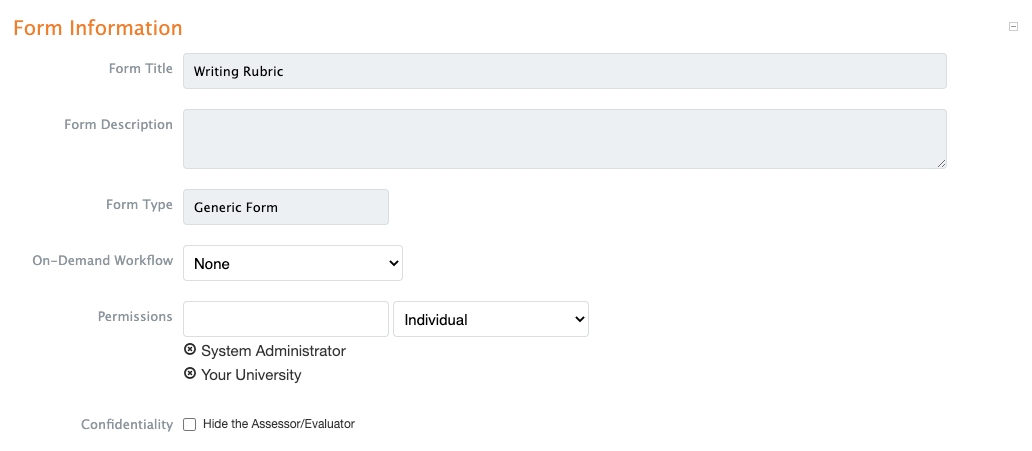

Form Title: This will be set based on the previous step although you can edit the title if needed.

Form Description: Optional. This will display to users when the form is accessed.

Form Type: This will be set based on the previous step.

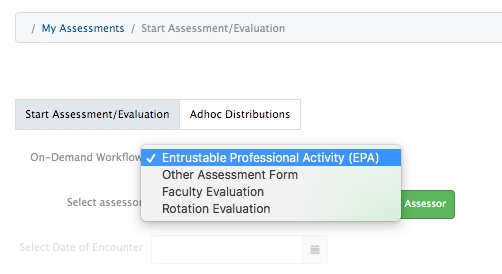

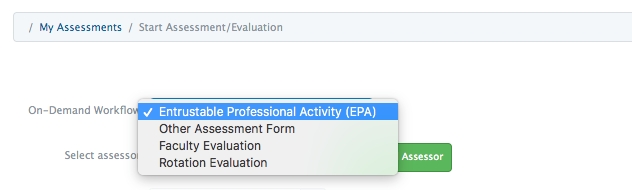

On Demand Workflow: If your organization uses workflows like EPA or Other Assessment (i.e. allows users to initiate specific forms on-demand), make the appropriate selection. Set to None if you do not want users to access this form on-demand.

Set form permissions to give other users access to this form. You can optionally give permissions to individuals, a course or an organisation.

Form Permissions Tips:

Any individual given permission to the form will be able to edit it until it is used in a distribution.

We strongly recommend permissioning forms to at least a course so that staffing changes are simplified. If you do this, any user who is a course contact for that course and who also has permission to access Admin > Assessment & Evaluation, will be able to access the form and include it in a distribution if needed.

If Jane Doe is the only person with access to a form and she retires, you'll need to manually reassign all her forms to a new user. If the form is permissioned to a course, any course contact with access to Assessment & Evaluation will be able to access the form.

Currently, permissioning a form to an organization only allows medtech:admin users to access it. As such we recommend relying mostly on course permissions.

Please note that forms using a workflow (e.g. EPA, Other Assessment, Rotation Evaluation or Faculty Evaluation) must be permissioned to the appropriate course.

Standard Rotation Evaluation and Standard Faculty Evaluation forms must also be permissioned to a course.

Confidentiality

Check this box if you'd like completed tasks using this form to replace the assessor/evaluator name with "Confidential." This can be useful for things like course or faculty evaluations.

One thing to be aware of if using the Confidentiality option on forms is that once the name of the assessor/evaluator is changed to Confidential, it will be impossible for an administrator to monitor a distribution and see who has/has not completed what. If you typically monitor distributions or use task completion to populate some aspect of a course gradebook (e.g. a professionalism score), you may not want to use the Confidentiality option.

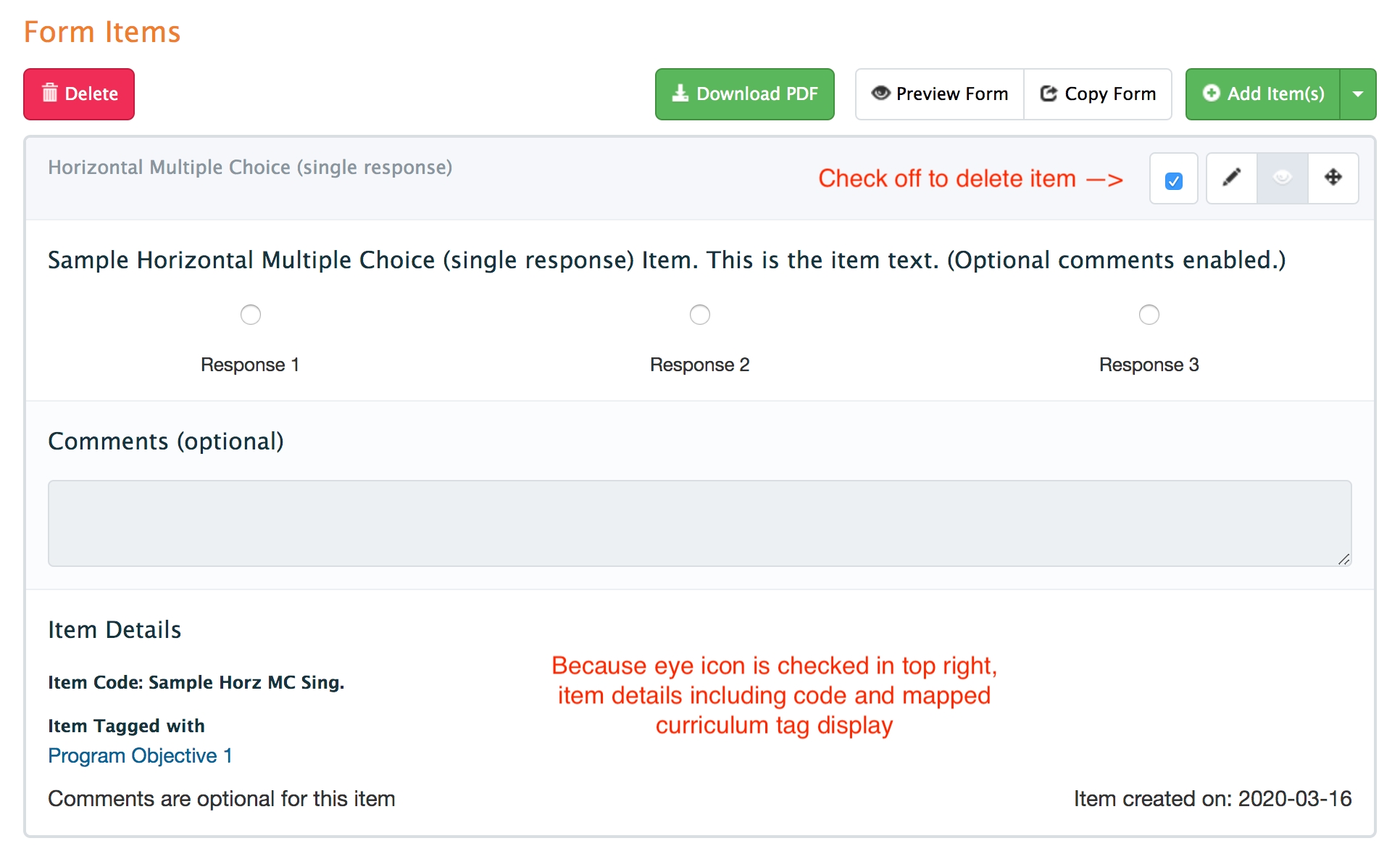

Click 'Add Item(s)' to add existing items.

Note that you can also add grouped items, free text (e.g., to provide instructions), or a curriculum tag set to your form. To add any of these, click on the down arrow beside 'Add Items'. If you choose to add Free Text or a Curriculum Tag Set, please note that you must save your choices within the item box using the small 'Save Required' button.

Note: Adding a Curriculum Tag Set is a very specific tool that supports field notes for use in family medicine. Most users should ignore this option.

Search for existing items and tick off the check boxes, then click 'Attach Selected' to apply your choices.

To create new items while creating your form, click Add Items and then click Create & Attach a New Item. When you complete your new item and save it, you will be returned to the form you are in the process of building.

Save the form when you have added all the relevant items.

To preview your form, click on the eye icon/Preview Form button.

To download a copy of the form, use the Download PDF button.

To delete items on a form, tick off the box on the item card and then click the red Delete button on the left.

To rearrange items on a form, click the crossed arrow icon on the item card and drag the item to where you want it to be.

To edit an item, click on the pencil icon on the item card. Note that an item already in use on a form that has been distributed cannot be edited. Instead, you must copy the item and attach the new version in order to edit and use it.

To quickly view the details of an item, click on the eye icon on the item card.

Navigate to Admin>Assessment & Evaluation.

Click 'Forms'.

Use the search bar to look for the form you want to copy. Click the down arrow beside the search bar to apply filters to refine your search results.

Click on the name of the form you want to copy.

Click 'Copy Form' and provide a new name for the copied form.

Click 'Copy Form'.

Edit the form as needed (e.g., add additional items, change permission, etc.).

If you edit an item on a form and that item is in use on other forms, you will affect all of the associated forms. You can optionally view all forms that include the item.

For grouped items you can optionally copy and attach the grouped item to the form allowing you to change it as needed.

Create new item linkage

Create new items

For single items you can optionally copy the item to edit it. This will create a brand new item with no connection/link to the item it is copied from.

Click 'Save'.

Retiring a form means it will remain available in existing distributions, and reports, but will not be available for any new distribution.

Navigate to Admin>Assessment & Evaluation.

Click 'Forms'.

Use the search bar to look for the form you want to retire. Click the down arrow beside the search bar to apply filters to refine your search results.

Tick off the box beside the form name (you can select multiple forms to retire at once), and then click the orange Retire Form button.

You will be prompted to confirm your action. Click 'Retire'.

Retired forms will display with a red highlight around them.

Deleting a form means that all pending and in-progress tasks that used that form will not have a form associated with them and will display an error message stating that the form has been deleted when an assessor/evaluator tries to access the form.

Navigate to Admin>Assessment & Evaluation.

Click 'Forms'.

Use the search bar to look for the form you want to delete. Click the down arrow beside the search bar to apply filters to refine your search results.

Tick off the box beside the form name (you can select multiple forms to delete at once), and then click the red Delete Form button.

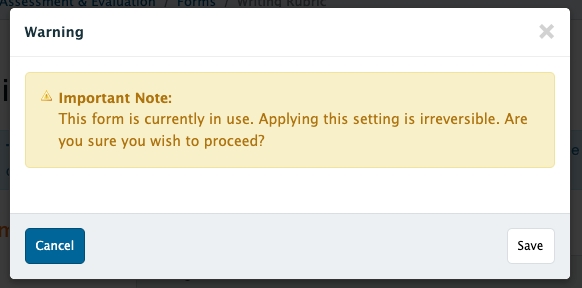

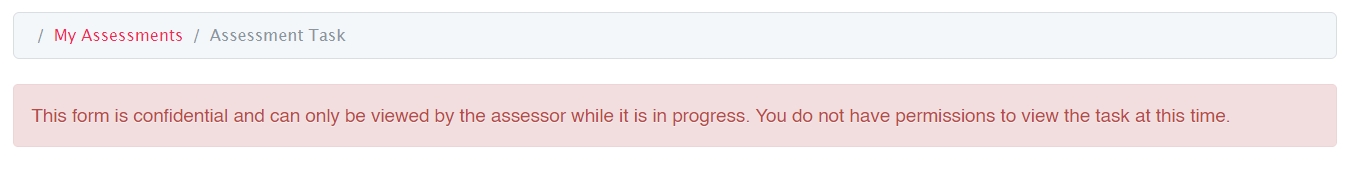

If a form is not in use, this checkbox can be enabled/disabled at will. For forms that are in use, this setting can ONLY be enabled (a developer can disable it in the DB). For this reason, admins will be prompted by a warning box confirming their choice:

In the case where tasks can have multiple targets, if someone who is not the assessor attempts to view any of the tasks while at least one is pending. This is done because if there are multiple targets, you can switch between them on the assessment page.

See more detail .

A date range distribution allows you to send a form to the appropriate assessors/evaluators in a specific date range.

Navigate to Admin>Assessment and Evaluation.

Click 'Distributions' above the Assessment and Evaluation heading.

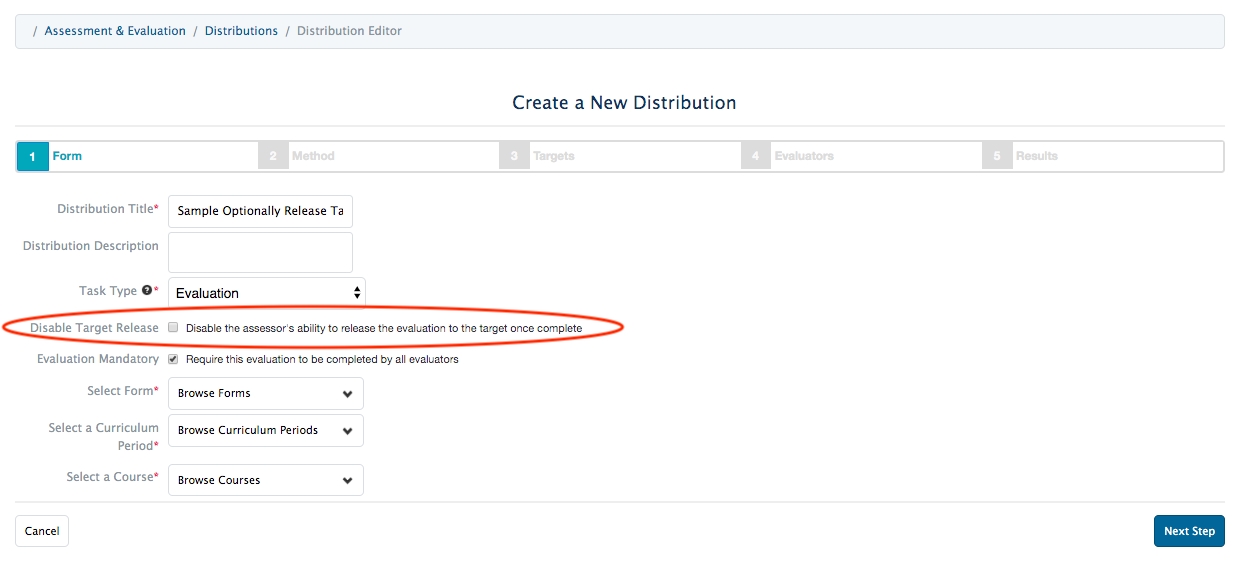

Click 'Add New Distribution'.

Distribution Title: Provide a title. This will display on the list of Distributions that curriculum coordinators and program coordinators can view.

Distribution Description: Description is optional.

Task Type: Hover over the question mark for more detail about how Elentra qualifies assessments versus evaluations. If a distribution is to assess learners, it's an assessment. If it is to evaluate courses, faculty, learning events, etc. it is an evaluation. Notice that the language on Step 4 will change if you switch your task type, as will other steps of the wizard.

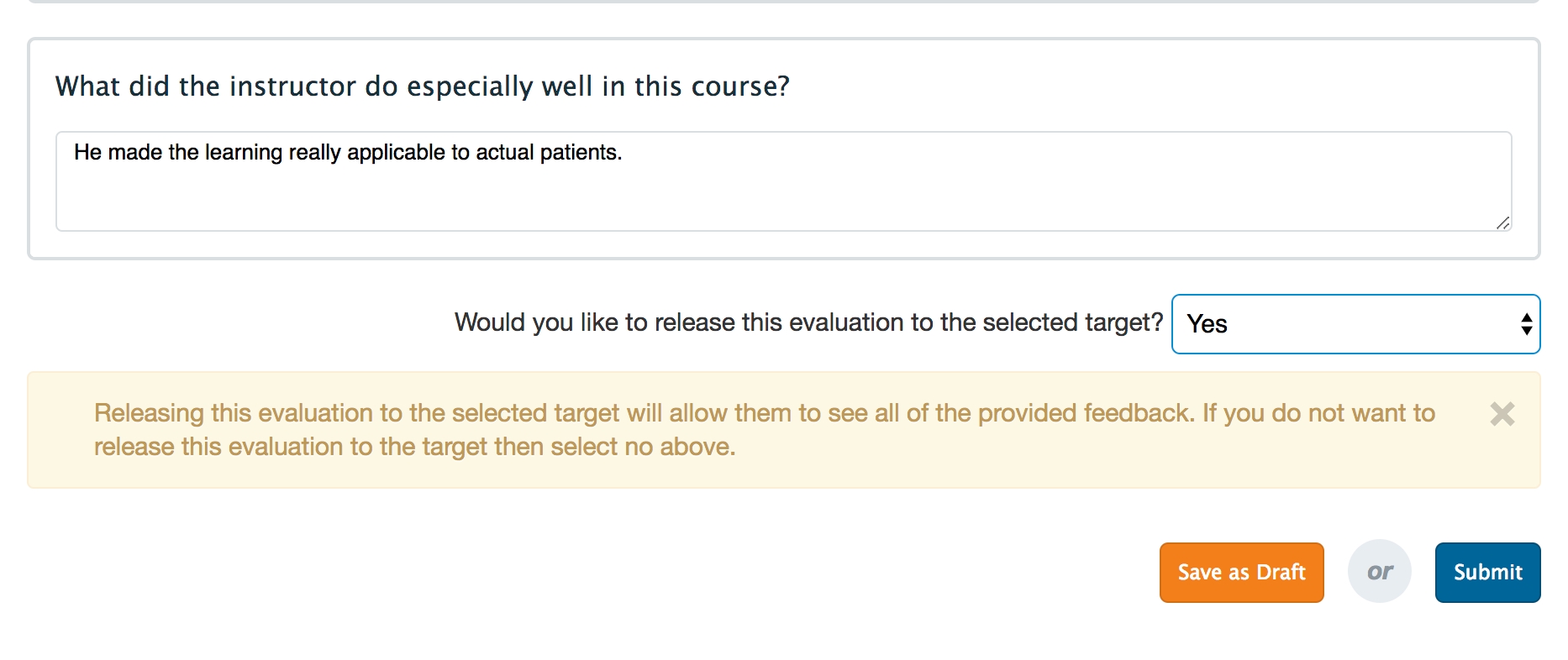

Disable Target Release: This will only display for Evaluations. If you do not want evaluators to be able to release the form to the target upon completion, check this box. If left unchecked, evaluators will be able to release the form to the target upon completion.

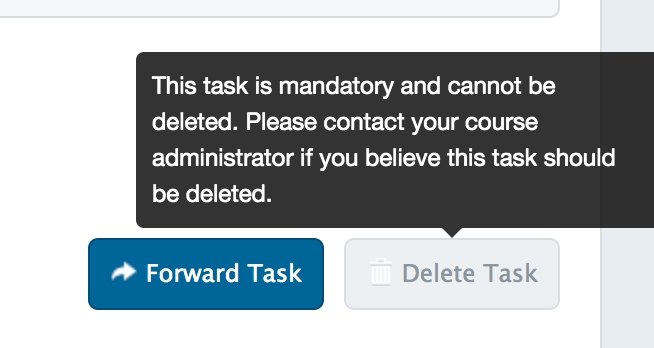

Assessment/Evaluation Mandatory: This will be checked off by default. Mandatory tasks can't be deleted by assessors/evaluators.

Disable Reminders: Check this box to exclude tasks from this distribution from reminder notification emails. This option will only display if specific database settings are enabled. If you check this box, assessors/evaluators will still be sent an initial task creation notification, but weekly reminder summary emails will be disabled.

Select Form: The form you want to distribute must already exist and you must have permission to access it; pick the appropriate form.

Select a Curriculum Period: The curriculum period you select will impact the list of available learners and associated faculty.

Select a Course: The course you select will impact the list of available learners and associated faculty.

Click 'Next Step'

Distribution Method: Select 'Date Range Distribution' from the dropdown menu.

Start Date: This is the beginning of the period the form is meant to reflect.

End Date: This is the end of the period the form is meant to reflect.

Delivery Date: This is the date the task will be generated and delivered to the assessors/evaluators. (The delivery date will default to be the same as the start date.)

Task Expiry Date: Optional. Set the date on which the tasks generated by the distribution will automatically expire (i.e. disappear from the assessor's task list and will no longer be available to complete). This tool was updated in ME 1.22 so that tasks expire at 11:59 pm on the day you select.

Warning Notification: If you choose to use the Task Expiry option, you'll also be able to set an automatic warning notification if desired. This will send an email to assessors a specific number of days and hours before the task expires.

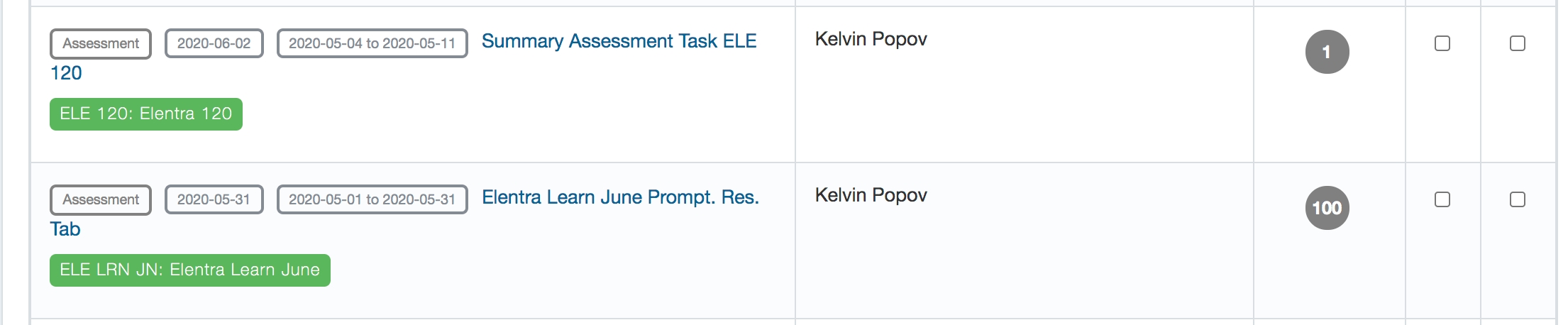
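To make the timing of the Task Expiry Date and Warning Notification concrete, here is a minimal sketch of the arithmetic described above. The function names and parameters are hypothetical. The sketch only assumes what the text states: tasks expire at 11:59 pm on the selected day, and the warning is sent a set number of days and hours before that moment.

```python
from datetime import date, datetime, time, timedelta


def expiry_moment(expiry_date: date) -> datetime:
    """Tasks expire at 11:59 pm on the selected expiry date."""
    return datetime.combine(expiry_date, time(23, 59))


def warning_moment(expiry_date: date, days_before: int, hours_before: int) -> datetime:
    """When the warning email would go out, counted back from the expiry moment."""
    return expiry_moment(expiry_date) - timedelta(days=days_before, hours=hours_before)


# A task expiring on 15 March with a warning set to 2 days and 6 hours before expiry:
print(expiry_moment(date(2024, 3, 15)))         # 2024-03-15 23:59:00
print(warning_moment(date(2024, 3, 15), 2, 6))  # 2024-03-13 17:59:00
```

Because expiry falls at the end of the selected day, a warning of "1 day, 0 hours" would arrive late in the evening of the previous day rather than in the morning. This is worth keeping in mind when choosing the values.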

Delay Task Creation: This option relates specifically to creating a summary assessment task distribution. This allows you to create a distribution that will pull in and display completed tasks from other distributions. In effect, the assessor of the distribution you create will be able to see items from previously completed tasks and take them into account when completing their own task.

Select a distribution (note that you will only see distributions to which you have access).

Define how many tasks must be completed in the linked distribution(s) before the new distribution will take effect.

Enter a fallback date. This date represents when your distribution will create tasks whether or not the minimum number of tasks have been completed in the linked distribution.

You can adjust the fallback date on the fly and the distribution will adjust accordingly to only generate tasks as appropriate (previously created tasks will still remain).

If you set a fallback date that falls before the release date, the fallback date will be ignored.
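The Delay Task Creation rules above can be summarised as a single decision. The sketch below is an interpretation of the text, not Elentra's code: `should_create_tasks` and its parameters are hypothetical names, and treating a missing fallback date as "never fall back" is an assumption.

```python
from datetime import date
from typing import Optional


def should_create_tasks(
    completed_in_linked: int,
    minimum_required: int,
    today: date,
    fallback_date: Optional[date],
    release_date: date,
) -> bool:
    """Decide whether the delayed distribution should generate its tasks yet."""
    # Enough tasks completed in the linked distribution(s): create tasks.
    if completed_in_linked >= minimum_required:
        return True
    # A fallback date earlier than the release date is ignored.
    if fallback_date is None or fallback_date < release_date:
        return False
    # Otherwise the fallback date forces task creation once it is reached.
    return today >= fallback_date
```

With a minimum of 3 completed tasks and a fallback date of 1 February, a learner with only 1 completed task would have the summary task created on 1 February, while a learner with 3 completed tasks would have it created as soon as the third task is done.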

The target is who or what the form is about.

Assessments/Evaluations delivered for: Use this area to specify the targets of the form.

For Assessments your options include: targets are the assessors (self-assessment), and learners (

For Evaluations your options include: faculty members, courses, course units (if in use), individuals regardless of role, external targets, and rotation schedules.

Select Targets: Here you can specify your targets using the dropdown selector.

CBME Options: This option applies only to schools using Elentra for CBME. Ignore it and leave it set to non CBME learners if you are not using CBME. If you are a CBME school, this allows you to apply the distribution to all learners, non CBME learners, or only CBME learners as required.

Target Attempt Options: Specify how many times an assessor can assess each target, OR whether the assessor can select which targets to assess and complete a specific number (e.g. assessor will be sent a list of 20 targets, they have to complete at least 10 and no more than 15 assessments but can select which targets they assess).

If you select the latter, you can define whether the assessor can assess the same target multiple times. Check off the box if they can.
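The second Target Attempt option (the assessor chooses which targets to assess, within a minimum and maximum) amounts to a small set of checks. The sketch below models them for illustration only. `check_assessor_selection`, its parameters, and the wording of the messages are hypothetical.

```python
from collections import Counter


def check_assessor_selection(available, chosen, minimum, maximum, allow_repeats=False):
    """Return a list of problems with an assessor's chosen targets (empty if valid)."""
    problems = []
    # Every chosen target must come from the list sent to the assessor.
    unknown = sorted(set(chosen) - set(available))
    if unknown:
        problems.append(f"not on the target list: {', '.join(unknown)}")
    # Repeat assessments of the same target are allowed only if the box is checked.
    if not allow_repeats:
        repeated = sorted(t for t, n in Counter(chosen).items() if n > 1)
        if repeated:
            problems.append(f"assessed more than once: {', '.join(repeated)}")
    # The number of completed assessments must fall within the configured range.
    if len(chosen) < minimum:
        problems.append(f"{len(chosen)} completed, at least {minimum} required")
    if len(chosen) > maximum:
        problems.append(f"{len(chosen)} completed, no more than {maximum} allowed")
    return problems
```

Using the example from the text (20 targets, at least 10 and no more than 15 assessments), an assessor who completes 10 assessments of 10 different targets passes every check. An assessor who reaches 10 by assessing one target twice passes only if repeat assessments are enabled.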

Click 'Next Step'

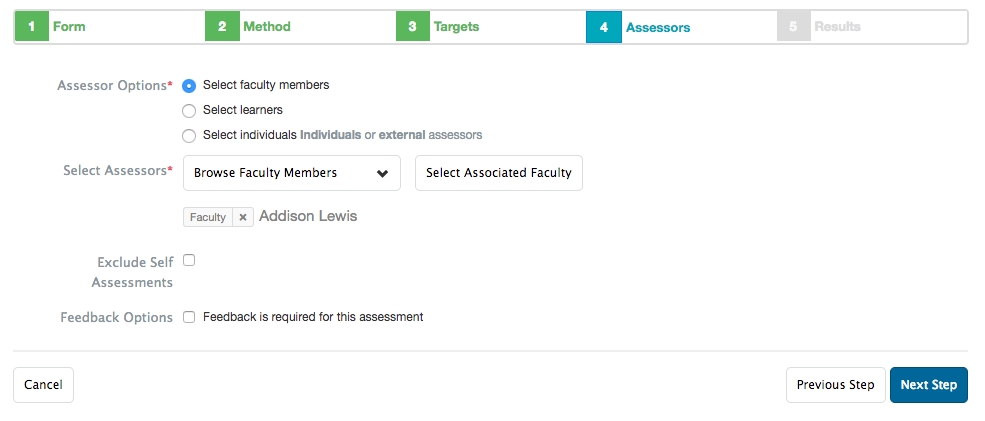

The assessors are the people who will complete the form.

There are three options:

Select faculty members

Browse faculty and click on the required names to add them as assessors

Select Associated Faculty will add the names of all faculty listed as course contacts on the course setup page

Exclude self-assessments: If checked, this will prevent the assessor from completing a self-assessment

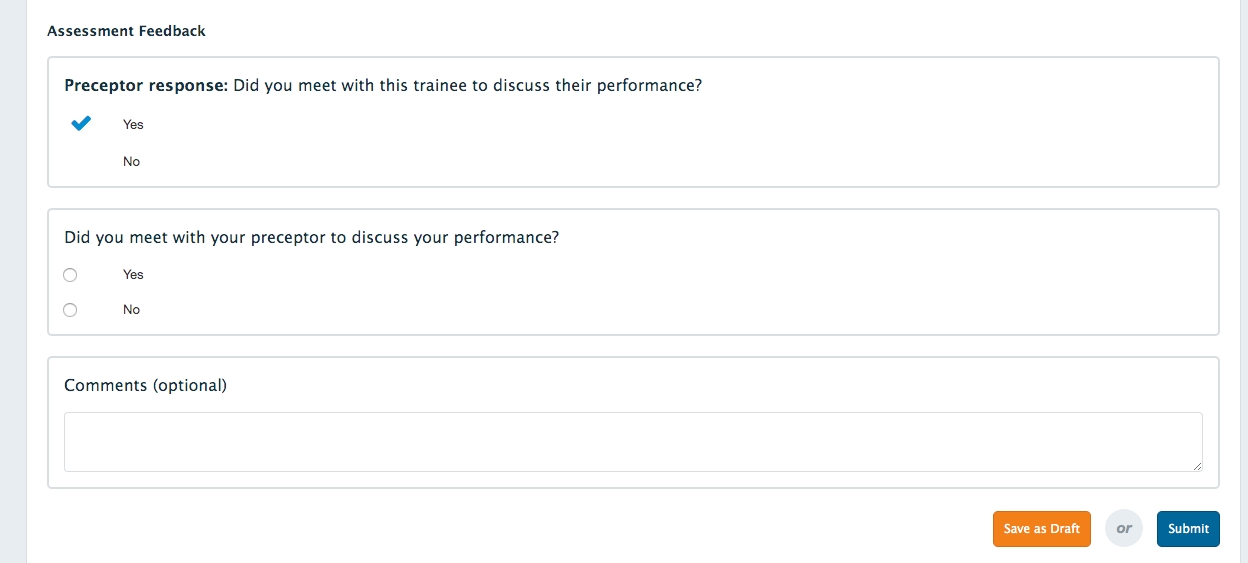

Feedback Options: This will add a default item to the distribution asking if the faculty member met with the trainee to discuss their assessment.

Select learners

Click Browse Assessors and click on the appropriate option to add learners as assessors

Exclude self-assessments: If checked, this will prevent the assessor from completing a self-assessment

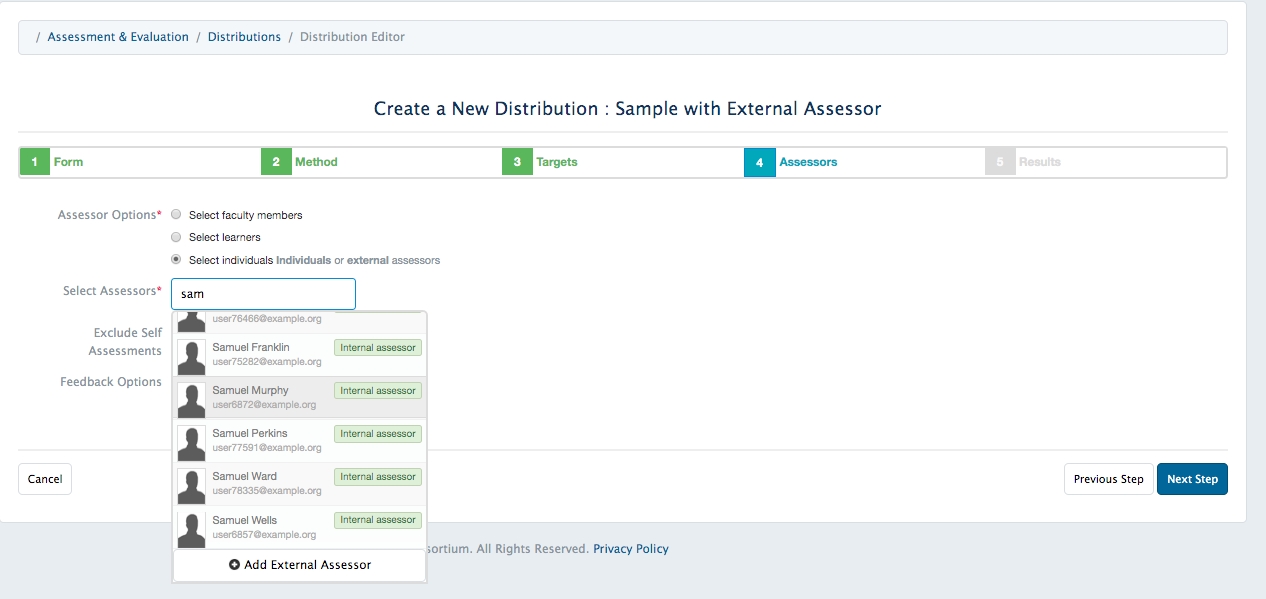

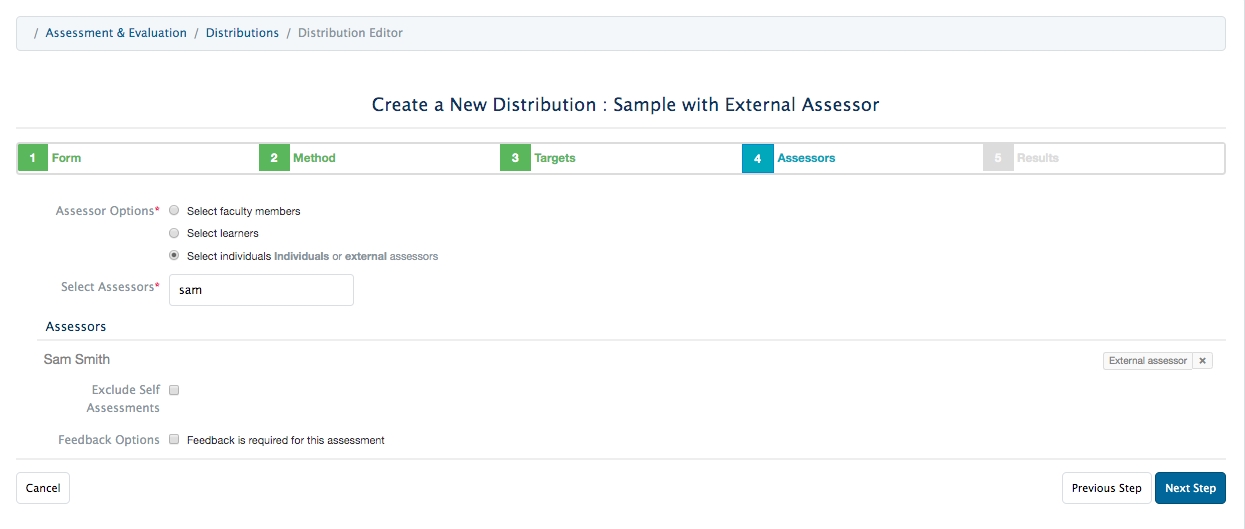

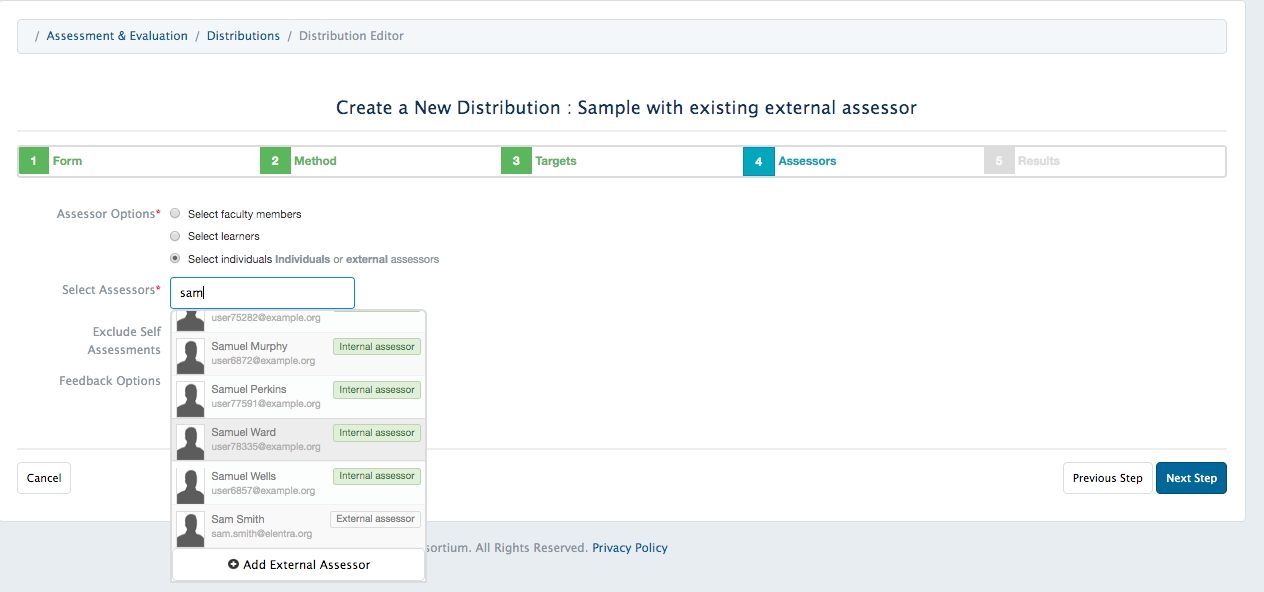

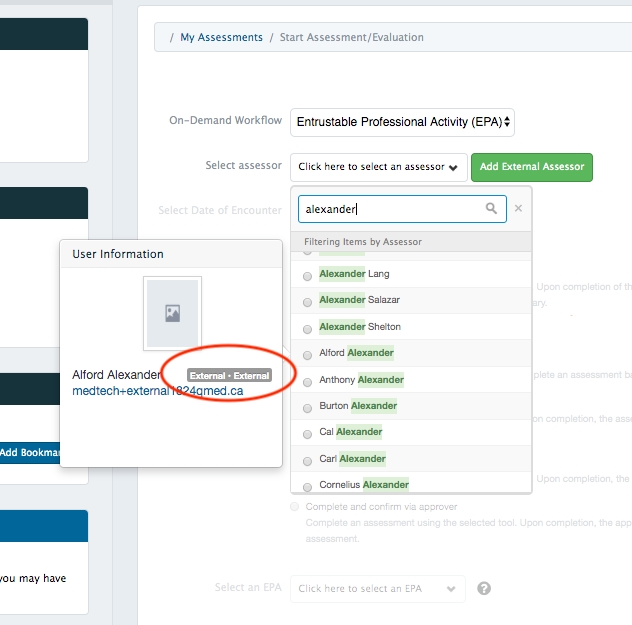

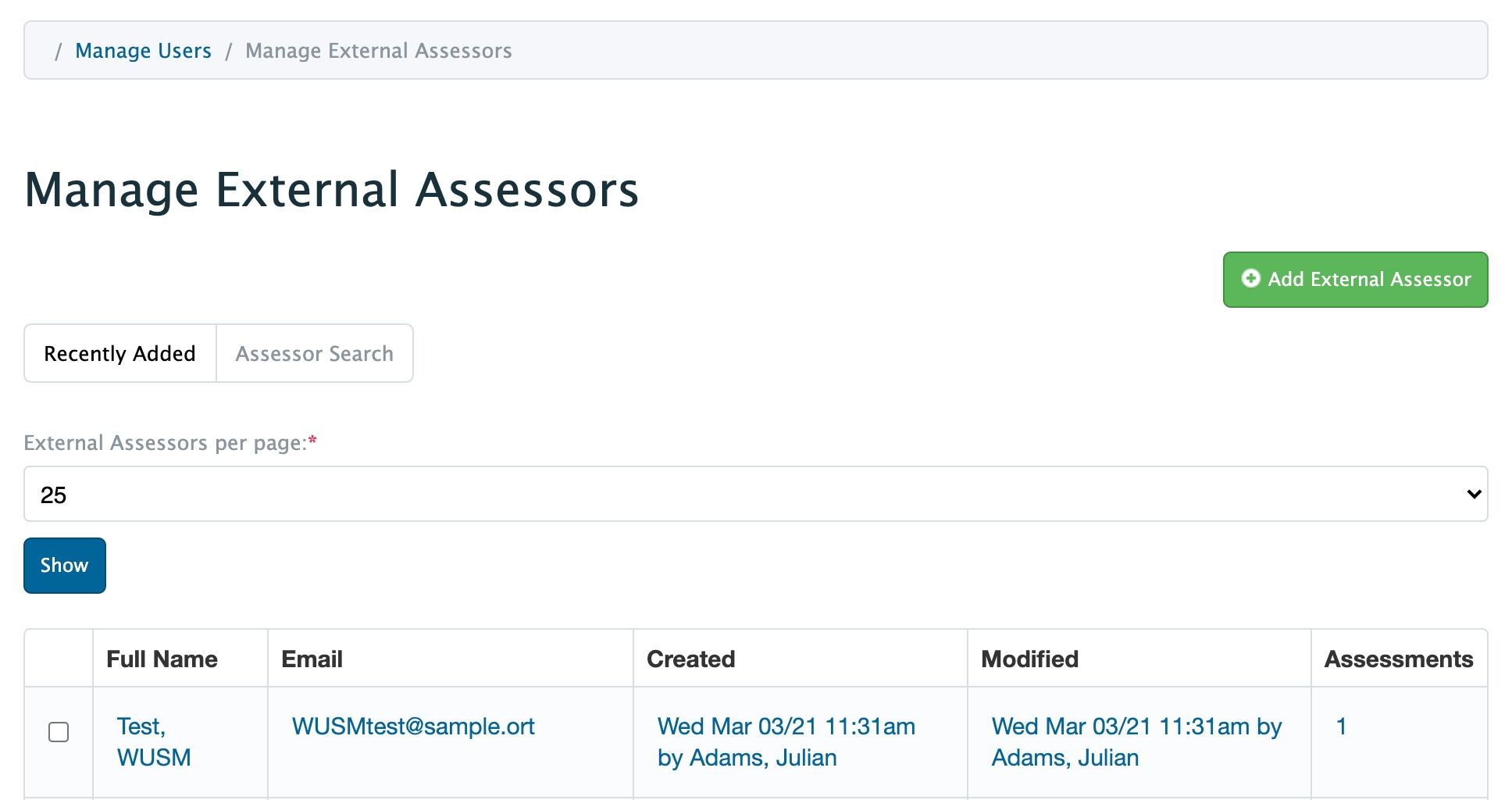

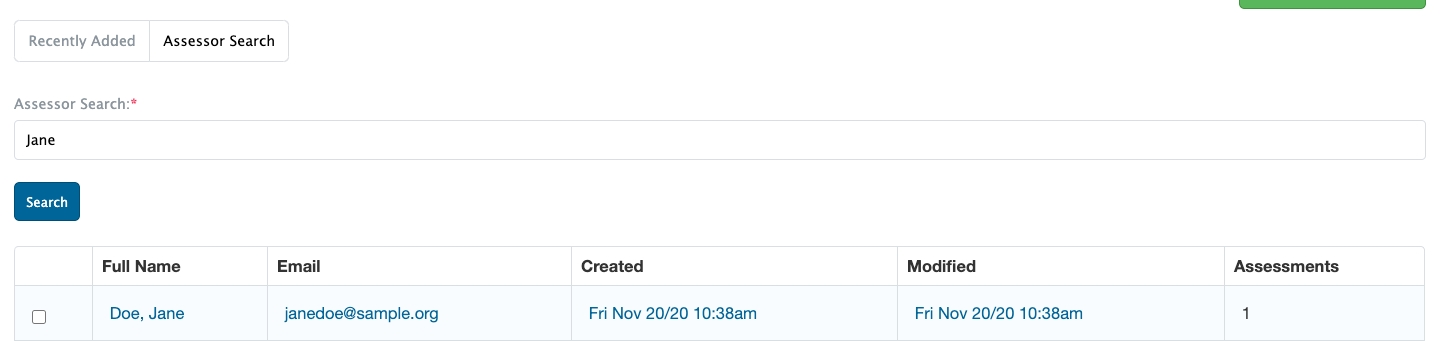

Select individuals external to the installation of Elentra

This allows you to add external assessors to a distribution

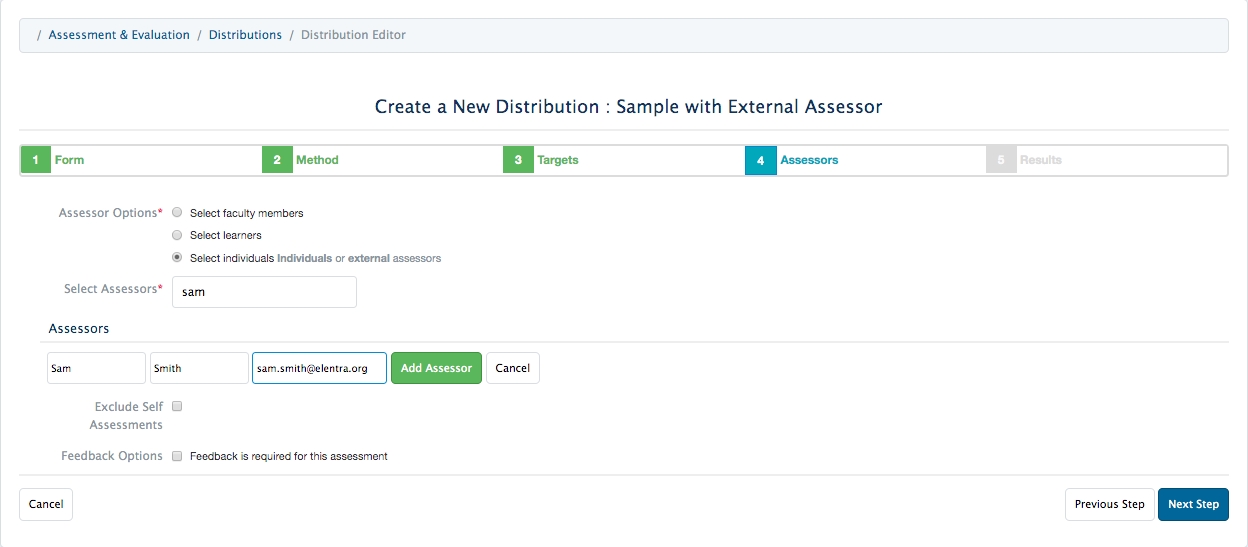

Begin to type an email address. If the user already exists, you'll see them displayed in the dropdown menu. To create a new external assessor, scroll to the bottom of the list and click 'Add External Assessor'.

Provide first and last name, and email address for the external assessor and click 'Add Assessor'

Feedback Options: This will add a default item to the distribution asking if the faculty member met with the trainee to discuss their assessment.

Exclude Self Assessments: Check this to stop learners from completing a self-assessment

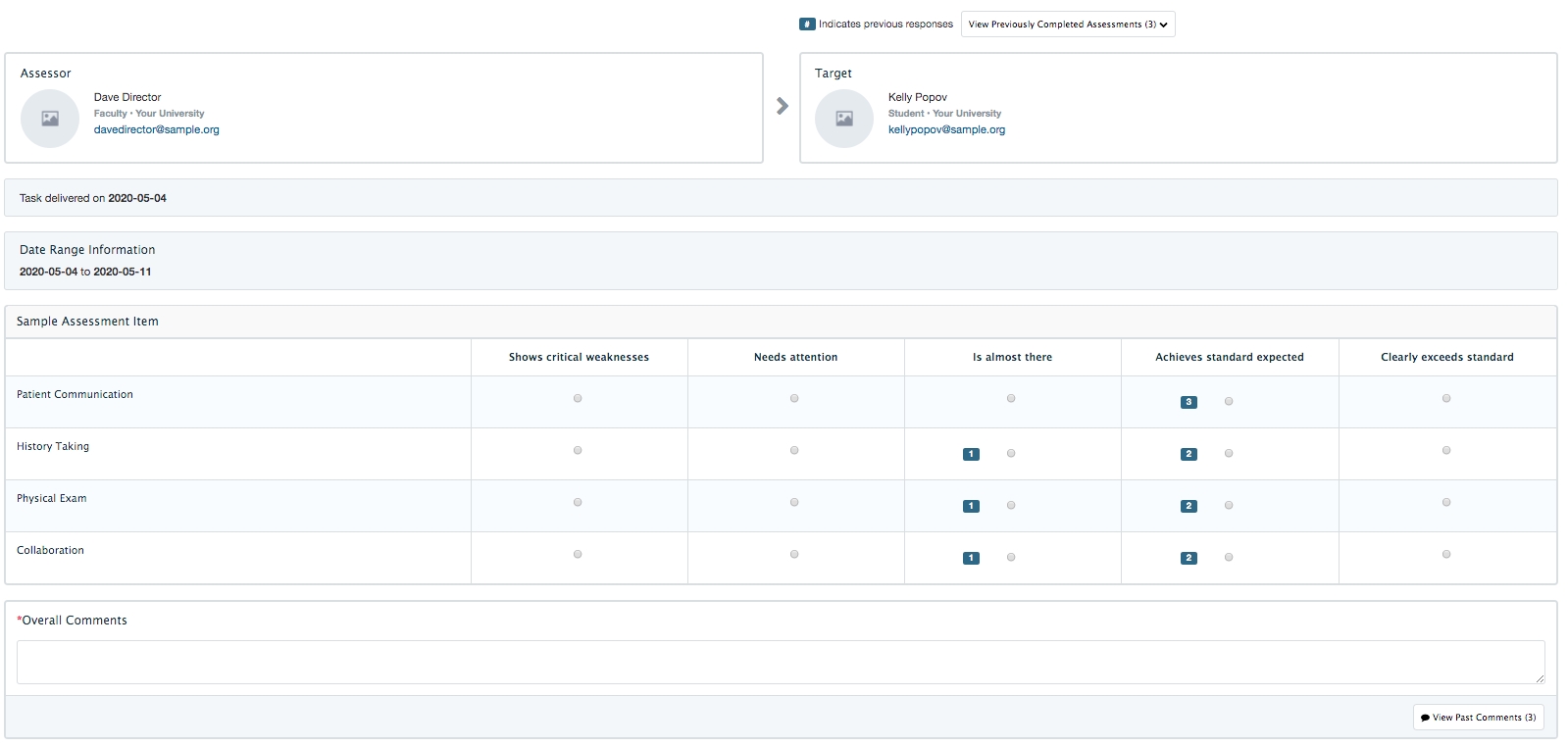

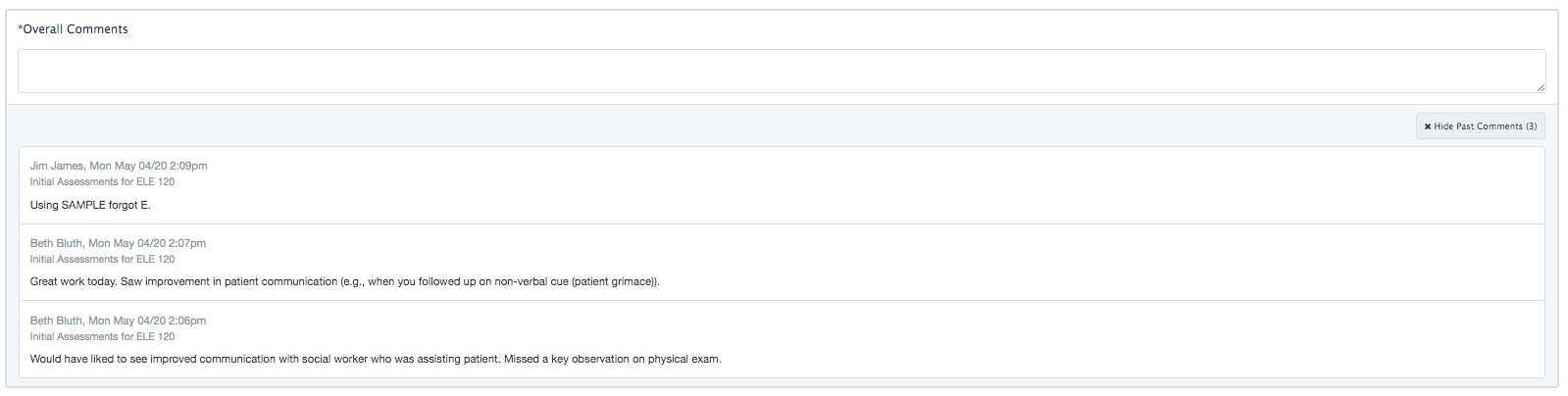

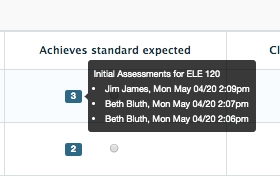

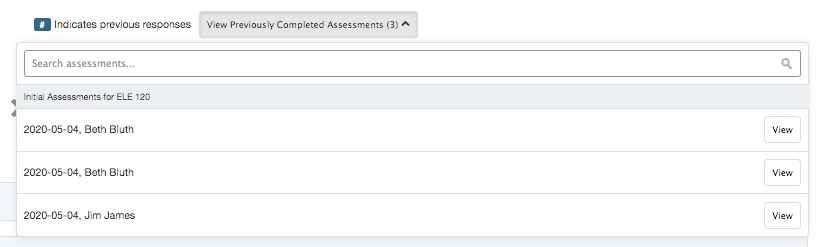

Give access to the results of previous assessments: This relates to Elentra's ability to provide a summary assessment task to users. If enabled, tasks generated by this distribution will link to tasks completed in the listed distributions. When users complete the summary assessment task they will be able to view tasks completed in the other distributions. For any items that are used on both forms, results from previously completed tasks will be aggregated for the assessor to view.

Click to add the relevant distribution(s).

Click 'Next Step'

You can immediately save your distribution at this point and it will generate the required tasks, but there is additional setup you can configure if desired.

Authorship: This allows you to add individual authors, or set a course or organization as the author. This may be useful if you have multiple users who manage distributions or frequent staffing changes. Adding someone as an author will allow them to more quickly access the distribution from their distribution list.

Distributions are automatically accessible to all users with staff:admin group and role permissions.

Adding a course permission will make the distribution show, without filters applied, to program coordinators and faculty directors associated with the course.

Adding an org. permission will make the distribution accessible to anyone with administrative access to Assessment & Evaluation. (Note that most users will need to apply filters to access the distribution.)

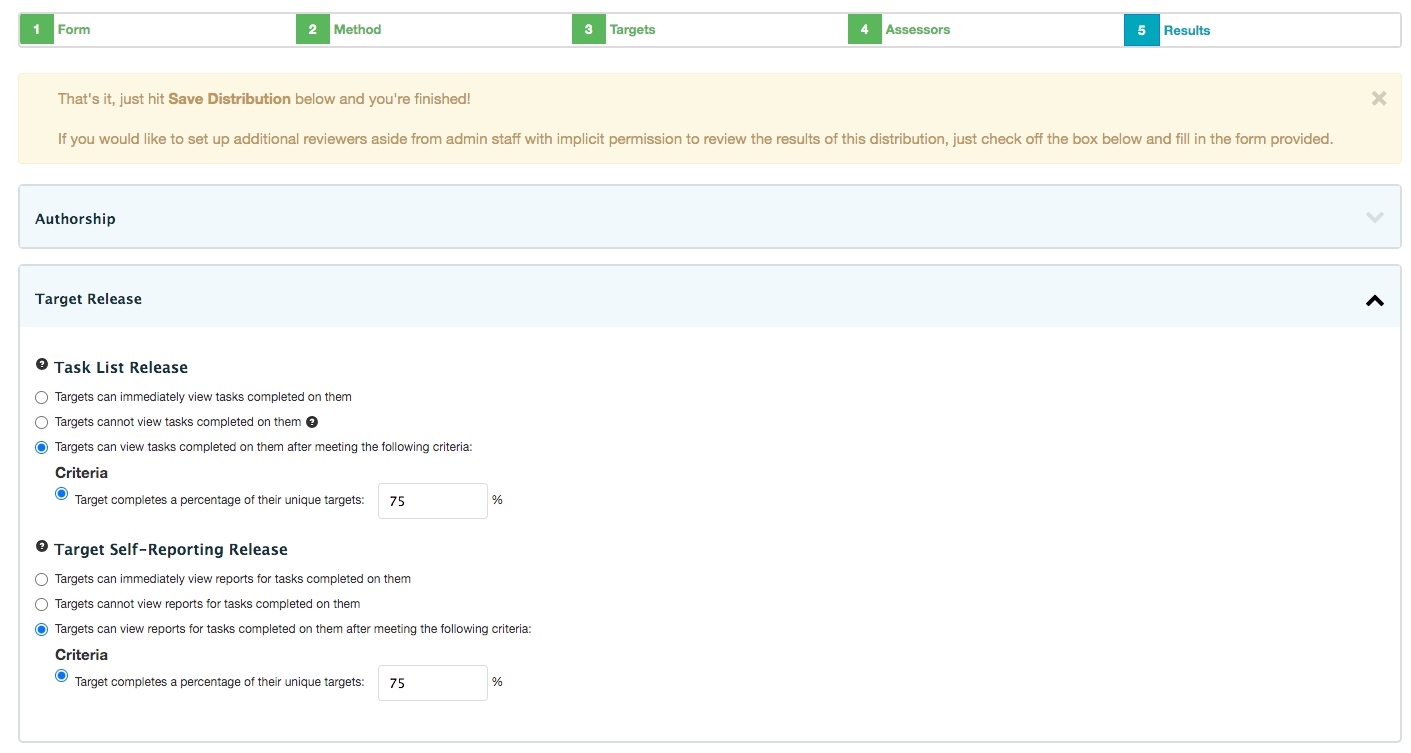

Target Release: These options allow you to specify whether the targets of the distribution can see the results of completed forms.

Task List Release:

"Targets can view tasks completed on them after meeting the following criteria" can be useful to promote completion of tasks and is often used in the context of peer assessments. Targets will only see tasks completed on them after they have completed the minimum percentage of their tasks set by you.

Target Self-Reporting Release: This controls whether targets can run reports for this distribution (i.e. to generate an aggregated report of all responses). When users access their own A+E they will see a My Reports button. This will allow them to access any reports available to them.

Target Self-Reporting Options: This allows you to specify whether or not comments included in reports are anonymous or identifiable. (This will only be applied if you have set reports to be accessible to the targets.)
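The Task List Release criterion ("Targets can view tasks completed on them after meeting the following criteria") is a threshold on the target's own completion rate. A minimal sketch follows. The function name and parameters are hypothetical, and treating a target with no assigned tasks as having met the threshold is an assumption rather than documented behaviour.

```python
def can_view_tasks_completed_on_them(completed: int, assigned: int, minimum_percent: int) -> bool:
    """True once the target has completed at least the minimum percentage of their own tasks."""
    if assigned == 0:
        # Assumption: with nothing assigned there is nothing to hold back.
        return True
    # Compare using integers to avoid floating point rounding at the boundary.
    return completed * 100 >= minimum_percent * assigned
```

For instance, with a 75% threshold, a learner who has completed 3 of their 4 peer assessments can see the assessments completed on them, while a learner who has completed only 2 of 4 cannot yet.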

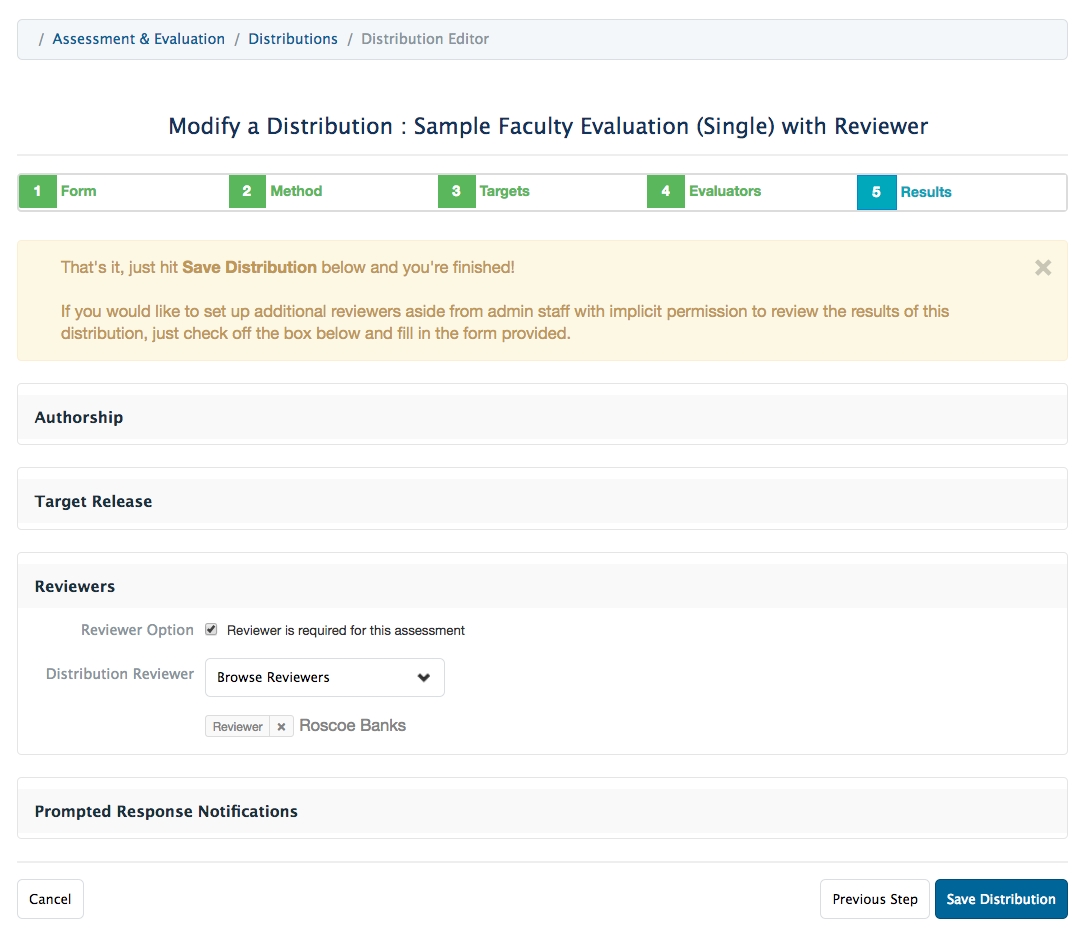

Reviewers: This allows you to set up a reviewer to view completed tasks before they are released to the target (e.g. a staff person might review peer feedback before it is shared with the learner).

Check off the box to enable a reviewer.

Click Browse Reviewers and select a name from the list. Note that this list will be generated based on the course contacts (e.g. director, curriculum coordinator) stored on the course setup page.

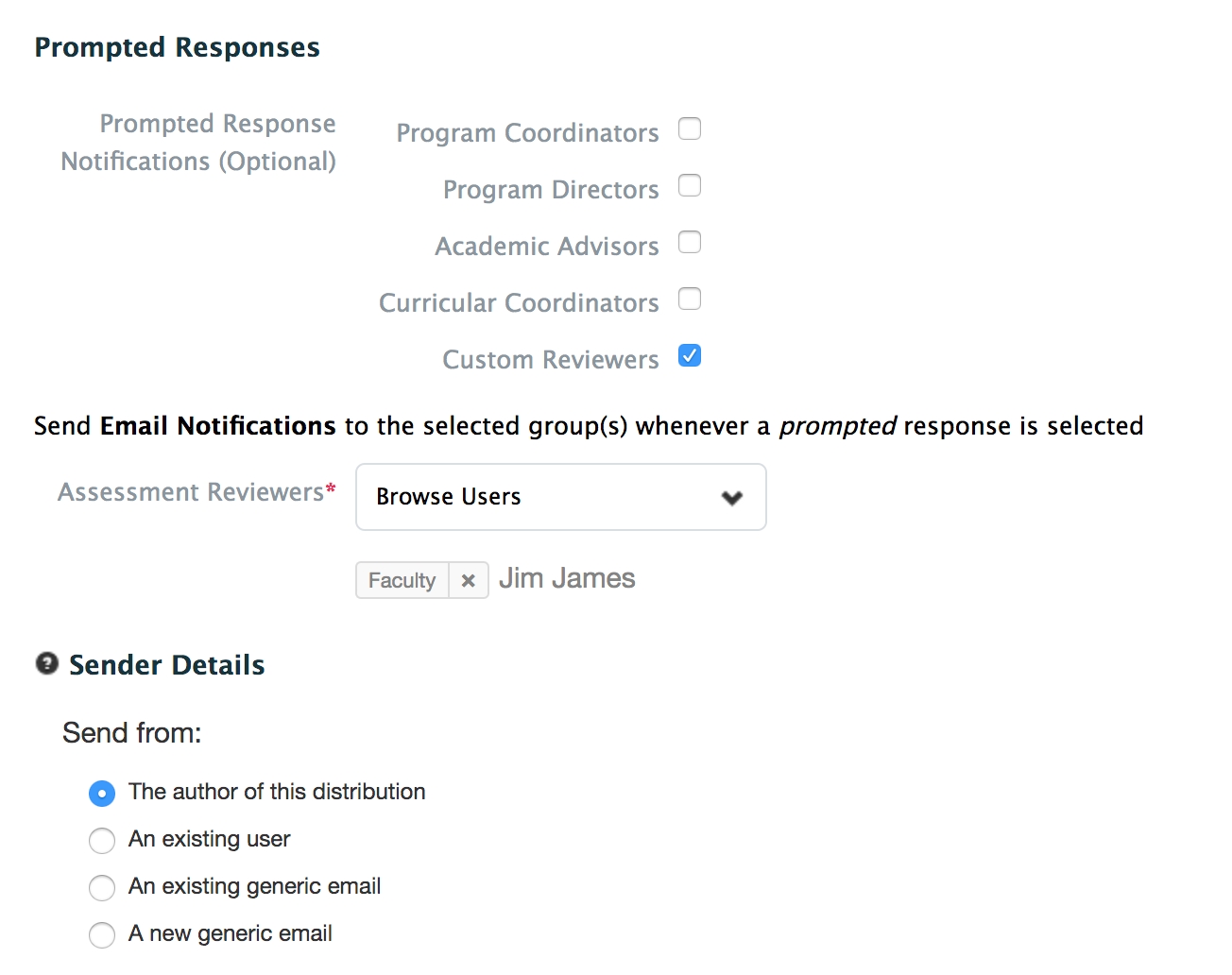

Notifications and Reminders:

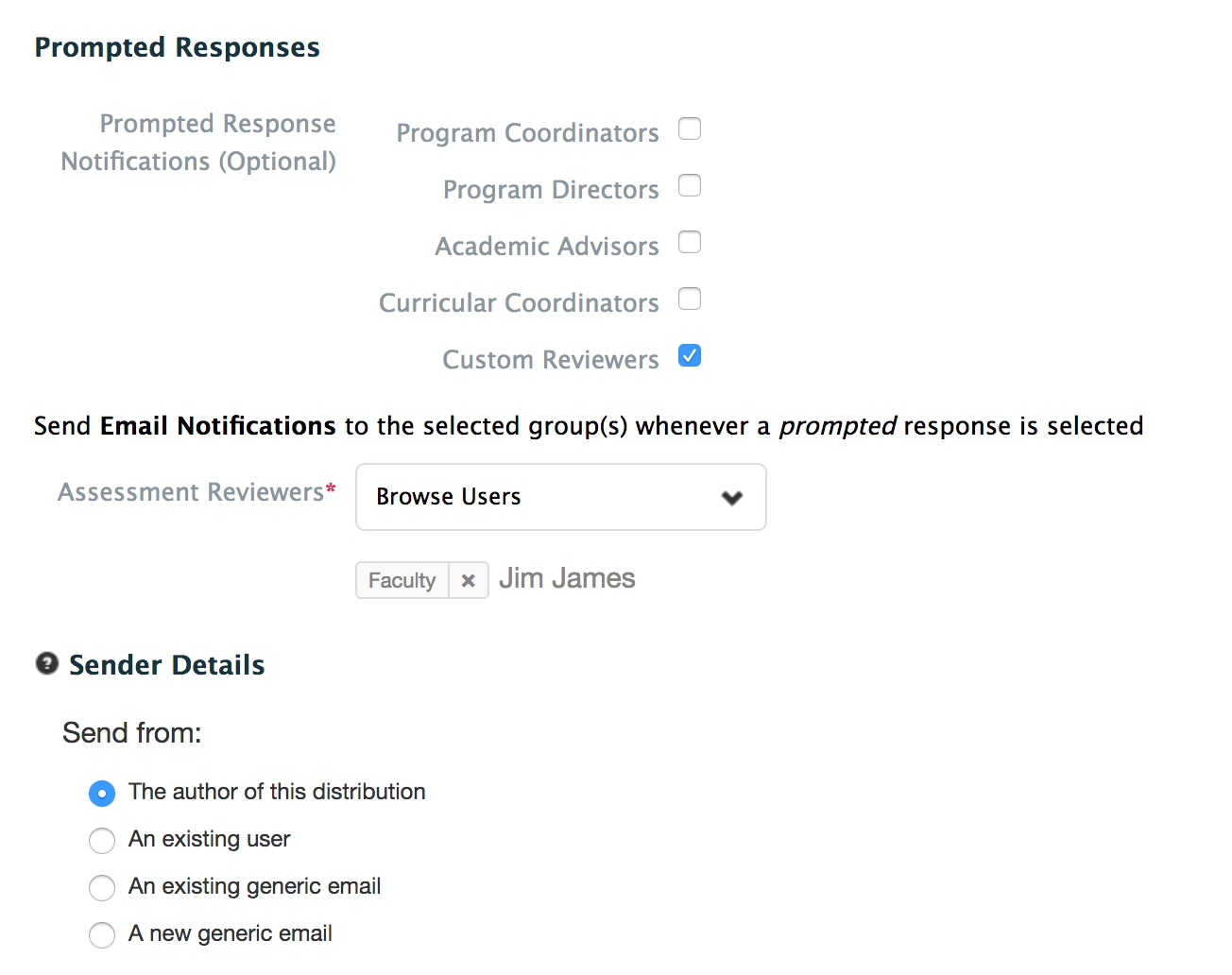

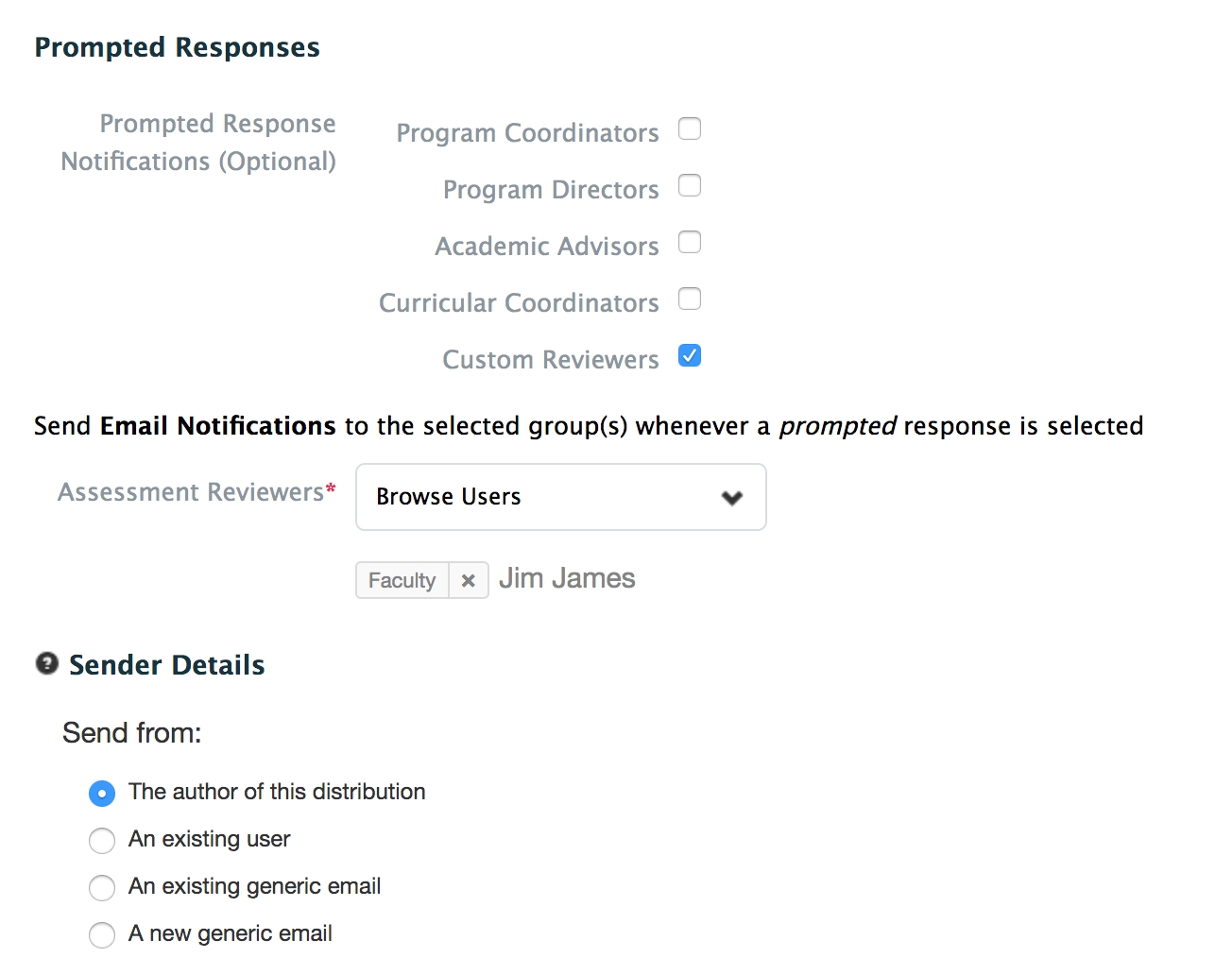

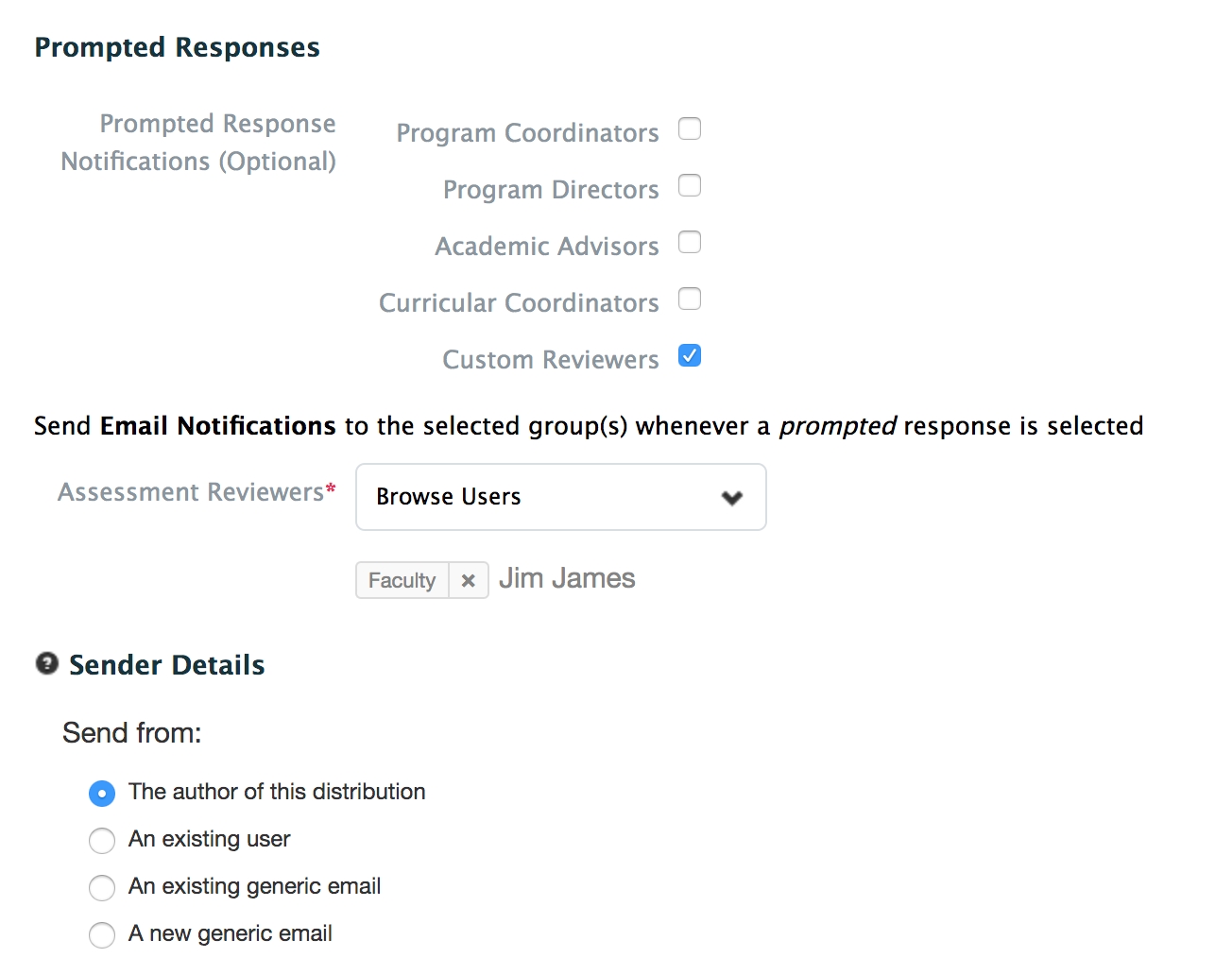

Prompted Responses: This allows you to define whom to send an email to whenever a prompted response is selected on a form used in the distribution. For example, if you have an item asking about student completion of a procedure and "I had to do it" was set as a prompted/flagged response, any time "I had to do it" is picked as an answer an email notification will be sent.

You can optionally select to email Program Coordinators, Program/Course Directors, Academic Advisors, Curricular Coordinators, or you can add a Custom Reviewer. If you select to add a Custom Reviewer you can select their name from a searchable list of users.

Sender Details: Define the email address that notifications and reminders will be sent from for a distribution.

Options are the distribution author, an existing user, an existing generic email, or a new generic email.

To create a new generic email provide a name and email address. This will be stored in the system and available to other users to use as needed.

Click 'Save Distribution'.

Rotation Based Distributions allow you to set up a distribution based on a rotation schedule. This means you can easily send a form to all enrolled learners to be delivered when they are actively in the rotation. Note that you must have rotations built using the Clinical Experience Rotation Scheduler to use this distribution method.

Navigate to Admin>Assessment and Evaluation.

Click 'Distributions' above the Assessment and Evaluation heading.

Click 'Add New Distribution'.

Distribution Title: Provide a title. This will display on the list of Distributions that curriculum coordinators and program coordinators can view.

Distribution Description: Description is optional.

Task Type: Hover over the question mark for more detail about how Elentra qualifies assessments versus evaluations. If a distribution is to assess learners, it's an assessment. If it is to evaluate courses, faculty, learning events, etc. it is an evaluation. Notice that the language on Step 4 will change if you switch your task type.

Disable Target Release: If you do not want assessors/evaluators to be able to release the form to the target upon completion, check this box. If left unchecked, assessors/evaluators will be able to release the form to the target upon completion.

Assessment/Evaluation Mandatory: This will be checked off by default.

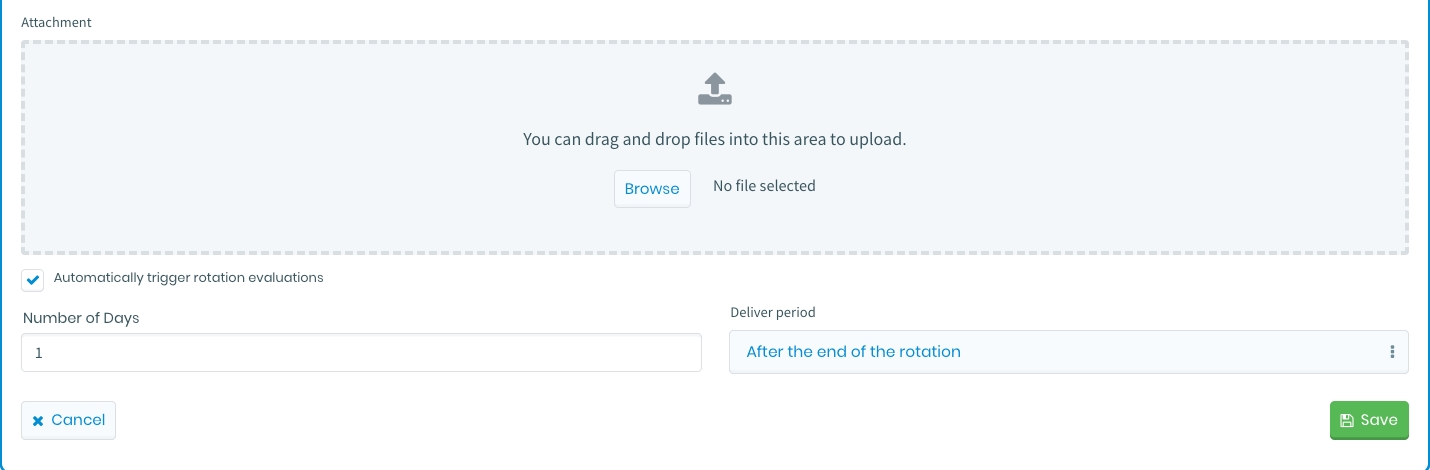

Disable Initial Task Email: Introduced in ME 1.26, this option allows administrative users to opt out of having Elentra send initial task email notifications to assessors/evaluators. (Applies to non-delegation based distributions.)

Disable Reminders: Check this box to exclude this distribution from reminder notification emails.

Select Form: The form you want to distribute must already exist; pick the appropriate form.

Select a Curriculum Period: The curriculum period you select will impact the list of available learners and associated faculty.

Select a Course: The course you select will impact the list of available learners and associated faculty.

Click 'Next Step'

Distribution Method: Select 'Rotation Distribution' from the dropdown menu.

Rotation Schedule: Select the appropriate rotation schedule from the dropdown menu.

Specific Sites: Select a site if you wish to include only targets from a specific site on this distribution.

Release Date: This tells the system how far back on the calendar to go when creating tasks. Hover over the question mark for more detail.

Delivery Type:

Basic delivery (single): allows you to send a single evaluation task repeatedly, once per block, or once per rotation.

Dynamic delivery rules (multiple): allows you to deliver multiple evaluation tasks at specific intervals during a rotation. For example, if you have a 5-month rotation, and you’d like to deliver an interim evaluation at the 2-month mark and the 4-month mark, you can set a rule for the distribution to do so.

If you select Basic delivery (single), then select your Delivery Period:

Choose between delivering tasks repeatedly, once per block, or once per rotation.

Repeatedly means you set how often during the rotation the task sends (e.g. every 5 days)

Once per block means that for each booking the learner has in a rotation they will be sent a task

Once per rotation means that consecutive bookings (i.e., two or more back-to-back blocks) will be treated as one unit of time

For each delivery period, additional customization allows you to control the timing of the distribution (e.g. 1 day after the start of the block, or 3 days before the end of the rotation).

Options are before/after the start, before/after the middle, and before/after the end.

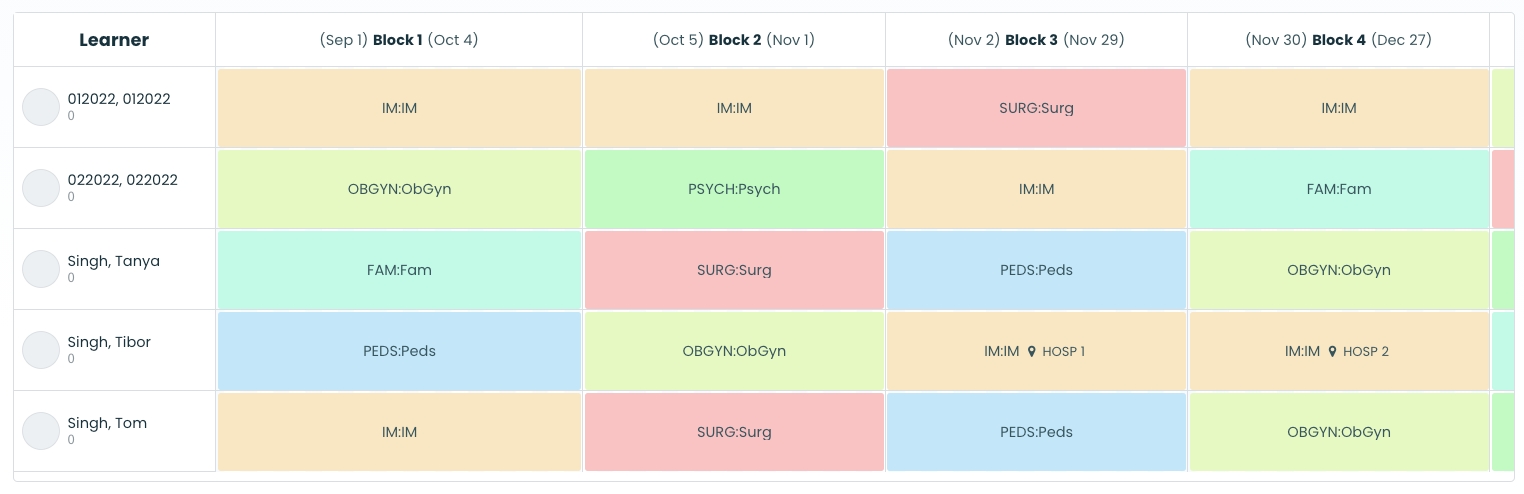

In this sample schedule, a 'Once per block' delivery for the IM rotation would send learner 012022 three tasks - one each for Blocks 1, 2, and 3. A 'Once per rotation' delivery for the IM rotation would send learner 012022 two tasks - one for Blocks 1+2, and one for Block 4.

Note that if learners have consecutive bookings at different sites they will not be treated as one rotation. For example a 'Once per rotation' delivery for the IM rotation for Tibor would result in two assessment tasks because he is at Hospital 1 and then Hospital 2.
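The difference between 'Once per block' and 'Once per rotation' can be sketched in a few lines of Python. This is only an illustration of the grouping rule described above, not Elentra's actual implementation; the bookings, block numbers, and function names are hypothetical.

```python
# Hypothetical bookings for one learner in one rotation: (block number, site).
bookings = [(1, "Hospital 1"), (2, "Hospital 1"), (4, "Hospital 1"), (5, "Hospital 2")]


def once_per_block(bookings):
    """One task per booking."""
    return [[block] for block, _site in sorted(bookings)]


def once_per_rotation(bookings):
    """Merge back-to-back blocks at the same site into a single unit of time."""
    units = []
    prev_block, prev_site = None, None
    for block, site in sorted(bookings):
        if units and block == prev_block + 1 and site == prev_site:
            units[-1].append(block)   # consecutive block, same site: extend the unit
        else:
            units.append([block])     # gap or site change: start a new unit
        prev_block, prev_site = block, site
    return units


print(once_per_block(bookings))     # four tasks, one per booking
print(once_per_rotation(bookings))  # three tasks: blocks 1+2, block 4, block 5
```

In this made-up schedule, Blocks 4 and 5 are consecutive but at different sites, so they are not merged.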

If you select Dynamic delivery rules (multiple), then set your delivery rules.

Identify the specific delivery rules depending on the length of a rotation (you identify the length of the rotation in months, weeks, or days).

If you enter an integer larger than 1 for the number of tasks, you will be able to define when each task will be delivered (percent of the way through the rotation) and a visualization will be supplied by the Task bar below.

Clicking on Add new delivery will allow you to add additional delivery schedules that depend on the length of the rotation, which allows you to set different parameters for shorter rotations and longer rotations.

As noted in the interface, “larger” rules take precedence over smaller ones. So if you had a 0-1 month rule and a 0-2 month rule, the 0-2 month would supersede the other.

Remember: if a learner's schedule has two or more of the same rotation scheduled in a row, the system will treat them as a single rotation.

You can have as many rules as you want, but they must be contiguous (e.g., 0-2 months, 2-4 months, 4-6 months) to avoid leaving learners without an assessment.
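Before saving a set of rules, it can help to check them on paper. The sketch below, assuming each rule is written as a hypothetical `(min_months, max_months, number_of_tasks)` tuple, checks for gaps between rules and picks the "larger" rule when more than one matches. How Elentra treats a rotation whose length falls exactly on a boundary between two rules is not stated here, so the boundary handling in `pick_rule` is an assumption.

```python
def has_gap(rules):
    """Return True if some rotation length between the first and last rule is uncovered."""
    ordered = sorted(rules)
    covered_to = ordered[0][1]
    for start, end, _tasks in ordered[1:]:
        if start > covered_to:
            return True
        covered_to = max(covered_to, end)
    return False


def pick_rule(rules, rotation_months):
    """Among matching rules, prefer the one covering the widest range (assumed precedence)."""
    matching = [r for r in rules if r[0] <= rotation_months <= r[1]]
    if not matching:
        return None
    return max(matching, key=lambda r: r[1] - r[0])


contiguous = [(0, 2, 1), (2, 4, 2), (4, 6, 3)]
print(has_gap(contiguous))                        # False
print(has_gap([(0, 2, 1), (3, 4, 2)]))            # True: nothing covers 2-3 months
print(pick_rule([(0, 1, 1), (0, 2, 2)], 1))       # the 0-2 month rule wins
```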

Examples of Dynamic Delivery Scenarios:

If you would like two interim assessment tasks delivered for any rotation 5 to 8 months in length, you could specify that for a 5 to 8 month rotation, task 1 is delivered 40% of the way through the rotation and task 2 is delivered 80% of the way through. For a learner on a 5-month rotation, the interim tasks would then be delivered 2 months and 4 months into the rotation.

If you would like three tasks delivered for any rotation 3 – 6 months in length, you could set task 1 at 25%, task 2 at 50%, and task 3 at 75% of the way through the rotation. The tasks would then be delivered ¼, ½, and ¾ of the way through each 3-month, 4-month, 5-month, or 6-month rotation that a learner might be scheduled on.
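To see roughly where percentage-based deliveries land on a calendar, the arithmetic can be sketched as follows. The function name and dates are hypothetical, and the exact way Elentra counts days (for example, whether the last day of the rotation is included) is an assumption here, so treat the results as approximate.

```python
from datetime import date, timedelta


def delivery_dates(start, end, percents):
    """Return the date that falls each given percent of the way from start to end."""
    length_days = (end - start).days
    return [start + timedelta(days=round(length_days * p / 100)) for p in percents]


# A hypothetical rotation of 150 days (roughly 5 months) with tasks at 40% and 80%.
for d in delivery_dates(date(2024, 1, 1), date(2024, 5, 30), [40, 80]):
    print(d.isoformat())
# 2024-03-01
# 2024-04-30
```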

Task Expiry: If you check this box, the tasks generated by the distribution will automatically expire (i.e., disappear from the assessor's task list and no longer be available to complete). You can customize when the task will expire in terms of days and hours after the delivery.

Warning Notification: If you choose to use the Task Expiry option, you'll also be able to turn on a warning notification if desired. This can be set up to send an email a specific number of days and hours before the task expires.
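The relationship between delivery, expiry, and the warning email is simple date arithmetic. The sketch below is illustrative only; the function and parameter names are hypothetical and do not correspond to Elentra settings. It also does not account for the optional end-of-day expiry database setting introduced in ME 1.26.

```python
from datetime import datetime, timedelta


def expiry_and_warning(delivered_at, expire_days, expire_hours, warn_days, warn_hours):
    """Compute when a task expires and when the warning email would be sent."""
    expires_at = delivered_at + timedelta(days=expire_days, hours=expire_hours)
    warn_at = expires_at - timedelta(days=warn_days, hours=warn_hours)
    return expires_at, warn_at


# Task delivered 1 March at 09:00, expiring 7 days later, warning 1 day 12 hours before expiry.
expires_at, warn_at = expiry_and_warning(datetime(2024, 3, 1, 9, 0), 7, 0, 1, 12)
print(expires_at)  # 2024-03-08 09:00:00
print(warn_at)     # 2024-03-06 21:00:00
```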

The target is who or what the form is about (e.g., learners, faculty members, a rotation). Note that you'll only see the option to set the rotation or faculty member as a target if you are creating an evaluation.

Assessments will be delivered for: Use this area to specify the target of the form.

Targets are the assessors (self assessment): use this for self-assessments. Learners will be delivered a task where they are both the target and assessor.

Targets are learners

Learner Options:

All learners in this rotation

Learners from My Program/Outside of my Program: This refers to organizations that use on and off-service rotation slots (usually PGME programs).

Checking My Program will target learners who are scheduled in the rotation AND enrolled in the course.

Checking Outside of my program will target learners who are scheduled in the rotation but enrolled in a different course than that in which the rotation exists.

Learner Levels: This refers to learner levels usually used in CBME enabled organizations. Checking a learner level will restrict the distribution to target only learners at that learner level. Leaving all boxes unchecked will target all learners, regardless of their learner level.

Additional Learners

Click Browse Additional Learners. Search for a learner and check the box beside the learner name. To delete a learner, click the x beside their name.

Specific learners in this rotation: Use the drop down selector to add the required learners. (Hover over a learner name to see their profile information.)

CBME Options: This will display whether you select All Learners or Specific Learners. This option applies only to schools using Elentra for CBME. Ignore it and leave it set to non CBME learners if you are not using CBME. If you are a CBME school, this allows you to apply the distribution to all learners, non CBME learners, or only CBME learners as required.

Targets are peers

This option allows for targets who have completed the rotation to assess all of their peers in the rotation block.

CBME Options: This option applies only to schools using Elentra for CBME. Ignore it and leave it set to non CBME learners if you are not using CBME. If you are a CBME school, this allows you to apply the distribution to all learners, non CBME learners, or only CBME learners as required.

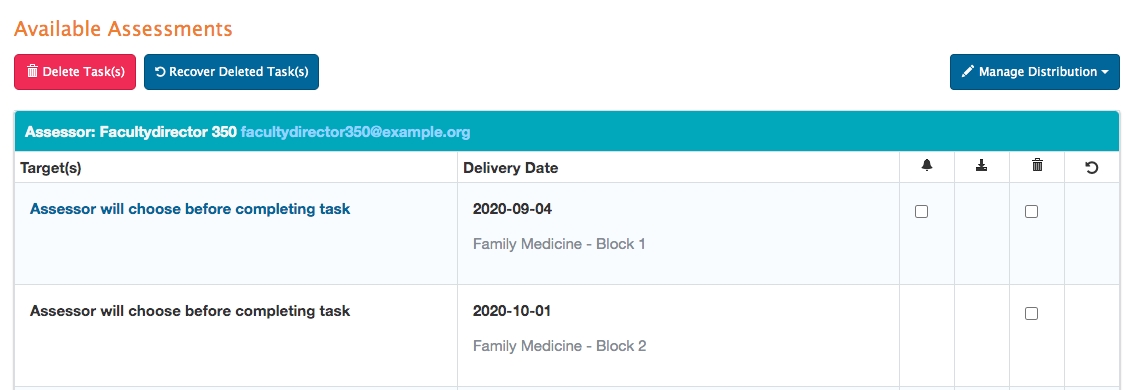

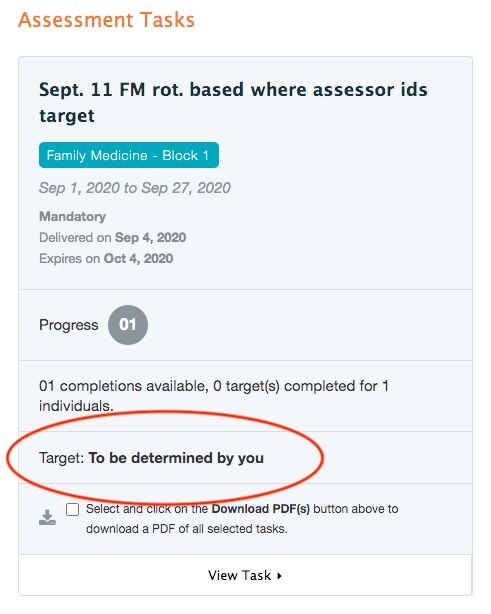

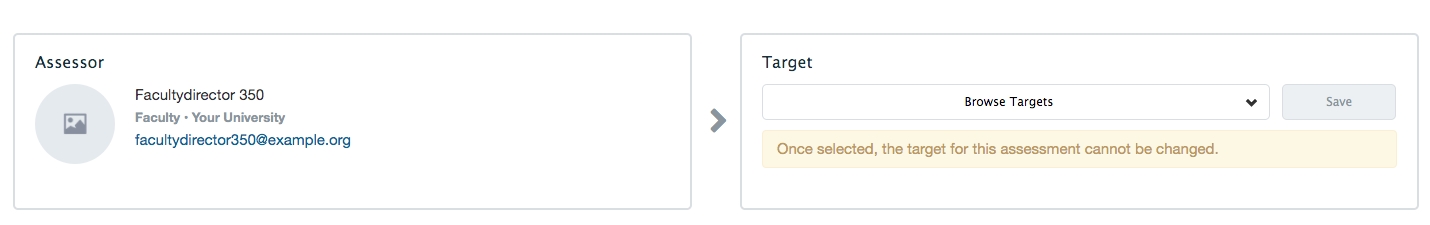

Targets for this Distribution will be determined by the Assessor

With this option, the assessor/evaluator selects the target based on their role and distribution assessor options when they are completing the task.

In the Distribution Progress Report, administrators will see the target listed as "Assessor will choose before completing task."

Target Attempt Options: Specify how many times an assessor can assess each target, OR whether the assessor can select which targets to assess and complete a specific number (e.g. assessor will be sent a list of 20 targets, they have to complete at least 10 and no more than 15 assessments but can select which targets they assess).

If you select the latter, you can define whether the assessor can assess the same target multiple times. Check off the box if they can.
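The minimum/maximum option can be thought of as a simple check on the assessor's chosen targets. The following sketch uses hypothetical names and is not how Elentra enforces the setting; it only illustrates the rule described above.

```python
def selection_is_valid(chosen, available, minimum, maximum, allow_repeats):
    """Check a list of chosen targets against the Target Attempt Options."""
    if any(target not in available for target in chosen):
        return False  # chosen someone who is not on the distribution's target list
    if not allow_repeats and len(set(chosen)) != len(chosen):
        return False  # same target assessed more than once when repeats are not allowed
    return minimum <= len(chosen) <= maximum


available = {f"learner{i}" for i in range(1, 21)}  # 20 hypothetical targets
chosen = [f"learner{i}" for i in range(1, 11)]     # assessor picks 10 of them

print(selection_is_valid(chosen, available, 10, 15, allow_repeats=False))       # True
print(selection_is_valid(chosen[:9], available, 10, 15, allow_repeats=False))   # False: too few
```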

Click 'Next Step'

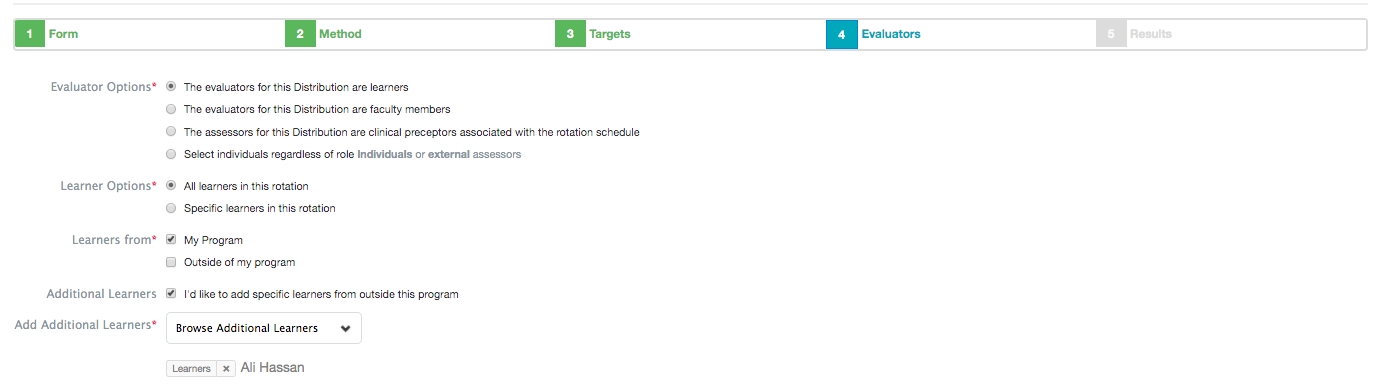

The assessors are the people who will complete the form.

Assessor Options:

Assessors are learners

Learner Options: All Learners or Specific Learners

All Learners: Select all learners in the rotation or specific learners in the rotation

All learners: Select from My Program and/or Outside of my program, use the drop down selector to add additional learners

Specific learners in the rotation: Use the drop down selector to add required learners

Additional Learners: Check this option to add additional learners from outside the program.

Assessors are faculty members

Browse faculty and click on the required names to add them as assessors

Select Associated Faculty: This tool will pull the names of faculty listed on the course setup page as associated faculty

Assessors are preceptors associated with a rotation schedule

This allows you to associate this distribution with all preceptors affiliated with the rotation based on the slot bookings made for learners. The distribution will dynamically update based on changes made to the rotation schedule.

Select individuals regardless of role (Individuals or external assessors)

This allows you to add external assessors to a distribution

Begin to type an email address. If the user already exists, you'll see them displayed in the dropdown menu. To create a new external assessor, scroll to the bottom of the list and click 'Add External Assessor'.

Provide first and last name, and email address for the external assessor and click 'Add Assessor'

Exclude Self Assessments: Check this to stop learners from completing a self-assessment.

Feedback Options: This will only display when the assessors are faculty. Checking the box will add a default item to the distribution asking if the faculty member met with the trainee to discuss their assessment.

Give access to the results of previous assessments: This relates to Elentra's ability to provide a summary assessment task to users. If enabled, tasks generated by this distribution will link to tasks completed in the listed distributions. When users complete the summary assessment task they will be able to view tasks completed in the other distributions. For any items that are used on both forms, results from previously completed tasks will be aggregated for the assessor to view.

Click to add the relevant distribution(s).

Currently for rotation-based distributions there is no option to configure minimum tasks completed on linked distributions nor a fallback date for the summary assessment task.

Click 'Next Step'

You can immediately save your distribution at this point and it will generate the required tasks, but there is additional setup you can configure if desired.

Authorship: This allows you to add individual authors, or set the distribution to be accessible to everyone with A+E access in a course or organization. (This may be useful if you have multiple users who manage distributions or frequent staffing changes.)

Target Release: These options allow you to specify whether the targets of the distribution can see the results of completed forms.

Task List Release:

"Targets can view tasks completed on them after meeting the following criteria" can be useful to promote completion of tasks and is often used in the context of peer assessments. Targets will only see tasks completed on them after they have completed the minimum percentage of their tasks set by you.
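The release criterion amounts to comparing a completion percentage against the minimum you set. A minimal sketch, assuming hypothetical names and assuming that a target with no assigned tasks is not granted access (the source does not say how that case is handled):

```python
def target_can_view(completed, assigned, minimum_percent):
    """Return True once the target has completed at least the minimum share of their own tasks."""
    if assigned == 0:
        return False  # assumption: no assigned tasks means nothing has been earned yet
    return completed / assigned * 100 >= minimum_percent


print(target_can_view(3, 4, 75))  # True: 75% completed meets a 75% minimum
print(target_can_view(2, 4, 75))  # False: only 50% completed
```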

Target Self-Reporting Release: This controls whether targets can run reports for this distribution (i.e. to generate an aggregated report of all responses). When users access their own A+E they will see a My Reports button. This will allow them to access any reports available to them.

Target Self-Reporting Options: This allows you to specify whether or not comments included in reports are anonymous or identifiable. (This will only be applied if you have set reports to be accessible to the targets.)

Reviewers: This allows you to set up a reviewer to view completed tasks before they are released to the target (e.g. a staff person might review peer feedback before it is shared with the learner).

Check off the box to enable a reviewer.

Click Browse Reviewers and select a name from the list. Note that this list will be generated based on the course contacts (e.g. director, curriculum coordinator) stored on the course setup page.

Notifications and Reminders:

Prompted Responses: This allows you to define whom to send an email to whenever a prompted response is selected on a form used in the distribution. For example, if you have an item asking about student completion of a procedure and "I had to do it" was set as a prompted/flagged response, any time "I had to do it" is picked as an answer an email notification will be sent.

You can optionally select to email Program Coordinators, Program/Course Directors, Academic Advisors, Curricular Coordinators, or you can add a Custom Reviewer. If you select to add a Custom Reviewer you can select their name from a searchable list of users.

Sender Details: Define the email address that notifications and reminders will be sent from for a distribution.

Options are the distribution author, an existing user, an existing generic email, or a new generic email.

To create a new generic email provide a name and email address. This will be stored in the system and available to other users to use as needed.

Click 'Save Distribution'.

Currently this feature only supports completing one task per distribution. Even if you set the Target Attempt Options in Step 3: Targets to more than one (which the user interface does allow), the system will not be able to support assessors/evaluators choosing more than one target per distribution.

After the distribution is created, the distribution progress page will show targets as "Assessor will choose before completing task."