Organizations will need to map their CBE curriculum before beginning to use additional CBE tools. Typically central administrative staff will create the required curriculum framework(s). Following that, curriculum can be uploaded at the organization or course level, depending on the needs of an organization.

Due to some legacy code, organizations will have to run some auto-setup tools and populate certain tag sets before they can build their own frameworks.

In the context of Royal College programs, after your CBME migrations are complete, any new program onboarding to CBE will have to map Entrustable Professional Activities, Key and Enabling Competencies, Milestones, Contextual Variable Responses and Procedure Attributes (if using procedure form template).

The CBE tools allow an organization to build a curriculum framework to define the structure of their curriculum (e.g., exit competencies, phase objectives, course objectives), and leverage that framework to build objectives trees that house the specific curriculum.

A Curriculum Framework defines a list of tag sets and sets relationships between those tag sets. Elentra will look for that information when an objective tree is built. Objective trees consist of the curriculum tags that users will work with in Elentra.

Objective Trees are built based on the structure defined in a Curriculum Framework. They define the hierarchical relationships between all of the curricular objectives (tags) included.

A Curriculum Framework may be used to generate multiple Objective Trees and Objective Trees are versioned each time there is a change to them.
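One way to picture how a framework relates to its trees is sketched below. This is an illustration only: the class names, fields, tag set codes, and the version counter are hypothetical and do not reflect Elentra's actual schema or code.

```python
from dataclasses import dataclass, field


@dataclass
class CurriculumFramework:
    """Hypothetical model: tag sets plus the allowed parent -> child relationships."""
    title: str
    tag_sets: list[str]
    # Maps a parent tag set code to the tag set codes allowed beneath it.
    relationships: dict[str, list[str]] = field(default_factory=dict)

    def allows(self, parent_set: str, child_set: str) -> bool:
        return child_set in self.relationships.get(parent_set, [])


@dataclass
class ObjectiveTree:
    """Hypothetical model: a tree built from a framework and versioned on change."""
    framework: CurriculumFramework
    version: int = 1
    # Each link is (parent_set, parent_code, child_set, child_code).
    links: list[tuple[str, str, str, str]] = field(default_factory=list)

    def add_link(self, parent_set: str, parent_code: str,
                 child_set: str, child_code: str) -> None:
        # The framework decides which tag sets may sit under which.
        if not self.framework.allows(parent_set, child_set):
            raise ValueError(f"{child_set} cannot sit under {parent_set}")
        self.links.append((parent_set, parent_code, child_set, child_code))
        self.version += 1  # every change produces a new version


framework = CurriculumFramework(
    title="Example framework",
    tag_sets=["STAGE", "EPA"],
    relationships={"STAGE": ["EPA"]},
)
tree = ObjectiveTree(framework)
tree.add_link("STAGE", "D", "EPA", "D1")
```

The same `framework` object could back several `ObjectiveTree` instances, which mirrors the point that one framework may generate multiple trees.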

There are three types of objective trees:

Organisation Trees - Use an Organization Tree when multiple courses share identical curriculum. You will be able to apply an organisation tree to courses when you set them up. You create an Organization Tree from Admin > Manage Curriculum > Curriculum Framework Builder. It can then be applied from Admin > Manage Courses > CBME > Configure CBME.

Building an organisation tree is most appropriate when a single curriculum is applied across multiple courses.

Course Trees - A Course Tree is required for every course, but there are several ways to create one. You can upload curriculum tags per course (appropriate when courses/programs have unique curriculum) or copy an existing Organisation Tree to multiple courses (appropriate when multiple courses share the same curriculum).

User Trees - Elentra creates unique user trees for learners enrolled in courses that have course trees. Updating a user tree is what allows learners to have different versions of organisation trees applied to them as the curriculum changes. In a typical setup, administrators don’t have to modify user trees that often and there is no initial work to build a user tree. A user tree is automatically created for a user when they are enrolled in a course.

After a curriculum framework, organisation trees and course trees are built, you can use the existing competency-based assessment tools like form templates, assessment plans, learner dashboards and program dashboards.

Before you begin to build a curriculum framework, you should create any dashboard rating scales that will be used with a tag set in your framework. Dashboard rating scales allow faculty and learners to track overall progress on specific curriculum tags shown on the dashboard. The dashboard rating scale can be different from the milestone or global assessment scales.

If you are a Canadian Postgraduate organization, many of the prerequisites for using CBE are already in place. If the CBE module is new to your organization, there will be some developer tasks required before you begin to explore CBE.

Enable CBE for the relevant organization (database setting: cbme_enabled)

Disable CBE for all courses that don’t need it (make entries per course for cbme_enabled on the course_settings table)

Add form types to the organization

Almost all organizations will require the rubric form be active to use CBE

You can optionally add other form types (e.g. Supervisor Form Template, Smart Tag Form)

Configure workflows for the organization (at a minimum you will likely need rubric forms available to the EPA or Other Assessment workflow)

Create Assessment & Evaluation rating scales as needed (this can be done by a medtech:admin user)

If a rating scale will be used for global entrustment or milestone ratings, additional developer work can allow users to have automated question prompts based on the scale selected

The following are required only if you plan to version your curriculum and automatically apply new versions to learners when they transition to a new stage within your curriculum framework:

Configure learner levels as required by your organization (e.g., PGY 1, PGY 2). Store these in the global_lu_learner_levels table.

Enable the enhanced enrolment tab to allow administrative staff to enter learner level and CBE status information (database setting: learner_levels_enabled)

NOTE: Some schools choose to just store this information in the database and not have administrative staff input it (they are presumably getting the data from another system).

Set learners up to land on their My Event Calendar tab on the dashboard instead of their CBE Progress Learner Dashboard. There is no database setting for this; a developer just has to make code changes to support it if that is an organization's preference.

Enable learner self-assessment options for curriculum tags displayed on the learner dashboard (database setting: cbe_dashboard_allow_learner_self_assessment and/or learner_access_to_status_history)

Enable visual summary dashboards (database setting: cbme_enable_visual_summary)

Provides access to additional reporting on EPA assessments

This step is optional based on your curriculum structure and needs. An Organization Tree makes the most sense to use when multiple courses share identical curriculum. You will be able to apply an organisation tree to multiple courses when you set them up and build their course trees.

In the Curriculum Builder table, click on the title of the framework for which you want to create or edit an organization tree.

Click the Organization Tree tab.

On the Create New Organization Tree page, input the Title name.

Click the Save and Proceed to Uploader button.

At this point you'll be prompted to upload the required files to populate your tree.

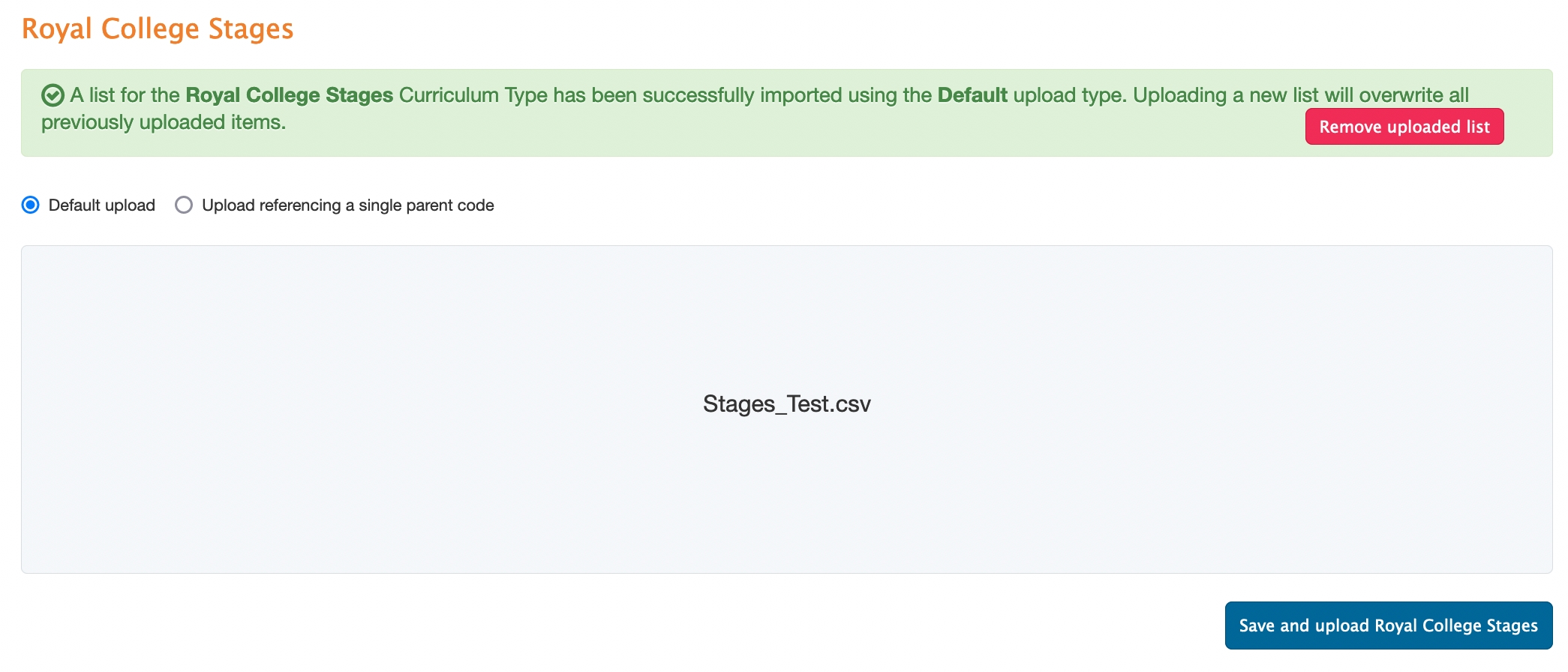

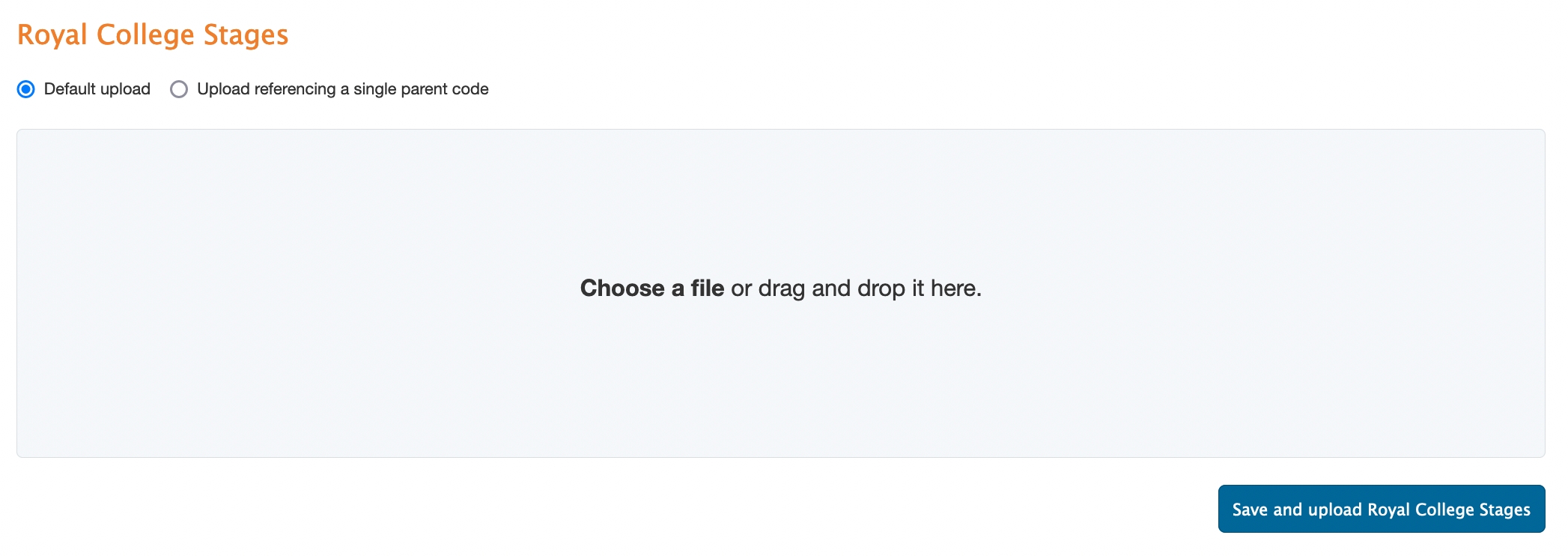

For each tag set select Default Upload or Upload referencing single parent code.

Click Choose a file or drag and drop to add a .csv file.

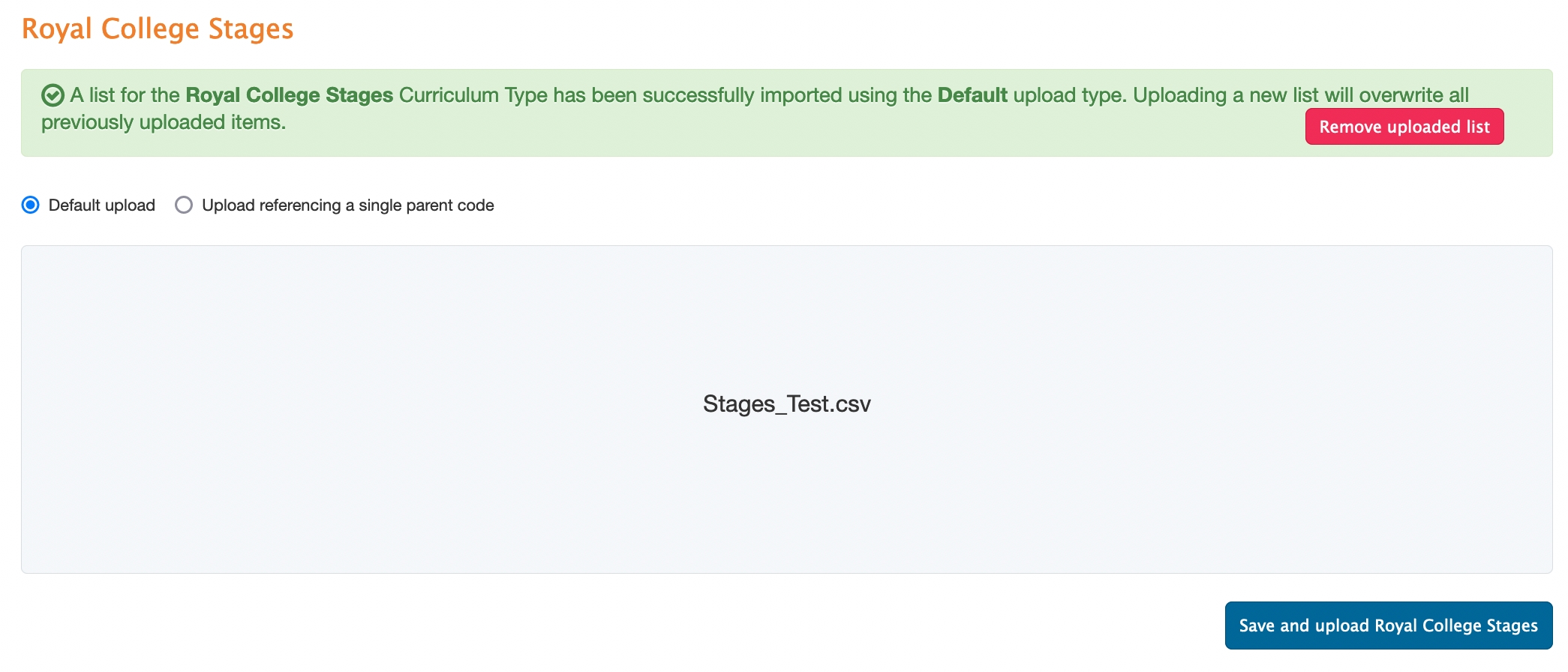

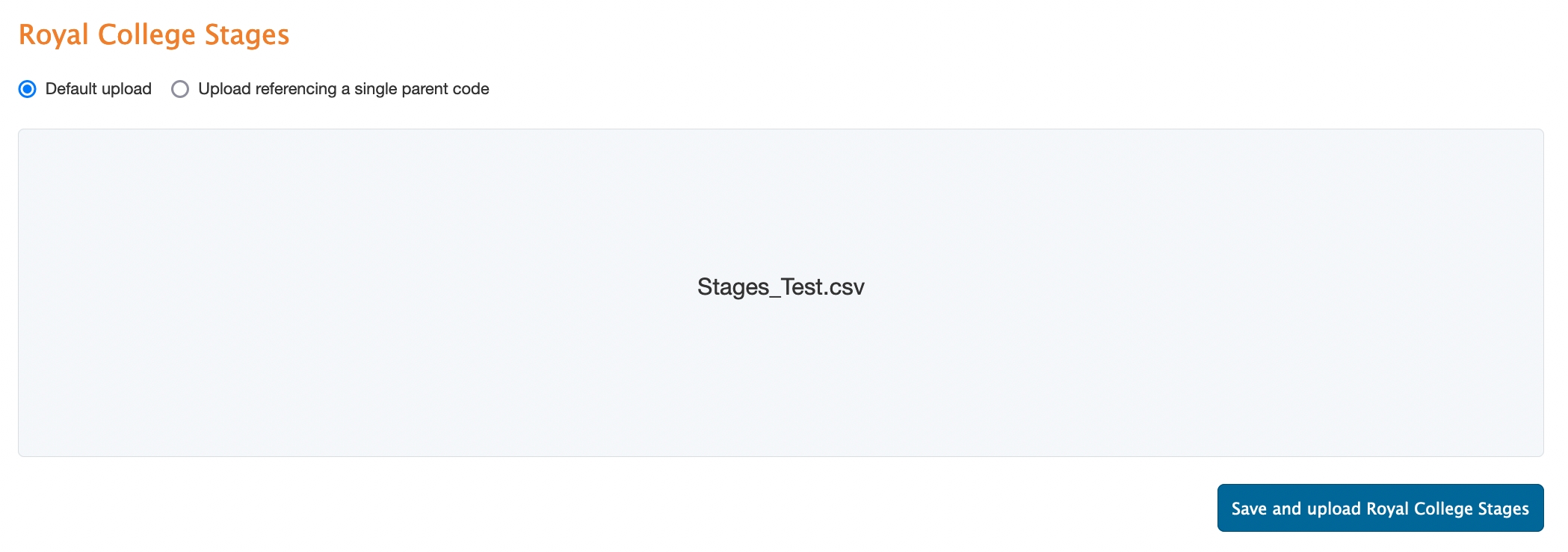

Once the .csv file is selected, the upload area will display the file name.

Click the Save and upload tag set name button. A confirmation highlighted in green will display when the file is uploaded successfully.

Scroll down to click the Next Step button.

Continue until all tag sets are populated.

On the Organization Tree tab, click the trash icon associated with the tree you want to delete.

On the Delete an Organization Tree page, click the Delete this tree button.

You will receive a notification that the tree was deleted, and the page will return to the Organization Trees table.

If you plan to use curriculum versioning and have residents automatically move to a new curriculum version when they move to their next stage, you must define the learners as CBME enabled. A developer can do this directly in the database, or you can use the database setting (learner_levels_enabled) to allow course administrators to set learner levels and CBE status on the course enrolment page.

To ensure CBME works correctly for RC programs, each organisation that has CBME enabled must have a developer or technical administrator set the default_stage_objective setting value in the elentra_me.settings table to the global_lu_objectives.objective_id of the first stage of competence (e.g., Transition to Discipline).

After the provided software migrations run with the upgrade to ME 1.24 or higher, some additional configuration is required for your users to move forward using Elentra as they currently do. Please check all tag set settings for the tags included in your Royal College Framework (Milestones). This is important to ensure that the learner dashboard, program dashboard and assessment plan builder behave as expected.

Before you build a Curriculum Framework, please make sure that the database setting cbme_enabled is enabled.

A Curriculum Framework defines tag sets and the relationships between those tag sets.

To use the CBE dashboards, you must create a curriculum framework and define the tag set options you'd like to use for each tag set included in the framework. The tag set options will determine things like whether a tag set appears on the assessment trigger interface or whether a tag set is shown on a learner dashboard.

Once a Curriculum Framework is created, you can optionally create an organizational tree or multiple course trees.

Creating an organisational tree is optional and is required only when you have multiple courses that will rely on the same curriculum. Otherwise, once you have built the required framework(s), you can work within a course page to build course trees.

If you plan to use the CBE learner dashboard, you should set up dashboard rating scales before building your curriculum framework and tag sets so that you can apply the dashboard scales as needed on your tag sets.

Log in as a MedTech or Admin user.

Open the Admin drop-down on the top menu and click Manage Curriculum.

Click Curriculum Framework Builder in the left-side menu.

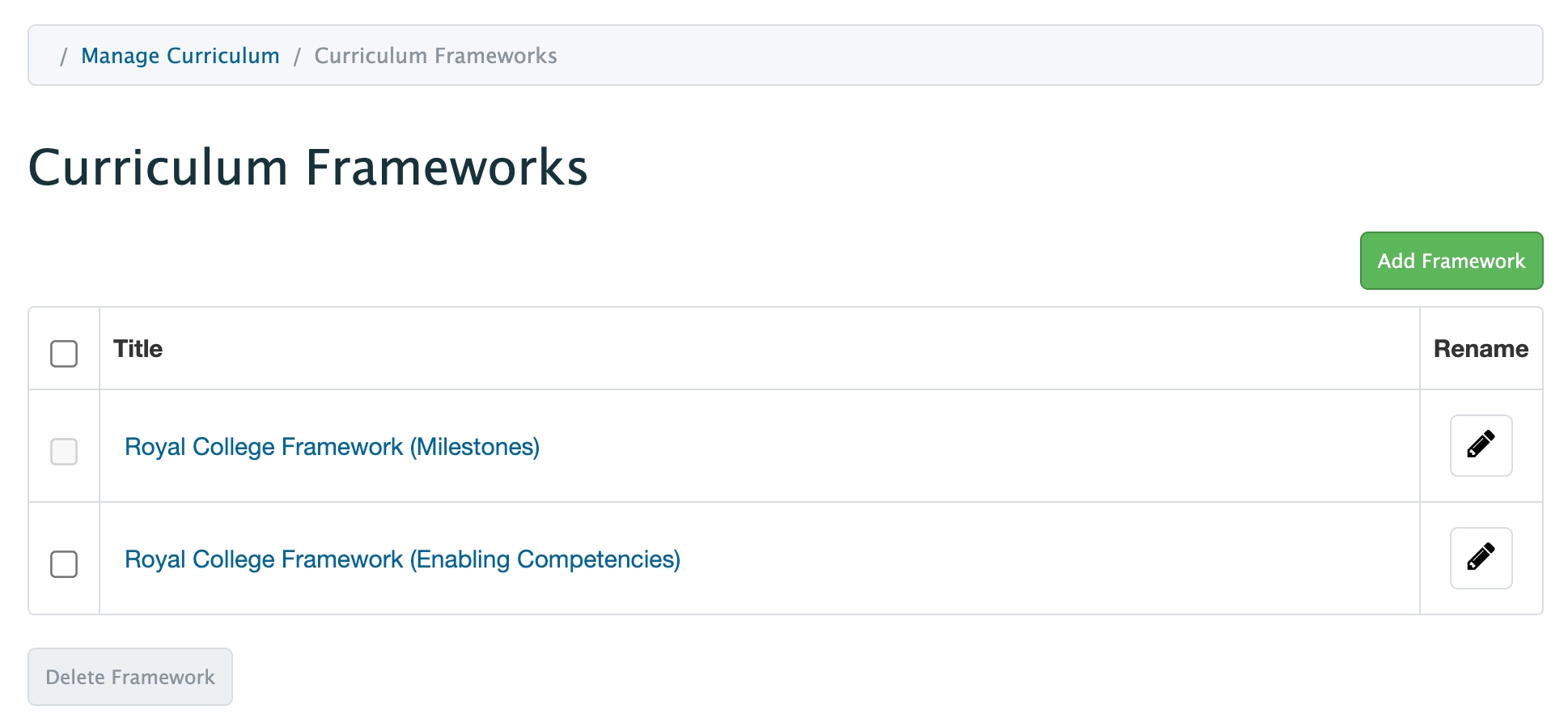

Click the Add Framework button.

In the Adding New Framework box, input the Title of the framework and click the Add Framework button.

The new framework will appear in the Curriculum Frameworks table.

Rename a framework as needed by clicking the edit icon on the right side of the Curriculum Frameworks table.

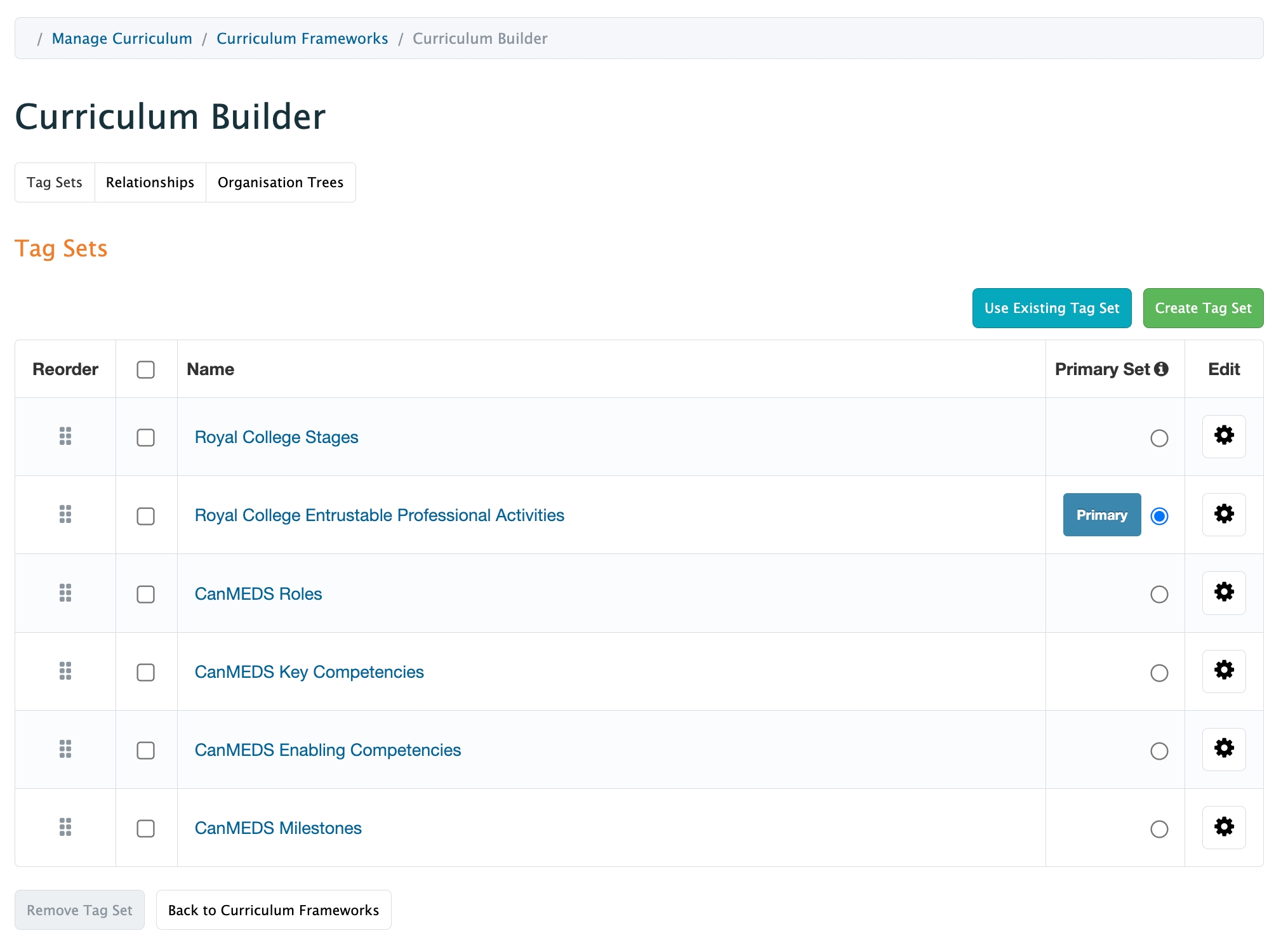

Once a Curriculum Framework exists, you need to define the tag sets to include in that framework. You can create new tag sets or add existing tag sets. If you add existing tag sets, you'll still need to upload objectives for those tag sets when you build an objective tree. However, you'll be able to manually map tags uploaded through an objective tree to other tags stored in Elentra.

From Admin > Manage Curriculum > Curriculum Frameworks, click on the framework to which you want to add tag sets.

On the Tag Sets page, click the Create Tag Set button.

On the Adding Tag Set page, under the Details section, input the Code, Name, and Shortname. These fields are required.

Scroll down to select which course(s) the tag set will be associated with.

Scroll down to select from the options under Framework Specific Options, if applicable. See Curriculum Framework Tag Set Options for more details.

Scroll down to select from the options under Form Options, if applicable. See Curriculum Framework Tag Set Options for more details.

Scroll down to select from the options under Advanced Options, if applicable. See Curriculum Framework Tag Set Options for more details.

Click the Save button to add the tag set.

Note that even if you add an existing tag set to a curriculum framework, Elentra will still require you to upload objectives to build an objective tree.

In the Curriculum Builder table, click on the title of the framework to which you want to add tag sets.

On the Tag Sets page, click the Use Existing Tag Set button.

On the Use an Existing Objective Set box, use the Existing Objective Set drop-down to select a tag set.

Click the Import button.

On the Edit Tag Set page, under the Details section, ensure the correct Code, Name, and Shortname appear.

Scroll down to ensure the proper course(s) are associated with the tag set.

Scroll down to ensure the necessary tag set options are selected, if applicable:

Click the Save button to add the tag set.

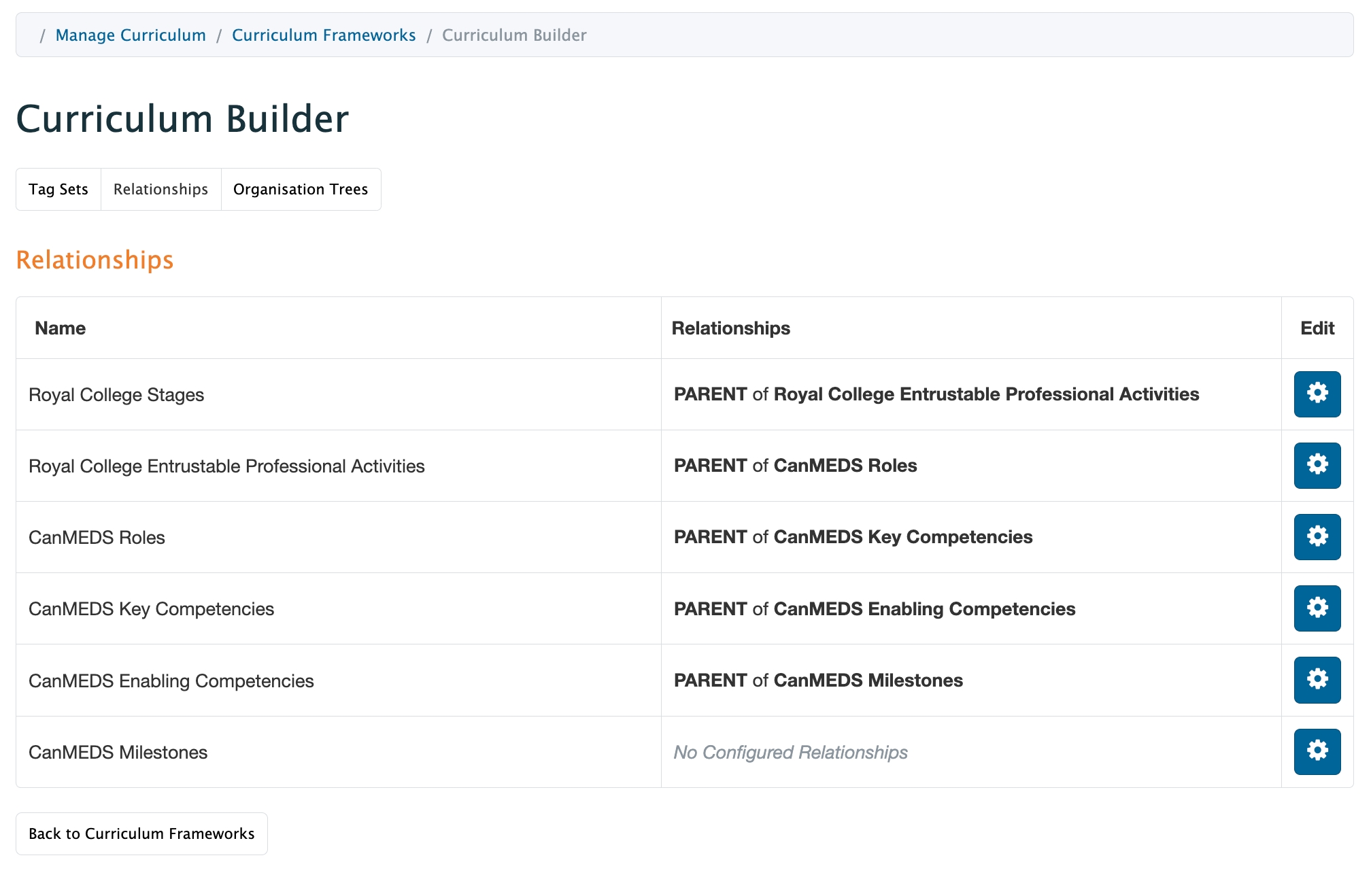

This step is necessary to define the relationships between tags. These relationships will help store information properly when you import curriculum tags to create the map of your curriculum.

In the Curriculum Builder table, click on the title of the framework whose tag set relationships you want to manage.

Click on the Relationships tab.

Click the Manage Relationships button for the tag set to which you want to add a relationship.

In the Adding Relationships to [insert tag set title] box, use the Relationship Type and Tag Set drop-downs to add the proper relationship.

Repeat the above steps for all necessary tag sets. This will create the framework for your CBE Course (program).

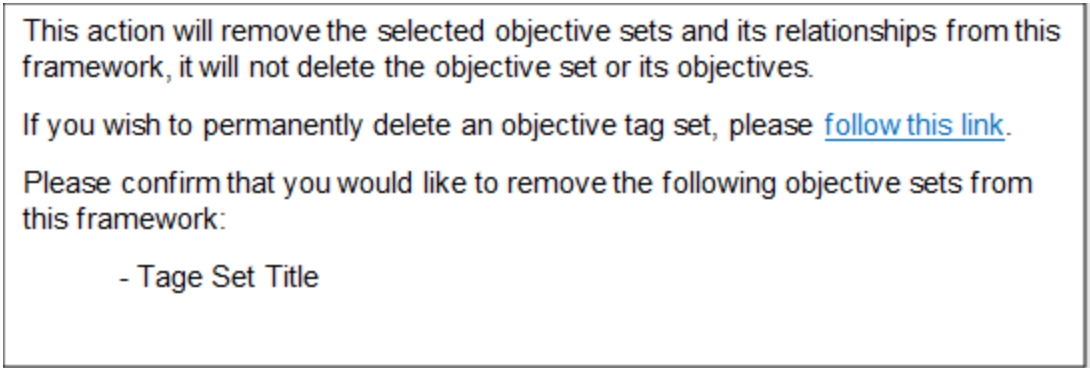

On the Curriculum Builder page, select the tag set on the Tag Set table.

Click the Remove Tag Set button.

On the Remove Objective Sets box, review the instructions:

Click the Remove button to delete.

Although Elentra allows individual programs using a Royal College framework to upload specific Key and Enabling Competencies, organizations are still required to upload a standard list of Key and Enabling Competencies at the organization level.

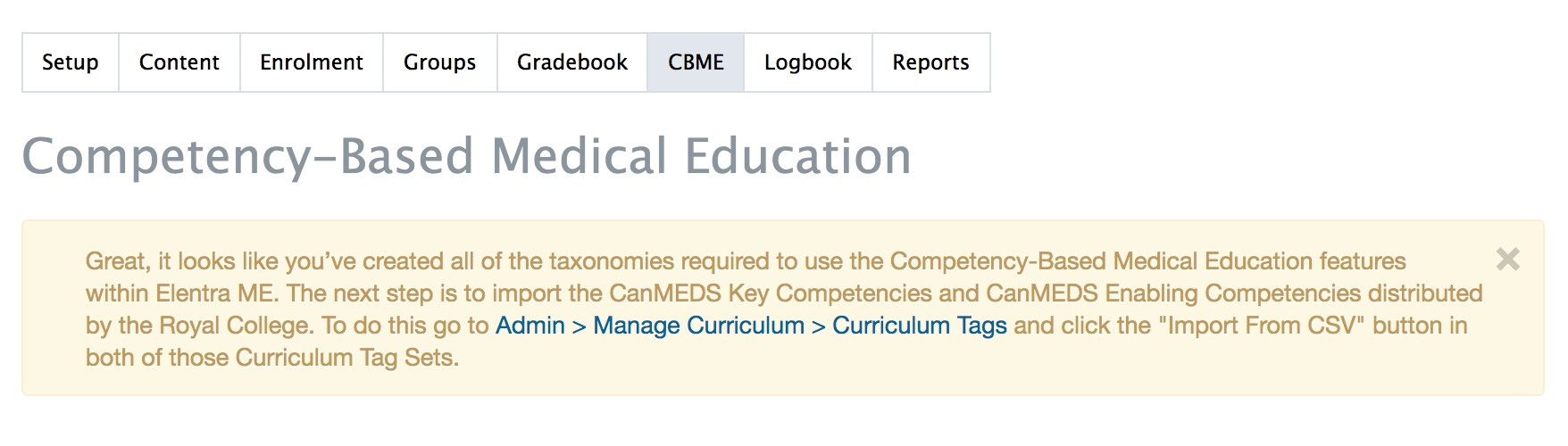

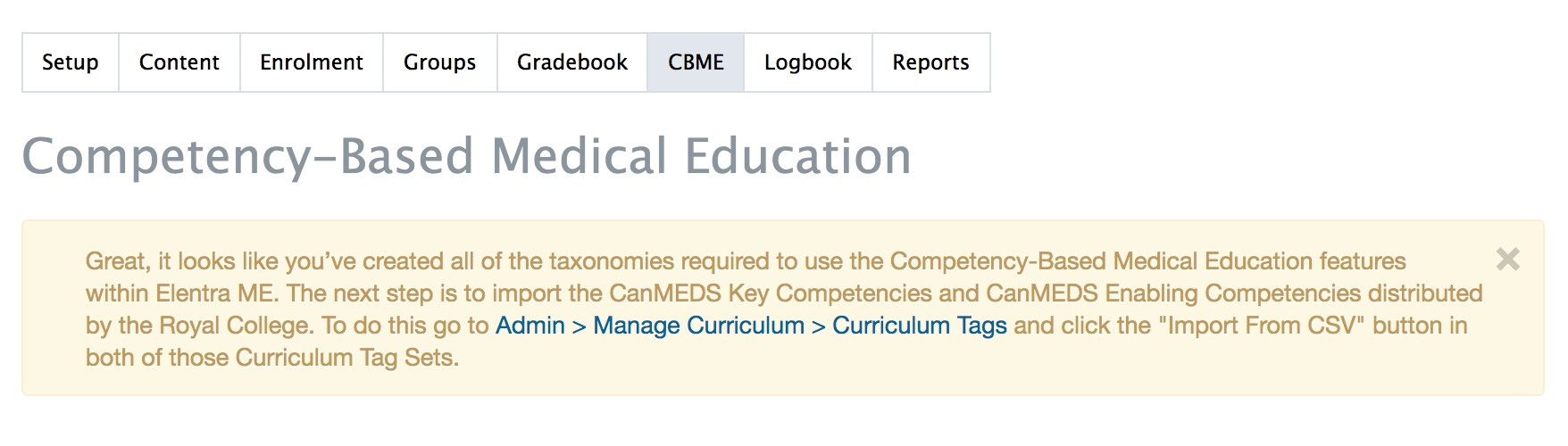

If you see a warning like the one below, it means your organization still needs to upload standard key and enabling competencies through Manage Curriculum.

For Consortium schools who are exploring CBE in Elentra, although you are never going to use the Key and Enabling Competency tag sets, Elentra still requires you to populate these tag sets with some data.

Download these files to have available for the curriculum tag upload.

Upload Key and Enabling Competency Tags

Navigate to Admin > Manage Curriculum.

Click 'Curriculum Tags' from the Manage Curriculum card on the left sidebar.

Click on a curriculum tag set name (e.g. CanMEDS Key Competencies) and then click 'Import from CSV' in the top right.

Drag and drop or select the appropriate file from your computer and click 'Upload'.

You will get a success message and the curriculum tags you've added will appear on the screen.

Now that you have fulfilled the requirements of the auto-setup tool, you can begin to create your own curriculum framework as needed.

Most organizations will have already completed this step while they were using CBME. If you are testing things or building a new organization for some reason, these files can be used to populate the standard key and enabling competencies. When you build objective trees for individual programs you'll need to prepare KC and EC templates to upload for that program.

Upload Standard Key and Enabling Competency Tags

Navigate to Admin > Manage Curriculum.

Click 'Curriculum Tags' from the Manage Curriculum card on the left sidebar.

Click on a curriculum tag set name (e.g. CanMEDS Key Competencies) and then click 'Import from CSV' in the top right.

Drag and drop or select the appropriate file from your computer and click 'Upload'.

You will get a success message and the curriculum tags you've added will appear on the screen.

Now that you have fulfilled the requirements of the auto-setup tool, you can begin to create your own curriculum framework as needed.

Even if you are not using the Royal College of Physicians and Surgeons curriculum structure, Elentra still has some RC prerequisites in the system. You can upload dummy data to meet the requirement to populate certain curriculum tag sets.

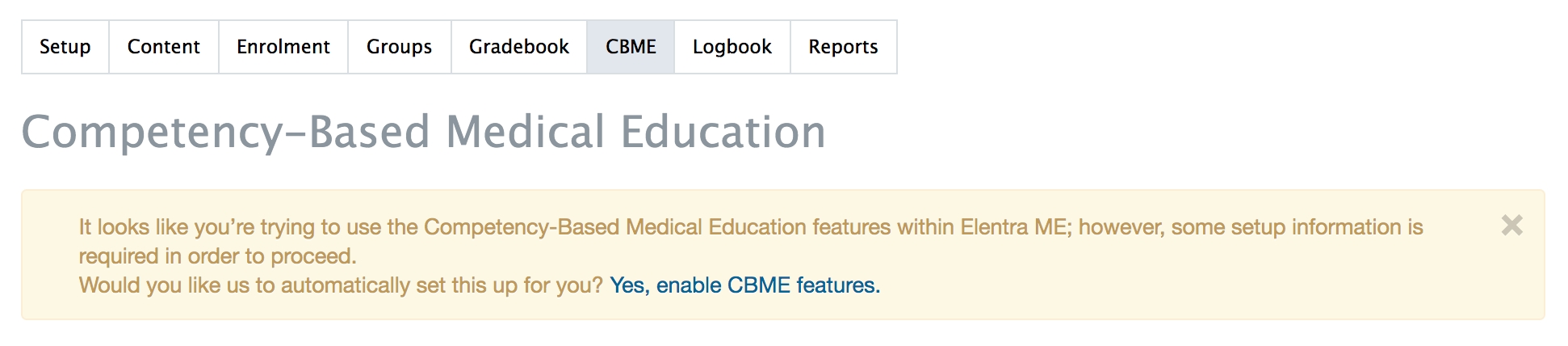

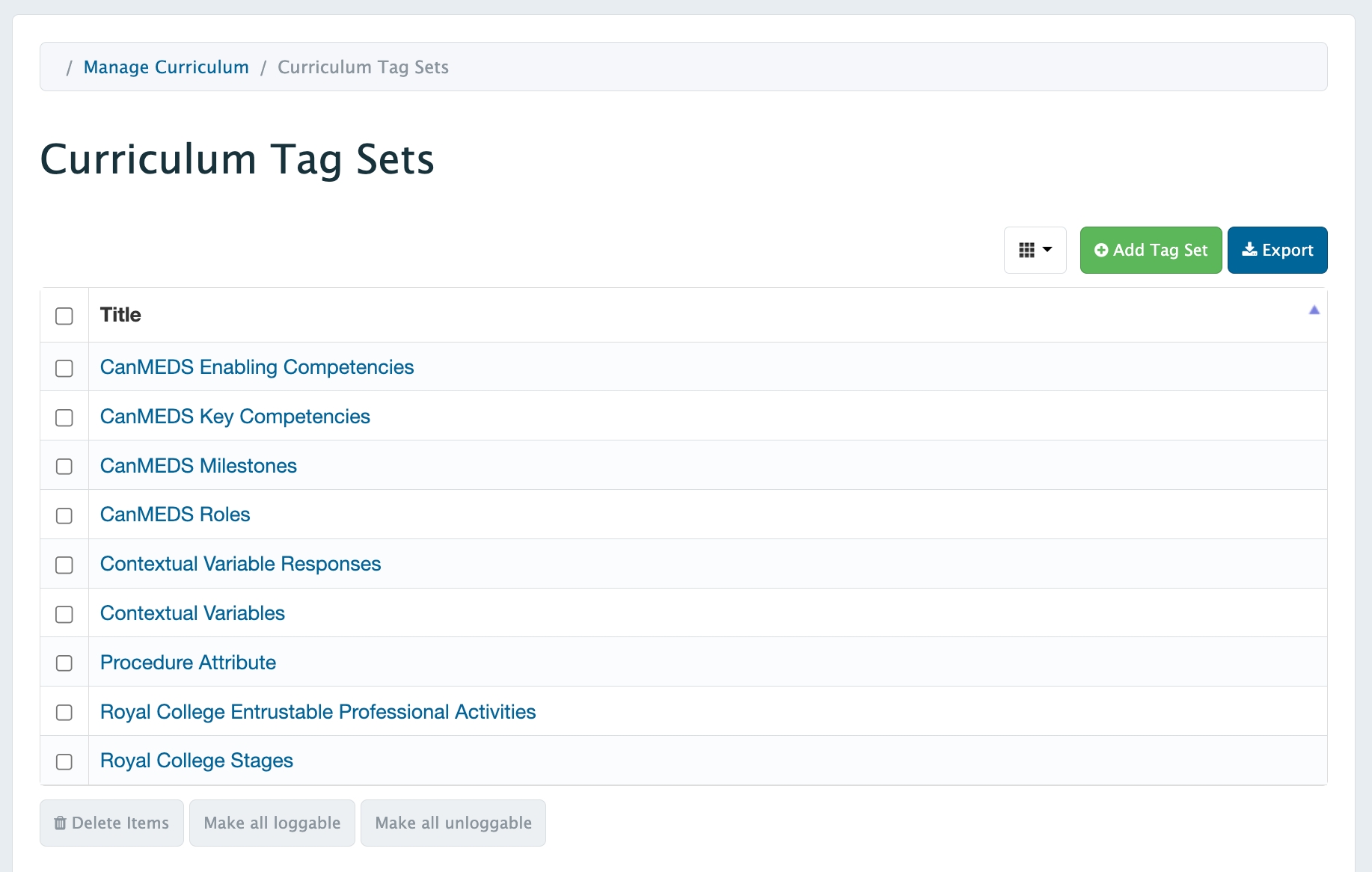

To use Elentra's CBE tools, nine required curriculum tag sets must be in place. An auto-setup tool will create the tag sets.

Navigate to Admin > Manage Course > Course (new or existing), and click the CBME tab.

If the required curriculum tag sets aren't yet configured, Elentra will prompt users to auto-setup the required curriculum tag sets and will populate the CanMEDS Roles, Royal College Stages, and Contextual Variables tags. You just do this once for an organization.

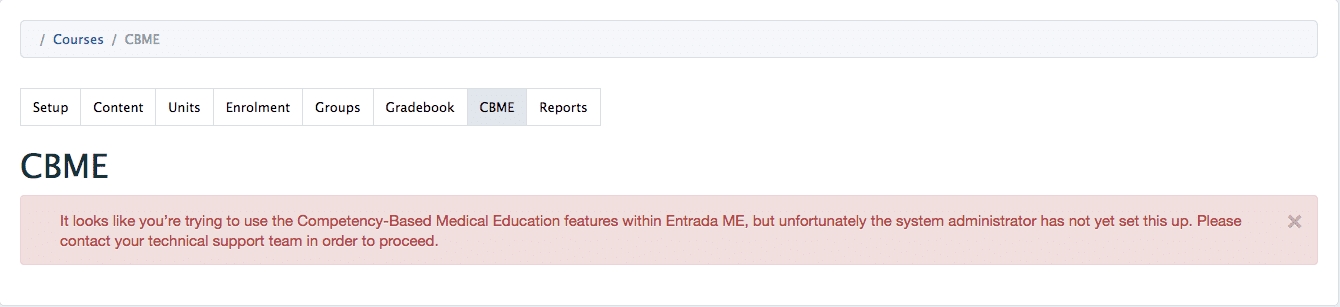

Users without permission to use the auto-setup feature will be directed to contact a system administrator with the message below.

After building the required tag sets, Elentra will prompt you to populate a standard list of key and enabling competencies. This is also a relic of CBME supporting Royal College programs but schools exploring CBE can upload dummy data to fulfil this requirement. An organization only has to do this once. Go to the next page to learn how to upload information for the standard key and enabling competency tag sets.

Unless you are working with a developer, do not change the tags in the pre-built tag sets; if you do, you will be prompted to run the auto-setup feature again.

Use .csv format to upload objectives

A Course Tree is required for every course, but there are several ways to create one. You can upload curriculum tags per course (appropriate when courses/programs have unique curriculum) or copy an existing Organisation Tree to multiple courses (appropriate when multiple courses share the same curriculum).

Upload objectives within a course under Admin > Manage Courses.

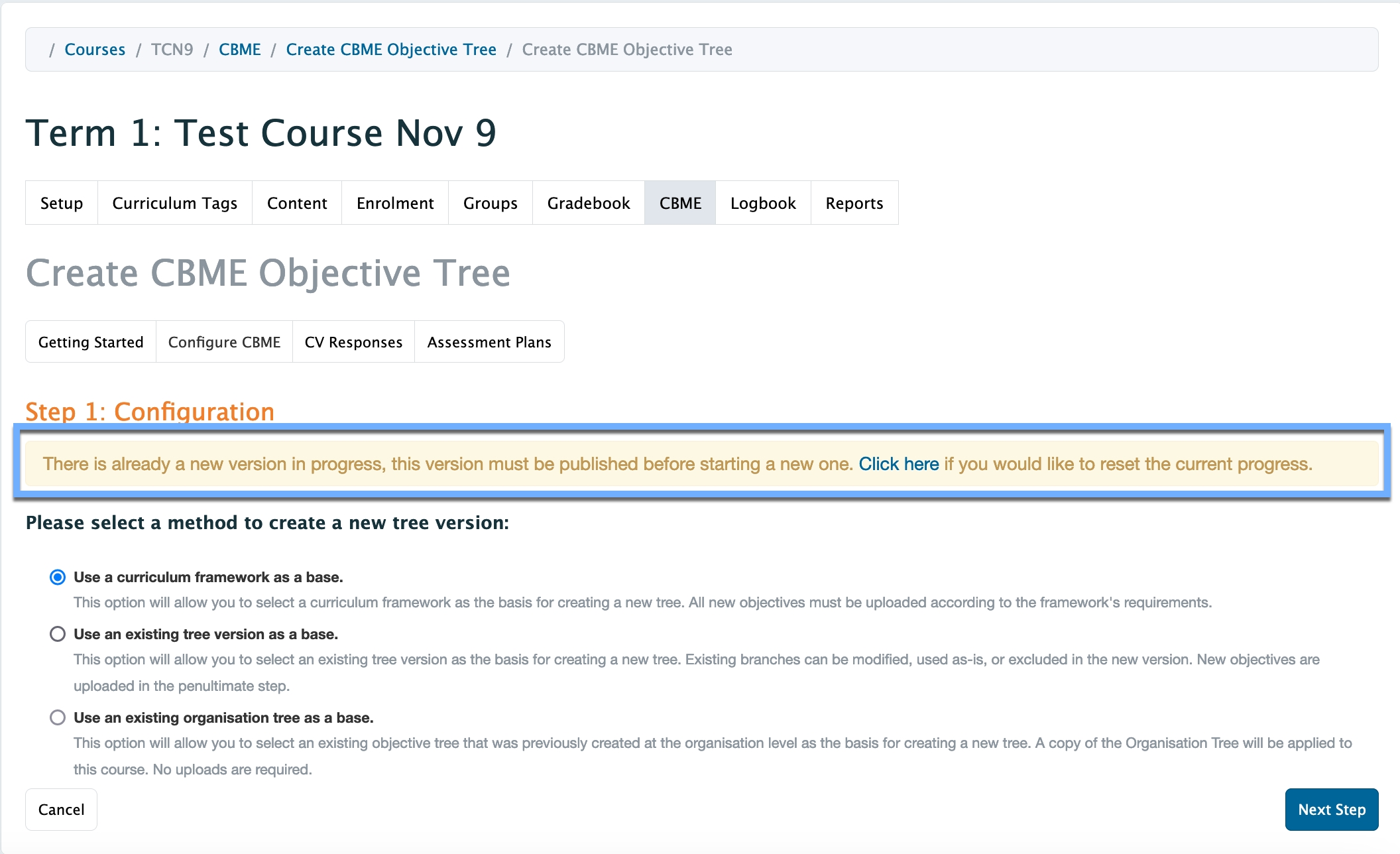

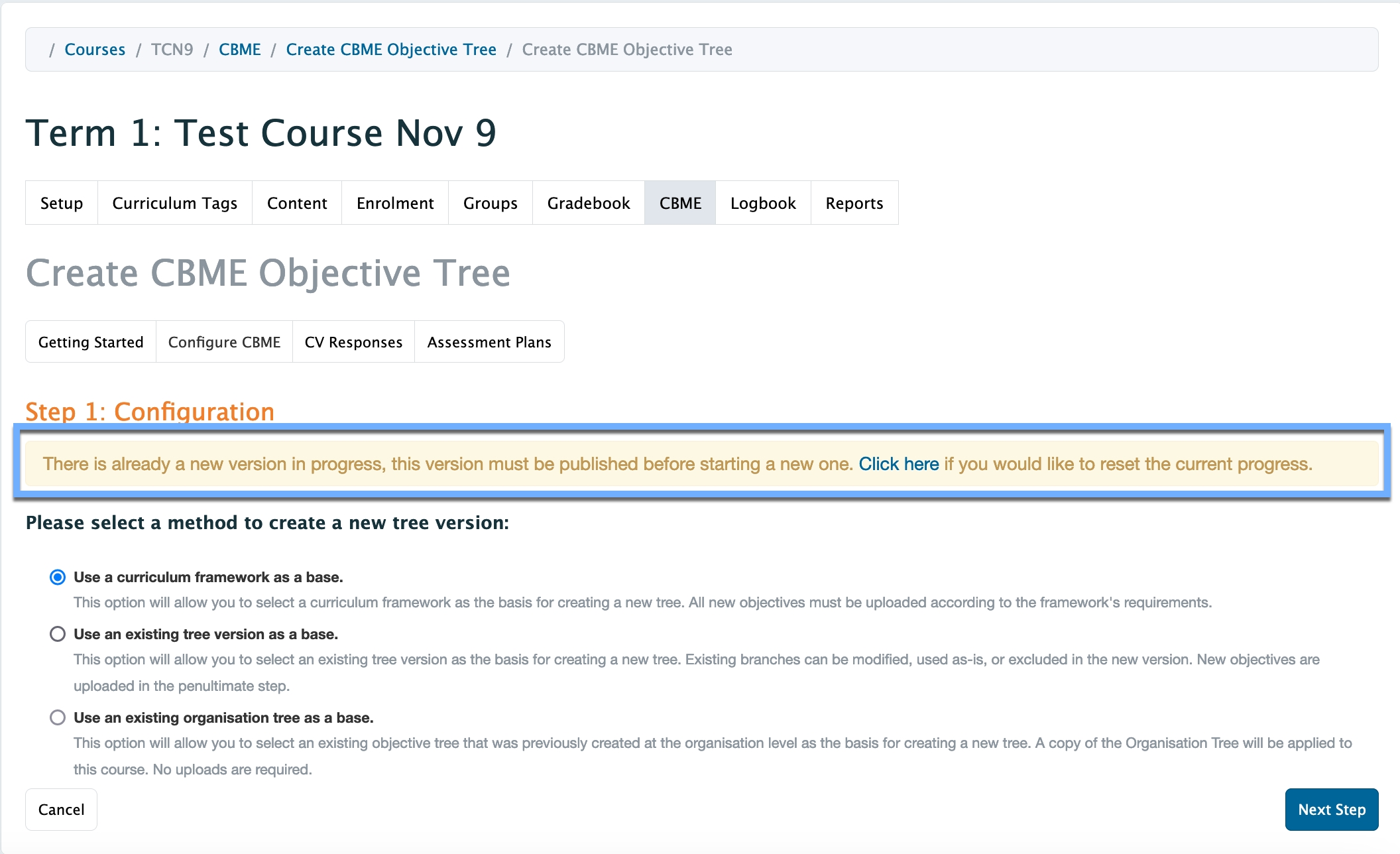

Within a course, select the CBME tab and then the Configure CBME tab to begin Step 1: Configuration. Select Use a curriculum framework as a base and click Next Step.

Select a framework in the drop-down and click Next Step.

Ensure the objective .csv files are formatted as indicated in the highlighted instructions.

For each curriculum tag set,

Select Default upload or Upload referencing a single parent code

Select the appropriate file

Click 'Save and upload (tag set name)'

Choose a file or drag and drop to add a .csv file.

A confirmation highlighted in green will display when the file is uploaded successfully.

Repeat for each curriculum tag set as needed.

If you forget to select the correct Upload radio button (ex. Upload referencing a single parent code), you must reset the upload by going back to Step 1: Configuration in the CBME tab of the course.

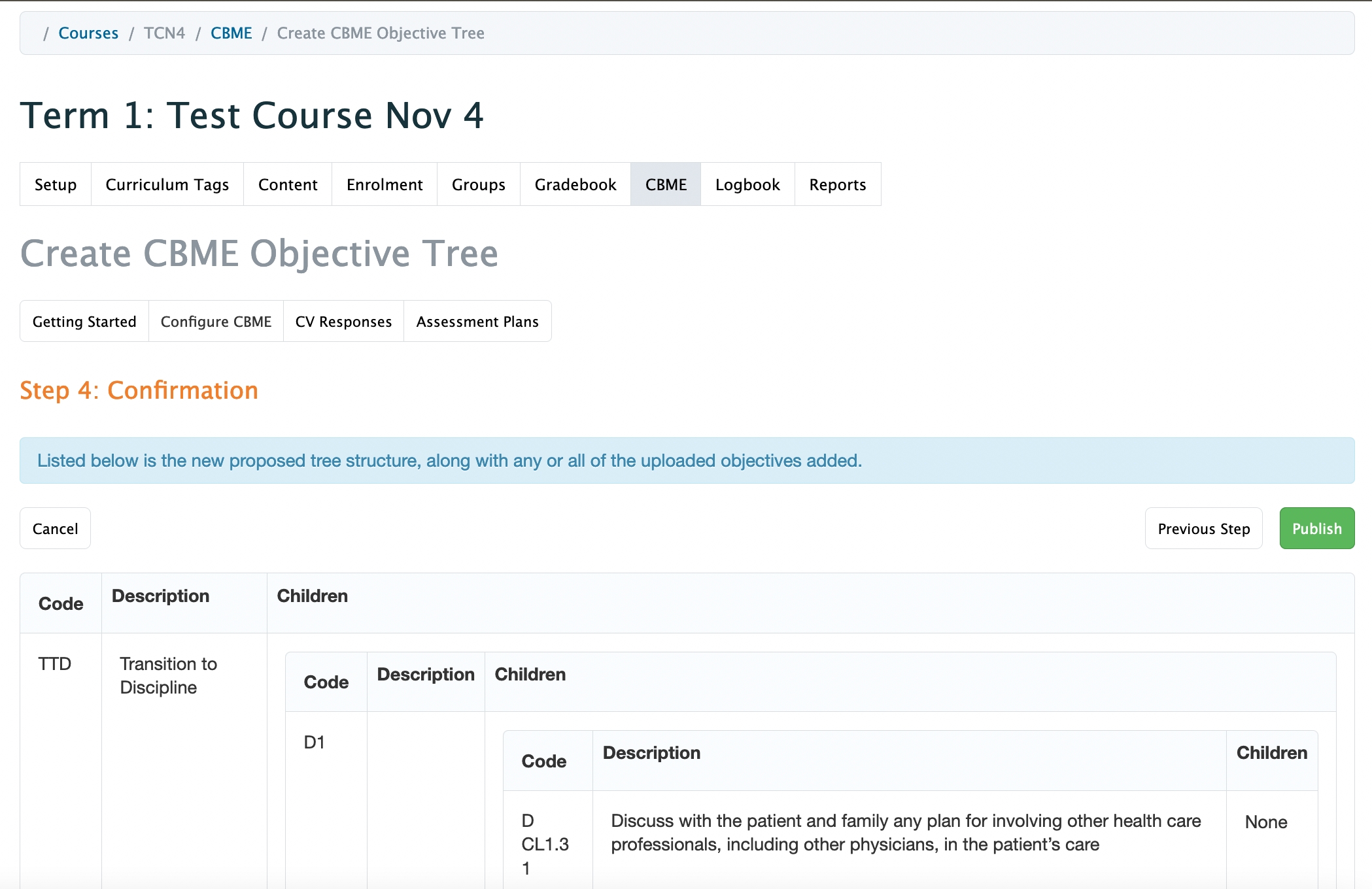

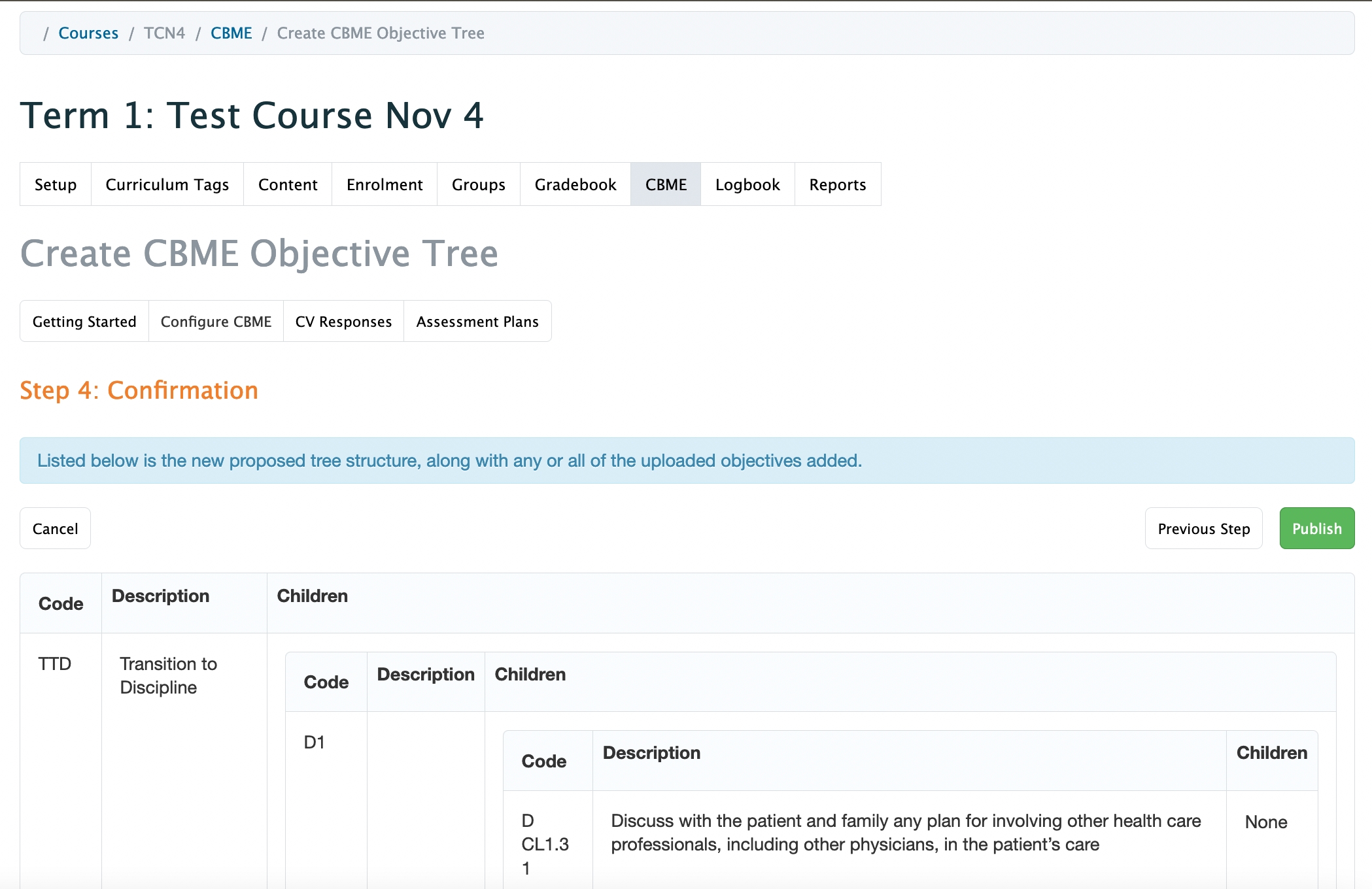

You can review the new proposed tree structure with the uploaded objectives added. Click the Publish button to save.

Note: if there are errors in the upload, then the objective uploads have not been published. The publish button also does not appear when there is an error. You will need to reset the objective upload. See Notes section in Step 4 of this documentation.

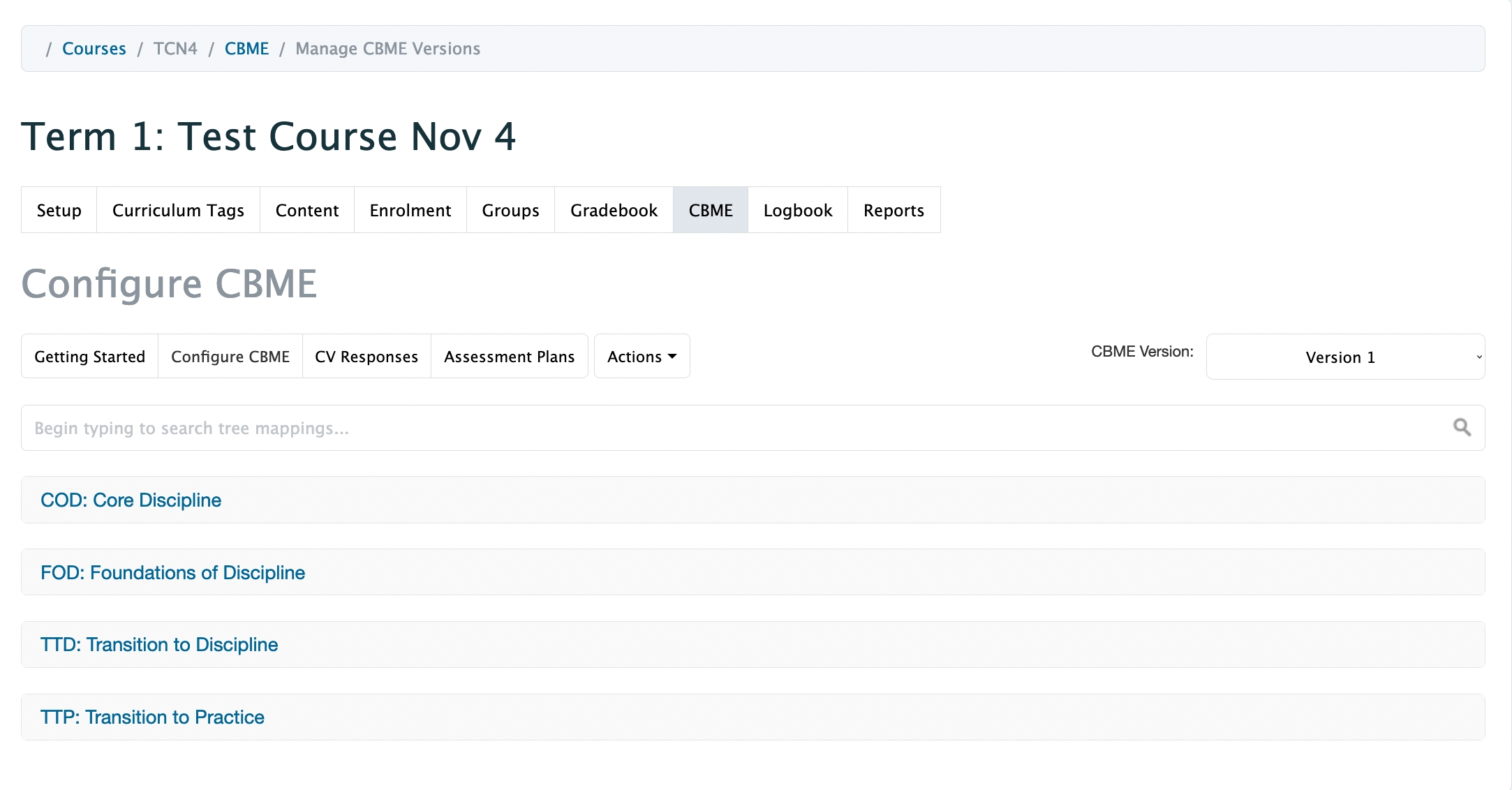

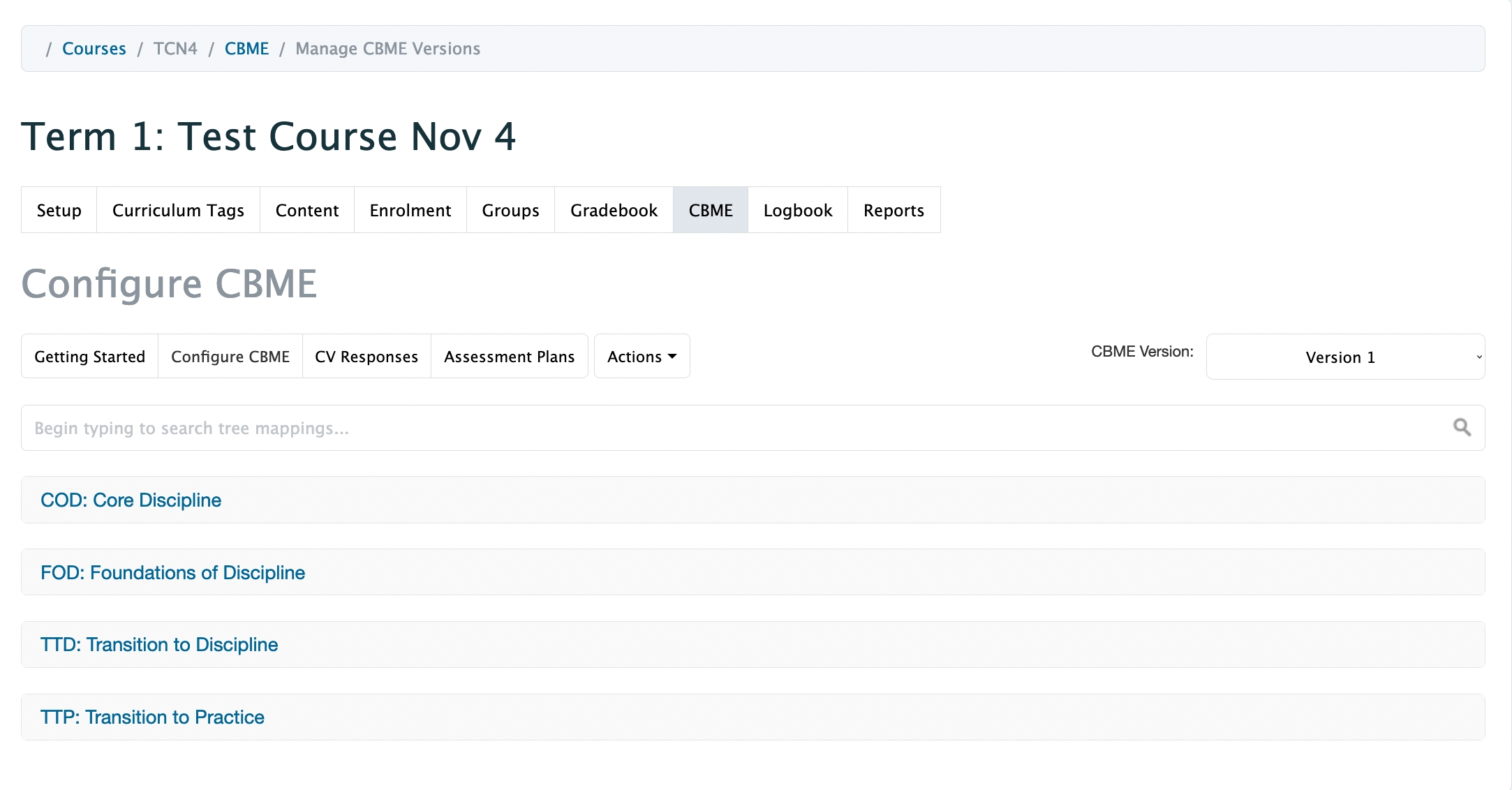

A behind the scenes task will run to publish your objective tree and make it visible to you. At most schools, this happens once a day. After sufficient time has passed, you can refresh the Configure CBME tab screen to review and search within the new tree mappings.

Use this path to review migrated CBME data to CBE

Pre-requisite: A developer has already migrated CBME data to CBE.

After migrations have run and you log back into your upgraded Elentra ME 1.24 or higher environment, you can expect to see some automatically generated Curriculum Frameworks.

Since most schools have mapped to Milestones, we'll focus on that framework for demonstrative purposes.

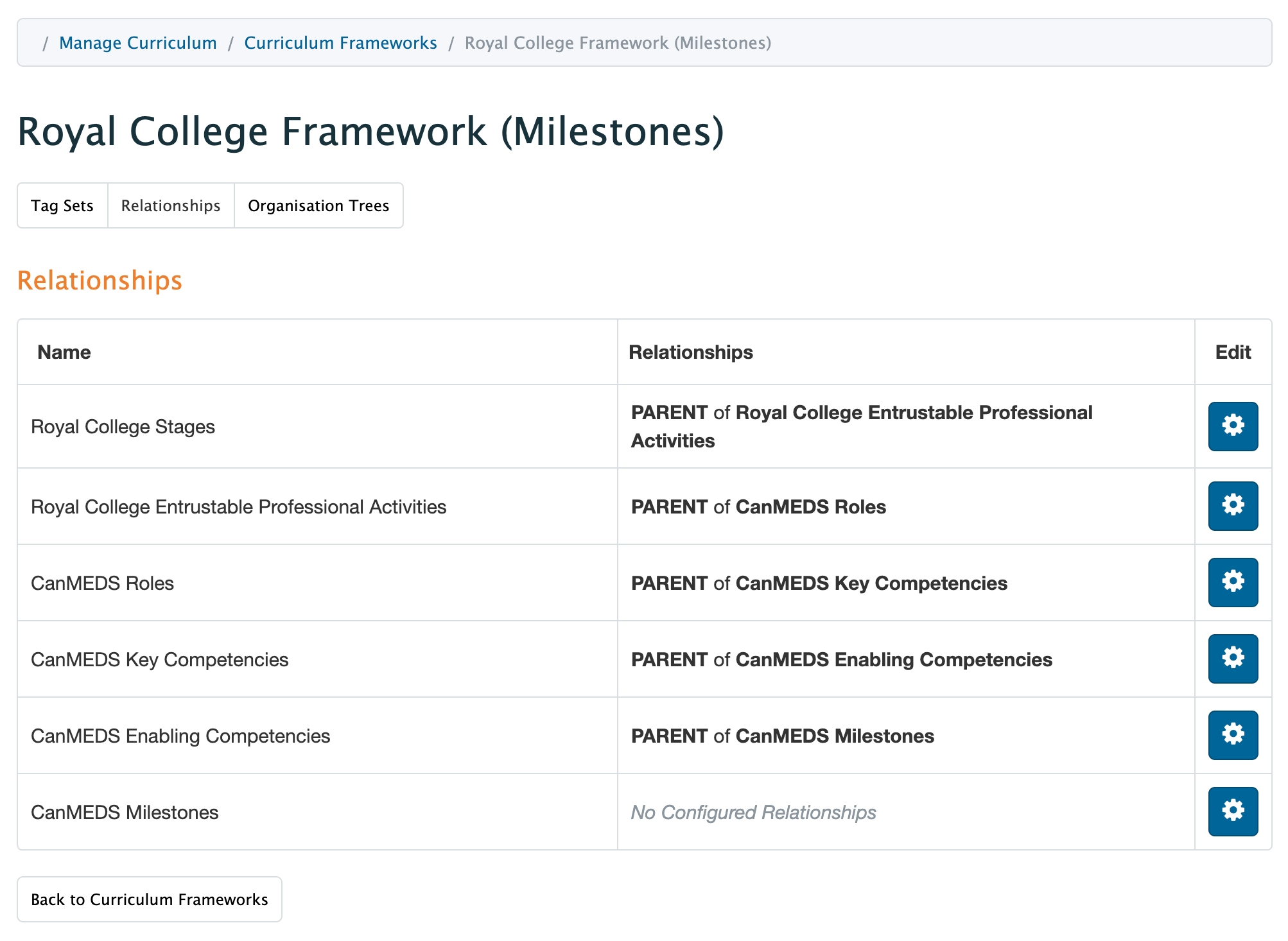

The Royal College Framework (Milestones) framework should look like this:

Where tag set relationships are defined as follows:

You should not need to change anything about this setup.

Please check all tag set settings for the tags included in your Royal College Framework (Milestones). This is important to ensure that the learner dashboard, program dashboard and assessment plan builder behave as expected. (See recommended tag set settings in Step 8 on the next page.)

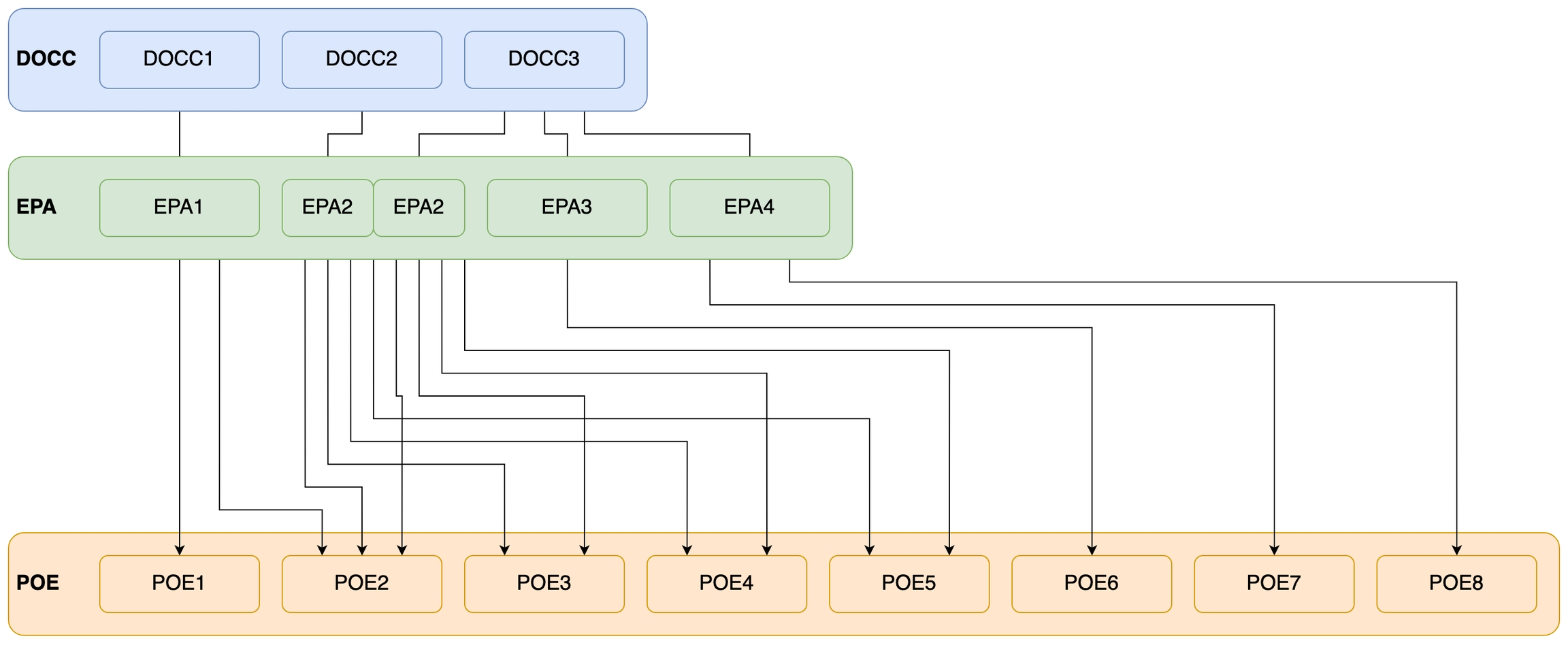

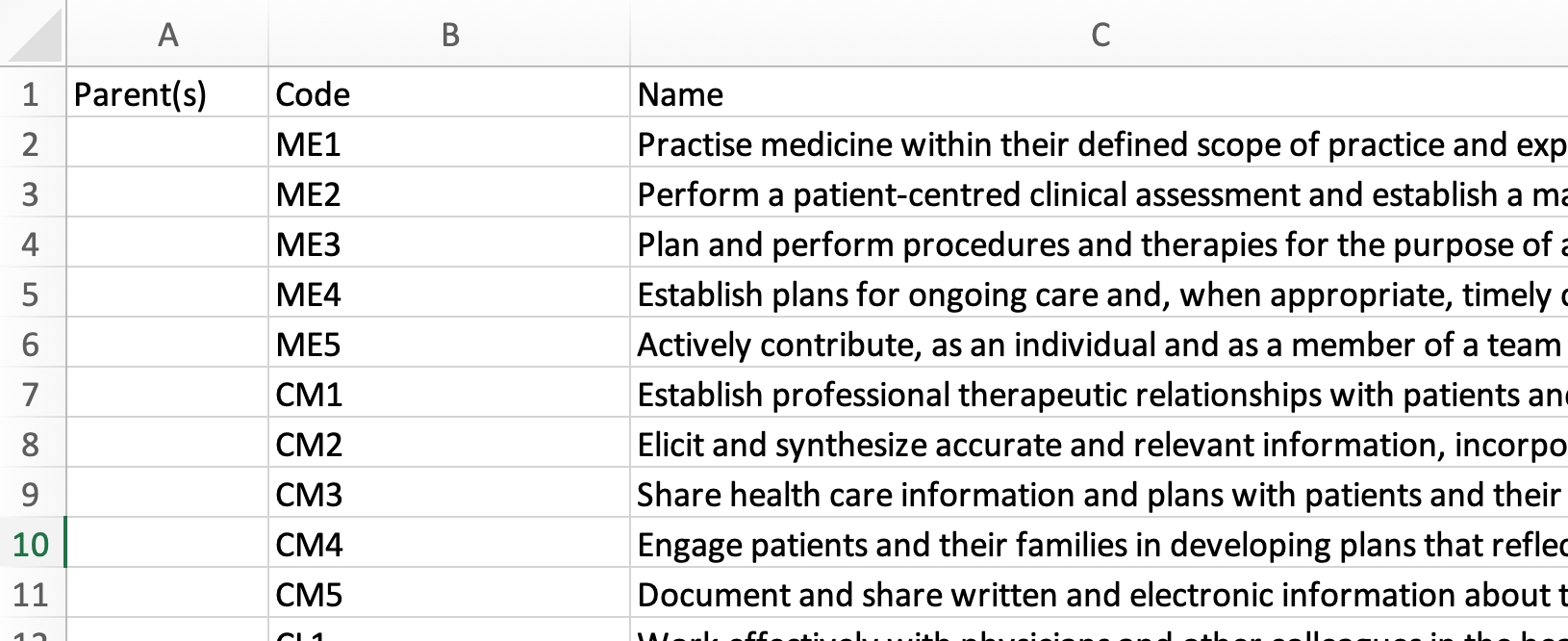

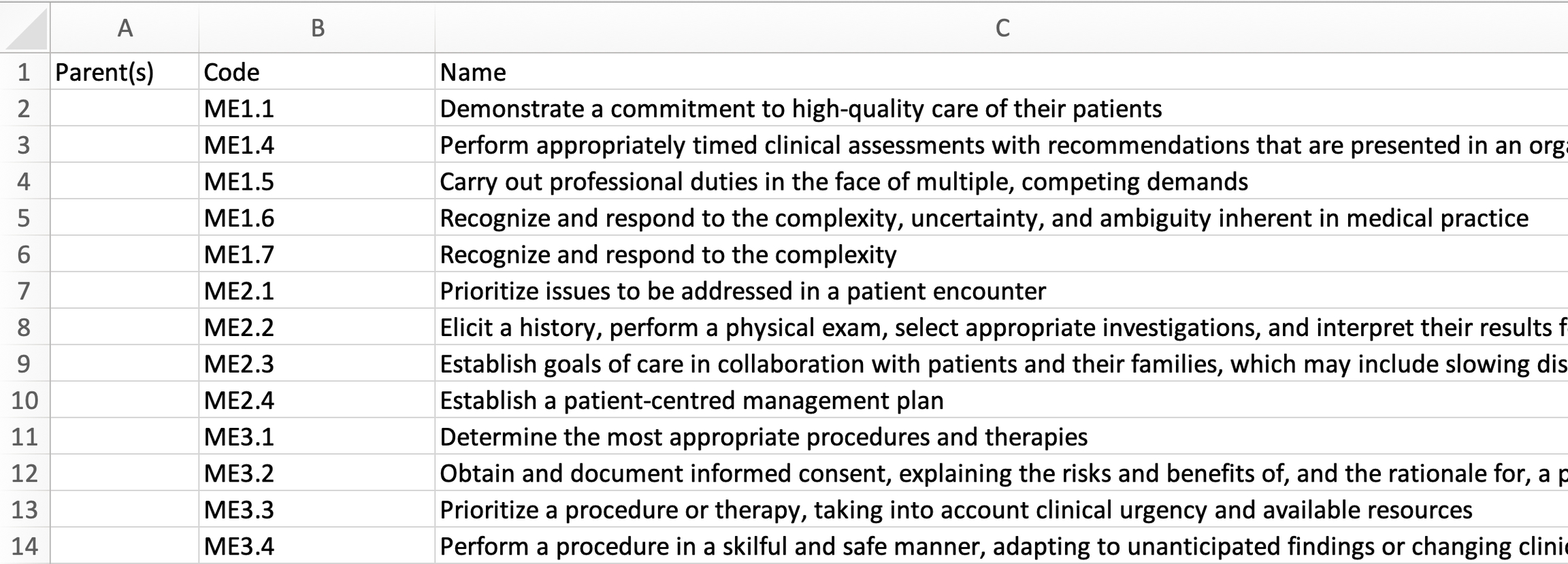

Use .csv format to upload objectives

In the context of Royal College programs, each program will upload its own curriculum via Admin > Manage Courses/Programs > CBME > Configure CBME.

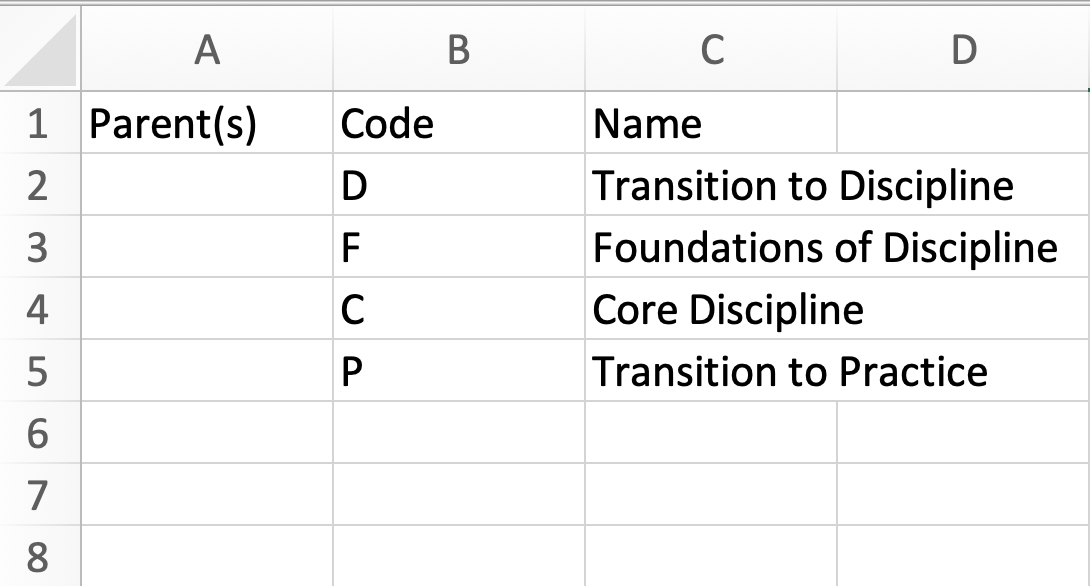

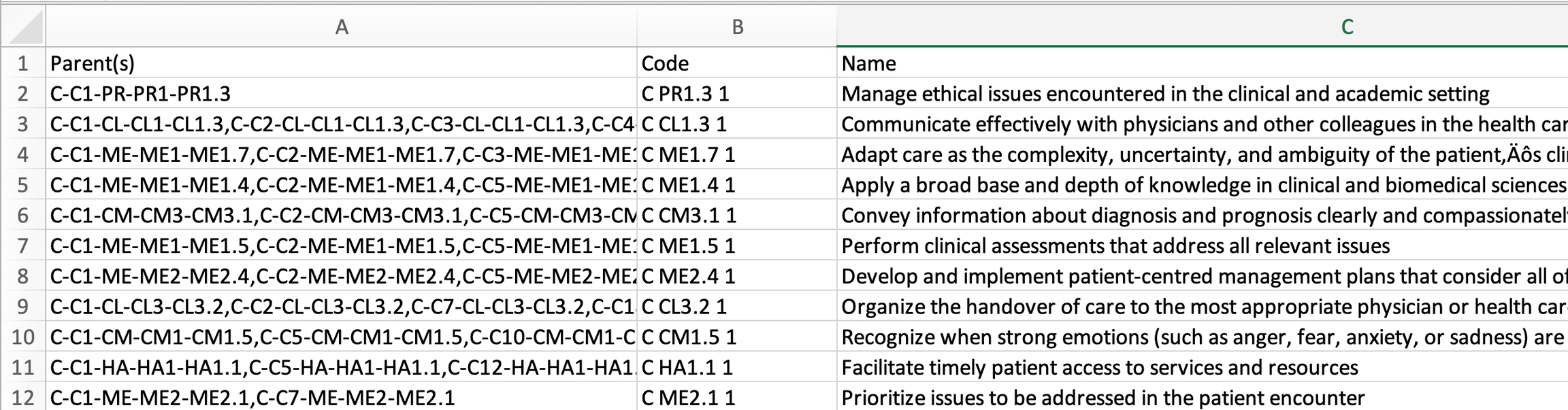

csv files prepared for RC program curriculum imports should include the following column headings:

Parent(s)

Code

Name

Description

Detailed Description

While all tag sets should include code and name information, the full parent path is only required on the milestone template. The other curriculum tag sets can be uploaded with no parents at all.
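Before uploading, it can help to confirm that a file carries the expected column headings. The following is a minimal sketch using Python's standard csv module; the function name `check_headings` and the sample row are hypothetical, and the check only mirrors the heading list given above rather than Elentra's own validation.

```python
import csv
import io

# Column headings listed above for RC program curriculum imports.
REQUIRED_HEADINGS = ["Parent(s)", "Code", "Name", "Description", "Detailed Description"]


def check_headings(csv_text: str) -> list[str]:
    """Return the required headings that are missing from the first row."""
    reader = csv.reader(io.StringIO(csv_text))
    header = [cell.strip() for cell in next(reader, [])]
    return [heading for heading in REQUIRED_HEADINGS if heading not in header]


# Hypothetical file content with a blank Parent(s) cell.
sample = (
    "Parent(s),Code,Name,Description,Detailed Description\n"
    ",D,Transition to Discipline,,\n"
)
missing = check_headings(sample)  # an empty list means every heading is present
```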

1. Navigate to the Configure CBME tab. Select Use a curriculum framework as a base and click Next Step.

2. Select a framework in the drop-down and click Next Step.

3. Complete the required upload for each tag set.

Reminder: While all tag sets should include code and name information, when using the default upload method, the full parent path is only required on the milestone template. The other curriculum tag sets can be uploaded with no parents at all.

Select an upload method.

For the Stages upload, ensure the Default upload option is selected.

For the Entrustable Professional Activities upload, ensure the Default option is selected.

For the Roles upload, ensure the Default upload option is selected.

For the Key Competency upload, ensure the Default upload option is selected.

For the Enabling Competency upload, ensure the Default upload option is selected.

For the Milestones upload, ensure the Default upload option is selected.

Drag and drop a file into the appropriate space. You will see the file name when the file is there.

Click Save and upload and you'll see a green success message.

Notes:

If you forget to select the correct Upload radio button (e.g., Upload referencing a single parent code), you must reset the upload by going back to Step 1: Configuration in the CBME tab of the course or in the Organization Trees section of the Curriculum Framework Builder.

You can review the new proposed tree structure with the uploaded objectives added. Click the Publish button to save.

Note: If there are errors in the upload, the green Publish button will not display and you will be unable to publish. You will need to reset the objective upload. See Notes section in step 3 of this documentation.

After you publish your course tree, a behind the scenes task must run for the curriculum to publish. At many schools this happens once a night. After time has passed, you can refresh the Configure CBME tab screen to view the imported curriculum.

Please reach out to the Consortium Core Team on Slack if you are a Consortium school and are looking for some sample data to test CBE with.

Use .csv format to upload objectives

Depending on your curriculum framework and organization's needs, you may upload your tag sets within the organizational tree (and then leverage that tree in multiple courses), or you may upload your tag sets through individual courses.

The titles of the column headings in the uploaded spreadsheets must be the following:

Note: Make sure you list all parents for a curriculum tag in one row, with commas separating the parents. There should be no duplicates in the Code column; if there are, Elentra will only pick up the first parent relationship for that code/tag and ignore the remaining entries.

The “Parent(s)” field must be specific as to where the objective is to be located in the Curriculum Framework structure:

Example: for objective PTYPE1, the Parent(s) field may be: “DOCC1-EPA1-POE1, DOCC1-EPA1-POE2”. This will attach objective PTYPE1 to Curriculum Tag POE1 under EPA1, which is under DOCC1, as well as to POE2 under EPA1, which is under DOCC1.

Parent(s) field can be empty until used in another upload. For example, objectives at the highest level would be uploaded with the “Parent(s)” field empty.

Sample .csv format:
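As a rough illustration of the default upload format (not a reproduction of the original sample), the sketch below parses a hypothetical file for one tag set. It assumes the five column headings used for RC imports, assumes that codes themselves contain no hyphens, and uses made-up names and rows; `parse_parent_paths` and `duplicate_codes` are hypothetical helpers, not Elentra functions.

```python
import csv
import io
from collections import Counter

# Hypothetical upload for a single tag set; the Name values are placeholders.
SAMPLE = '''Parent(s),Code,Name,Description,Detailed Description
"DOCC1-EPA1-POE1, DOCC1-EPA1-POE2",PTYPE1,Example procedure type,,
DOCC1-EPA1-POE1,PTYPE2,Another example,,
'''


def parse_parent_paths(parents_field: str) -> list[list[str]]:
    """Split a Parent(s) cell into full paths, each a list of codes from the top down."""
    paths = []
    for path in parents_field.split(","):
        path = path.strip()
        if path:
            paths.append(path.split("-"))
    return paths


def duplicate_codes(rows: list[dict]) -> list[str]:
    """List codes that appear on more than one row (all parents belong on one row)."""
    counts = Counter(row["Code"].strip() for row in rows)
    return [code for code, count in counts.items() if count > 1]


rows = list(csv.DictReader(io.StringIO(SAMPLE)))
paths = parse_parent_paths(rows[0]["Parent(s)"])
repeated = duplicate_codes(rows)
```

Note that a Parent(s) cell listing several parents contains commas, so it has to be quoted in the .csv file, as in the first row above.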


Upload objectives referencing their immediate parent.

Example: when uploading objective PTYPE1, the Parent(s) field may only specify “POE1, POE2”. This attaches PTYPE1 to wherever Curriculum Tags POE1 and POE2 appear, no matter their parents or where they appear in the hierarchy.

Sample .csv format:


The Core Team recorded a demo session with a complete walk-through of the creation and versioning of Objective Trees:

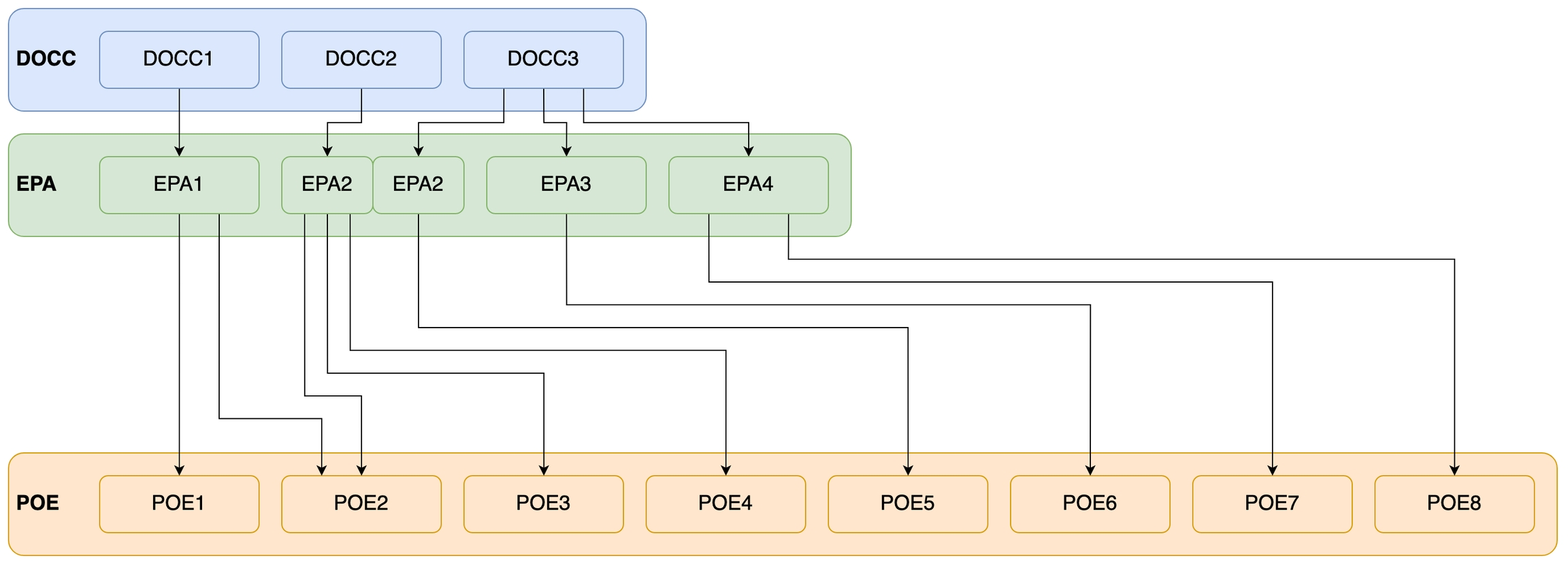

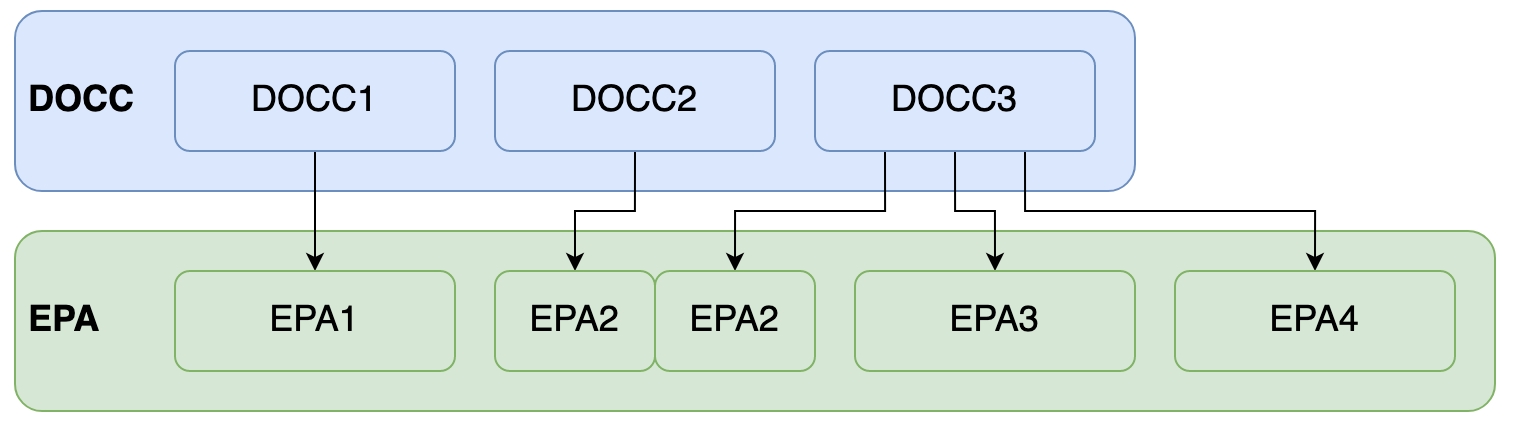

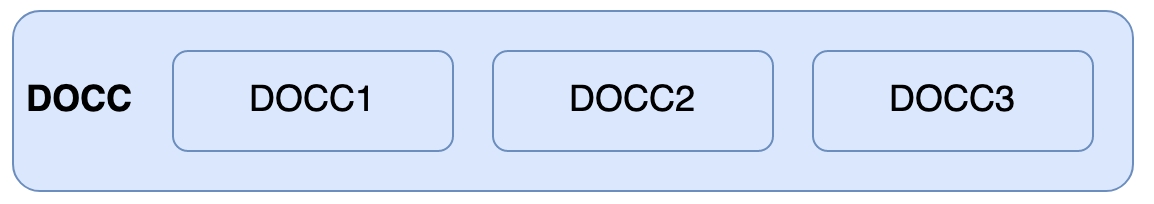

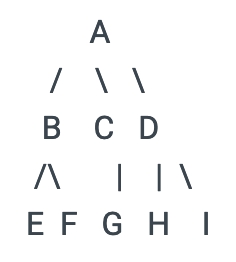

Given Curriculum Framework with structure:

DOCC - Domains of Clinical Care

EPA - Entrustable Professional Activity

POE - Phase of Encounter

PTYPE - Procedure Type

P - Procedure

SD/FMR - Skill Dimensions & FM CanMEDs Role

PT - Priority Topic

P&P - Progress & Promotion

When selecting to create a new Objective Tree Version and choosing to Upload, a page will be displayed to allow the user to upload objectives for each of the above Curriculum Tag Sets in the hierarchy, in order of hierarchy:

Domains of Clinical Care

Progress & Promotion

Entrustable Professional Activity

Phase of Encounter

Skill Dimensions & FM CanMEDs Role

Priority Topic

Procedure Type

Procedure

Note: For each of the above, the user can upload a CSV of objectives and select either Default upload or Upload referencing a single parent code.

For Curriculum Tag Sets with no parent in the Curriculum Framework structure, the selected method does not matter, since no Parent is specified for the Objectives.

For example, the following can be used to set objectives for Curriculum Tag Domains of Clinical Care, no matter the upload method since no Parent is specified.

In addition, for Curriculum Tag Sets that appear in the second level (below the top) in the Curriculum Framework, the upload method will also not matter since only a single Parent is specified:

In the above example, objective EPA2 has two parents, DOCC2 and DOCC3.

For Curriculum Tag Sets that appear in lower levels than the top in the Curriculum Framework, the selected upload method will matter.

Default Upload Method Example

The following is an example of how POE objectives can be uploaded using the Default Method, under EPAs:

The above will, for example, set objective POE1 under DOCC1-EPA1 in the hierarchy. Also note the difference between POE4 and POE5.

POE4 is assigned to EPA2, but only to EPA2 under DOCC2 in the hierarchy, whereas POE5 is assigned to EPA2 under DOCC3:

Single Parent Code Upload Example

The following is an example of how POE objectives can be uploaded using the Single Parent Code method, under EPAs:

In the above, EPA2, no matter where it is in the hierarchy, is assigned the objectives POE2, POE3, POE4 and POE5. Therefore, both DOCC2-EPA2 and DOCC3-EPA2 are assigned those objectives.

Subsequent levels would be assigned objectives in a similar way.
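The difference between the two upload methods can be sketched as a small lookup over the existing tree. This is only an illustration of the behaviour described above, assuming a tree in which EPA2 sits under both DOCC2 and DOCC3; the function names and the tuple representation are hypothetical and say nothing about how Elentra stores trees.

```python
# Existing EPA-level positions in a hypothetical tree, written top-down.
EXISTING_PATHS = [
    ("DOCC1", "EPA1"),
    ("DOCC2", "EPA2"),
    ("DOCC3", "EPA2"),
]


def attach_default(existing_paths, parent_path: str):
    """Default upload: attach only under the exact full parent path."""
    target = tuple(parent_path.split("-"))
    return [path for path in existing_paths if path == target]


def attach_single_parent(existing_paths, parent_code: str):
    """Single parent code: attach wherever the code appears, whatever its parents."""
    return [path for path in existing_paths if path[-1] == parent_code]


# POE4 uploaded with the default method and Parent(s) "DOCC2-EPA2".
default_targets = attach_default(EXISTING_PATHS, "DOCC2-EPA2")
# POE4 uploaded with the single parent code method and Parent(s) "EPA2".
single_targets = attach_single_parent(EXISTING_PATHS, "EPA2")
```

With the default method the objective lands in one place; with the single parent code method it lands under every occurrence of EPA2.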

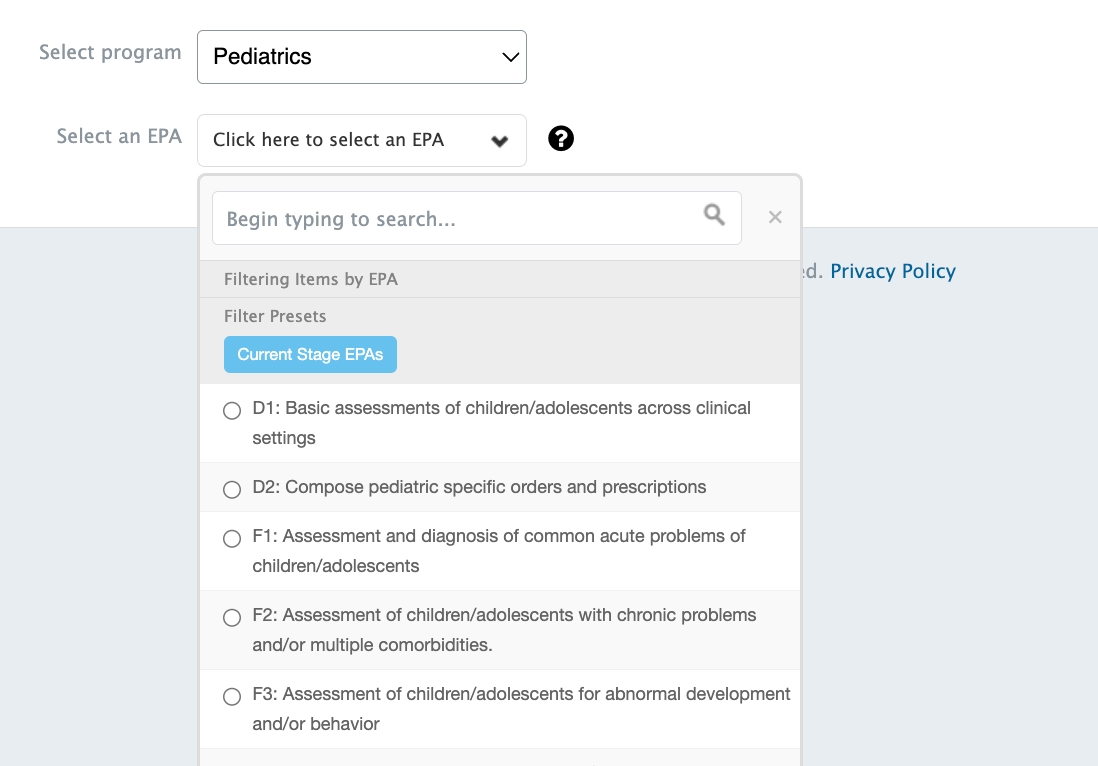

This template organizes the information about EPAs and allows the information to be uploaded to Elentra.

Each program should complete an EPA template with the following information:

Parent(s): This can be left blank because you will provide the full parent path in the Milestones Template. If you prefer to complete this column, you can indicate the parent of the EPA which is the Stage (i.e., D, F, C, P)

Code: Indicate the EPA code (e.g., C1, C2, C3). Note that there is no space between the letter and number in the EPA Code.

EPA codes should be recorded in uppercase letters and include the learner stage of training and the EPA number. Use the learner stage letters outlined below to ensure that the EPAs correctly map to other curriculum tags. D: Transition to Discipline F: Foundations of Discipline C: Core Discipline P: Transition to Practice

Name: Provide the competency text

Description: Provide additional detail about the EPA as required.

Detailed Description: Not every program will use the ‘Detailed Description’ column.

Save your file as a CSV.
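A quick check of EPA codes before upload might look like the sketch below. It encodes the rules stated above (an uppercase stage letter D, F, C or P followed directly by the EPA number, with no space) and additionally assumes the number is a positive integer without leading zeros; that last point and the function name `is_valid_epa_code` are assumptions made for illustration.

```python
import re

# One stage letter (D, F, C, P) followed immediately by the EPA number.
EPA_CODE = re.compile(r"[DFCP][1-9][0-9]*")


def is_valid_epa_code(code: str) -> bool:
    """Return True when the whole string matches the stage-letter-plus-number pattern."""
    return EPA_CODE.fullmatch(code) is not None


checks = {code: is_valid_epa_code(code) for code in ["C1", "C 1", "c1", "P12", "X3"]}
```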

The tools to support competency-based education in Elentra were significantly updated in ME 1.24. Whereas users were previously restricted to a rigid curriculum structure to fit the requirements of Canadian post-graduate medical programs, the new Curriculum Framework Builder allows organizations to build a curriculum structure that suits their needs. Once this curriculum structure is populated with curriculum objectives, organizations can take advantage of the learner and program dashboards that were built to accompany CBME.

You can watch recordings about CBE at (login required).

The CB(M)E module in Elentra is optional and is controlled through a database setting (setting: cbme_enabled).

With CBE enabled, an organization will be able to:

create one or more curriculum frameworks and populate organization and course trees (i.e., map a curriculum that includes objectives at multiple levels on multiple paths)

use supervisor, procedure, smart tag and field note form templates to create assessment forms users can initiate on demand (requires some developer assistance to configure workflows),

create rubric and periodic performance assessment (PPA) forms that are linked to the curriculum (can be used on-demand or sent via distributions),

monitor learner progress on a configurable learner dashboard (i.e., determine which curriculum tag sets display on dashboards),

create assessment plans to set assessment requirements for individual curriculum tags,

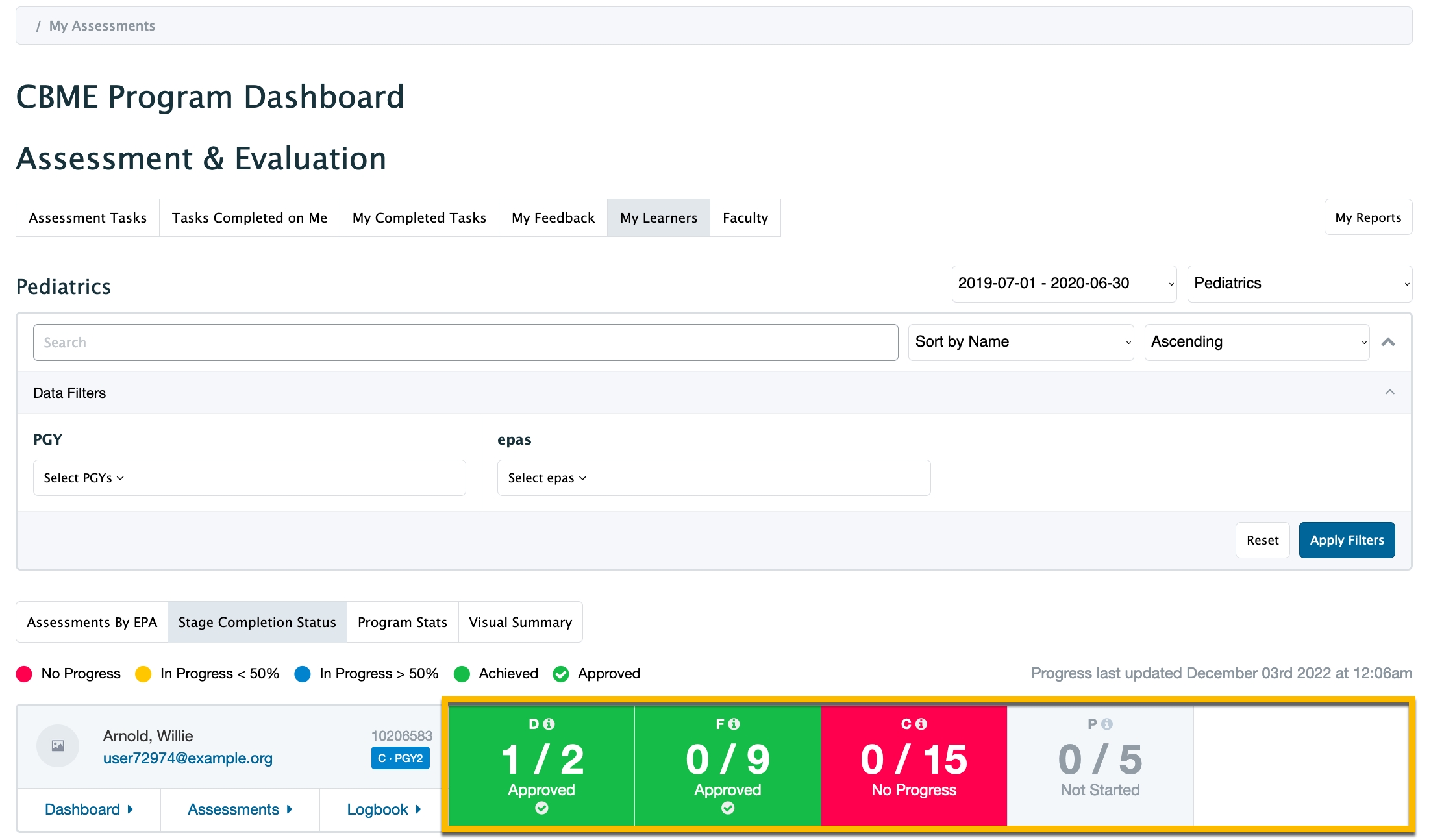

monitor learner progress towards assessment plans through a program dashboard, and

monitor learner performance and EPA task completion rates through optional visual summary dashboards.

In addition to these core tasks, users of CBE tools may opt to:

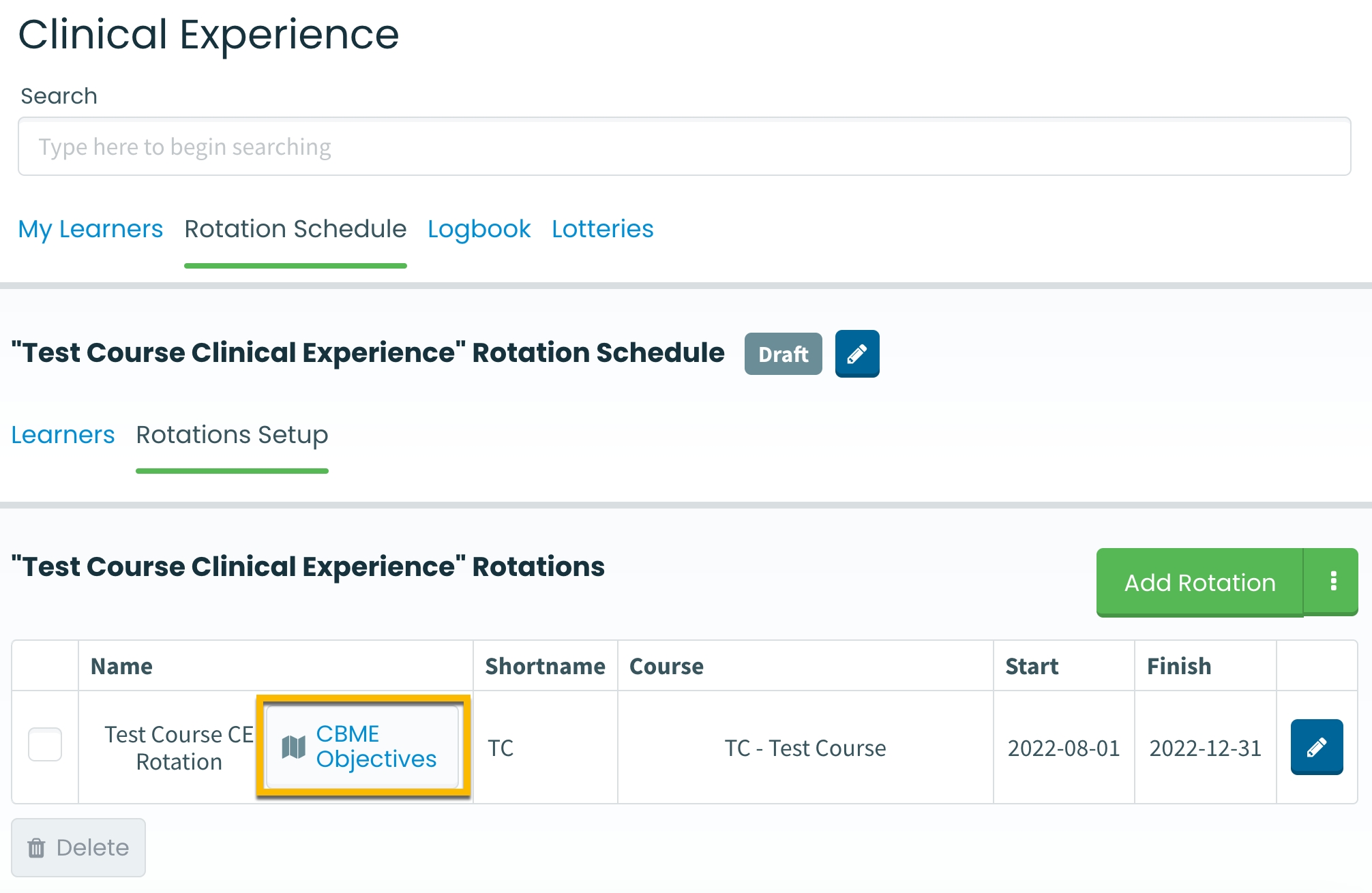

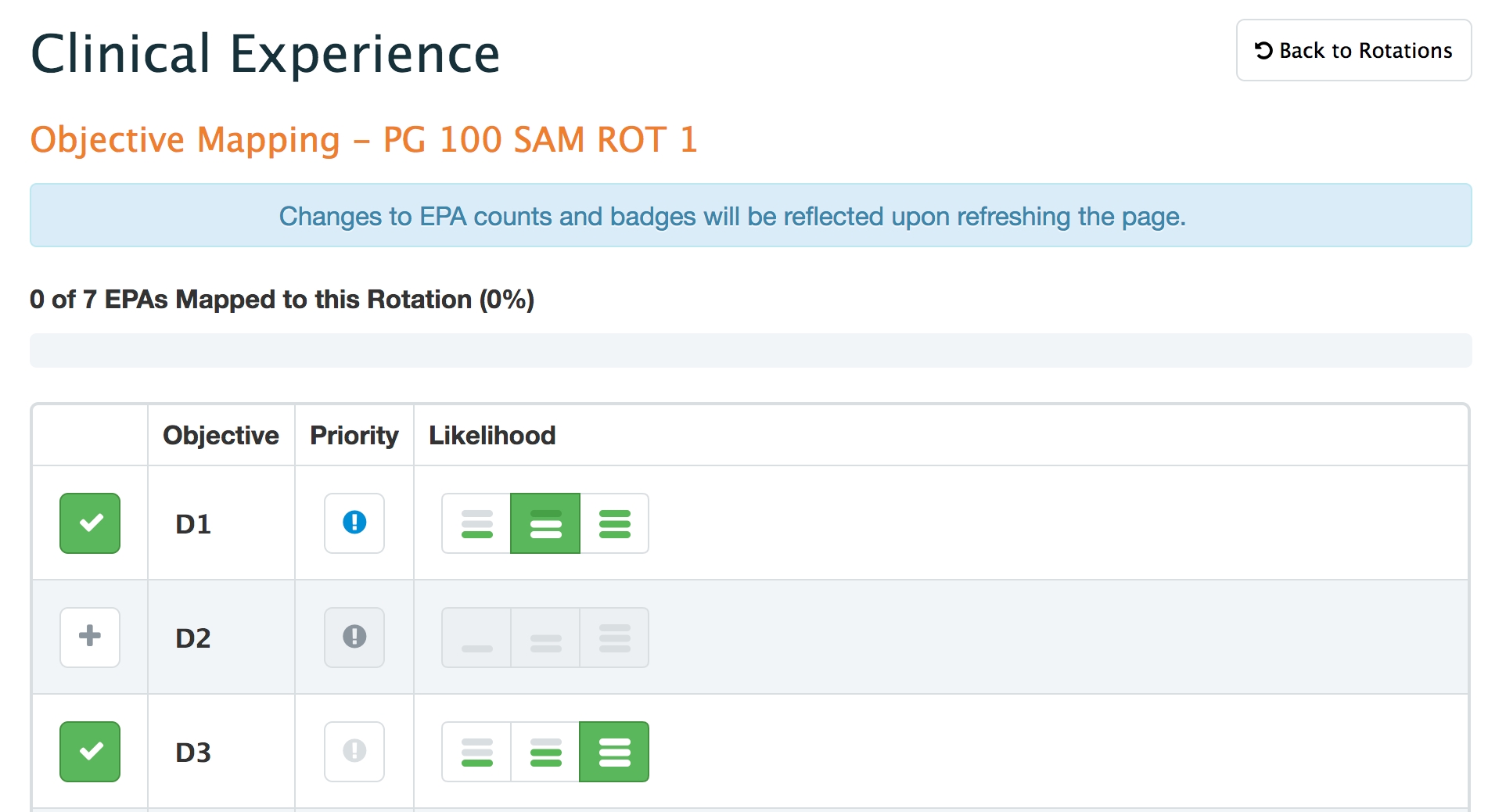

map CBE curriculum tags to specific rotations (requires that a program is using the Clinical Experience Rotation Schedule)

assign faculty members as competence committee members and academic advisors to provide them access to view specific learners,

and log meeting information for each learner.

Be aware that if you enable CBE in an organization, learners will land on their CBE dashboard when they log into Elentra. They still have access to the My Event Calendar but will have to click to view the calendar.

If your organization prefers that learners still land on their My Event Calendar tab when logging in, you'll need a developer to make that change for you.

Faculty and staff users with access to My Learners will also experience a difference because by default they will see a Program Dashboard view when accessing My Learners. If a CBE course does not have a CBE assessment plan set up, most of the Program Dashboard will be empty. However, faculty and staff can still access a learner's assessments and logbook from their name card.

This template organizes the information about Stages and allows the information to be uploaded to Elentra.

Every program can use the same Stages template when they upload their program curriculum.

Parent(s): This column can be left blank.

Code: Provide the code (i.e., D, F, C, P).

Name: Provide the full stage name text

D: Transition to Discipline F: Foundations of Discipline C: Core Discipline P: Transition to Practice

Description: Not required.

Detailed Description: Not required.

Save your file as a CSV.
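If you prefer to generate the Stages file with a script rather than a spreadsheet, the following is a minimal sketch. The column headings mirror the fields described above, but the exact header spelling expected by your Elentra version is an assumption, as are the `stages.csv` file name and the `build_stages_csv` helper.

```python
import csv
import io

# Column headings follow the fields described above; the exact header
# spelling expected by your Elentra version is an assumption.
COLUMNS = ["Parent(s)", "Code", "Name", "Description", "Detailed Description"]

STAGES = [
    ("D", "Transition to Discipline"),
    ("F", "Foundations of Discipline"),
    ("C", "Core Discipline"),
    ("P", "Transition to Practice"),
]


def build_stages_csv() -> str:
    """Return the Stages template as CSV text, one row per stage."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(COLUMNS)
    for code, name in STAGES:
        # Parent(s), Description and Detailed Description are left blank.
        writer.writerow(["", code, name, "", ""])
    return buffer.getvalue()


if __name__ == "__main__":
    # Hypothetical output file name; upload the result as usual.
    with open("stages.csv", "w", newline="", encoding="utf-8") as handle:
        handle.write(build_stages_csv())
```

Compare the generated header row against the template you downloaded before uploading.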

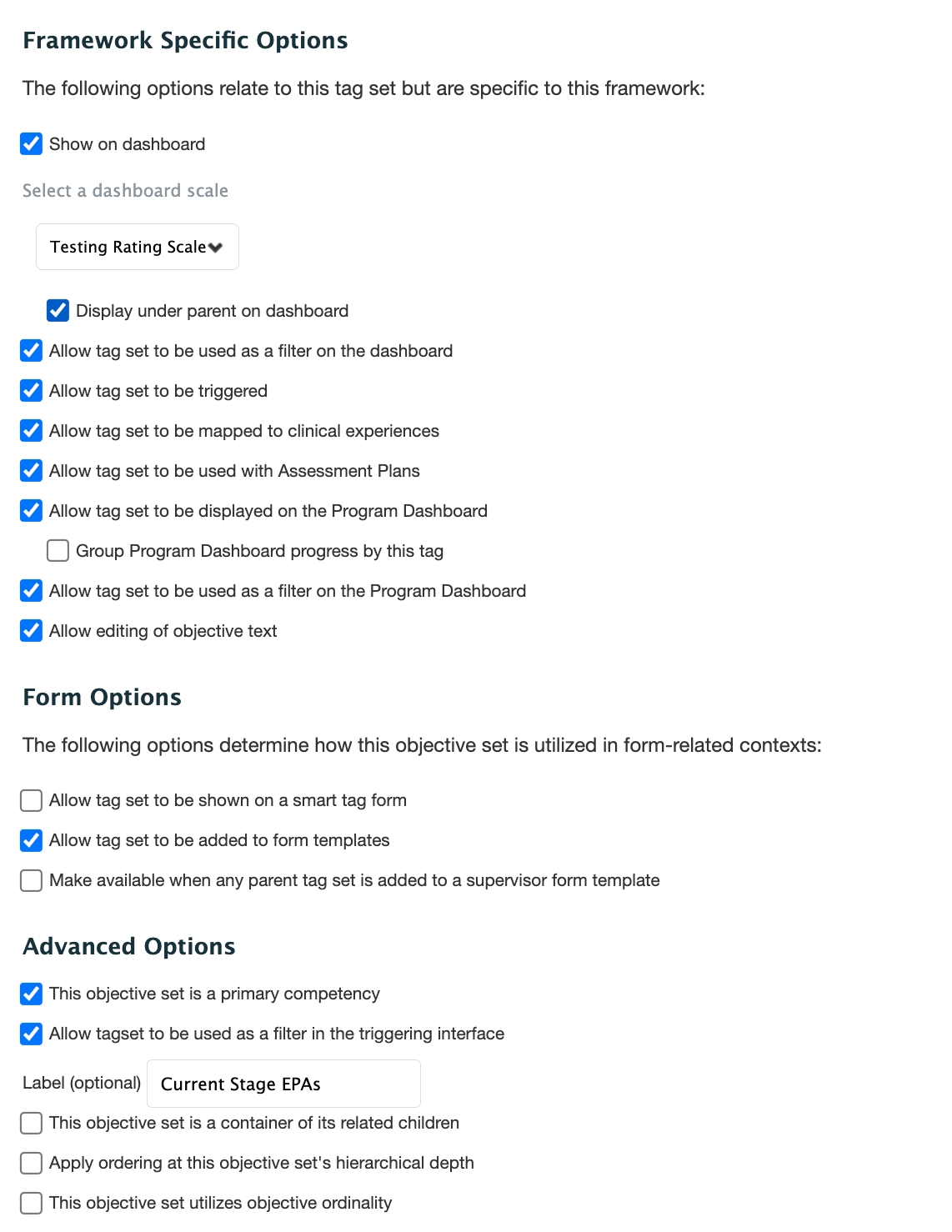

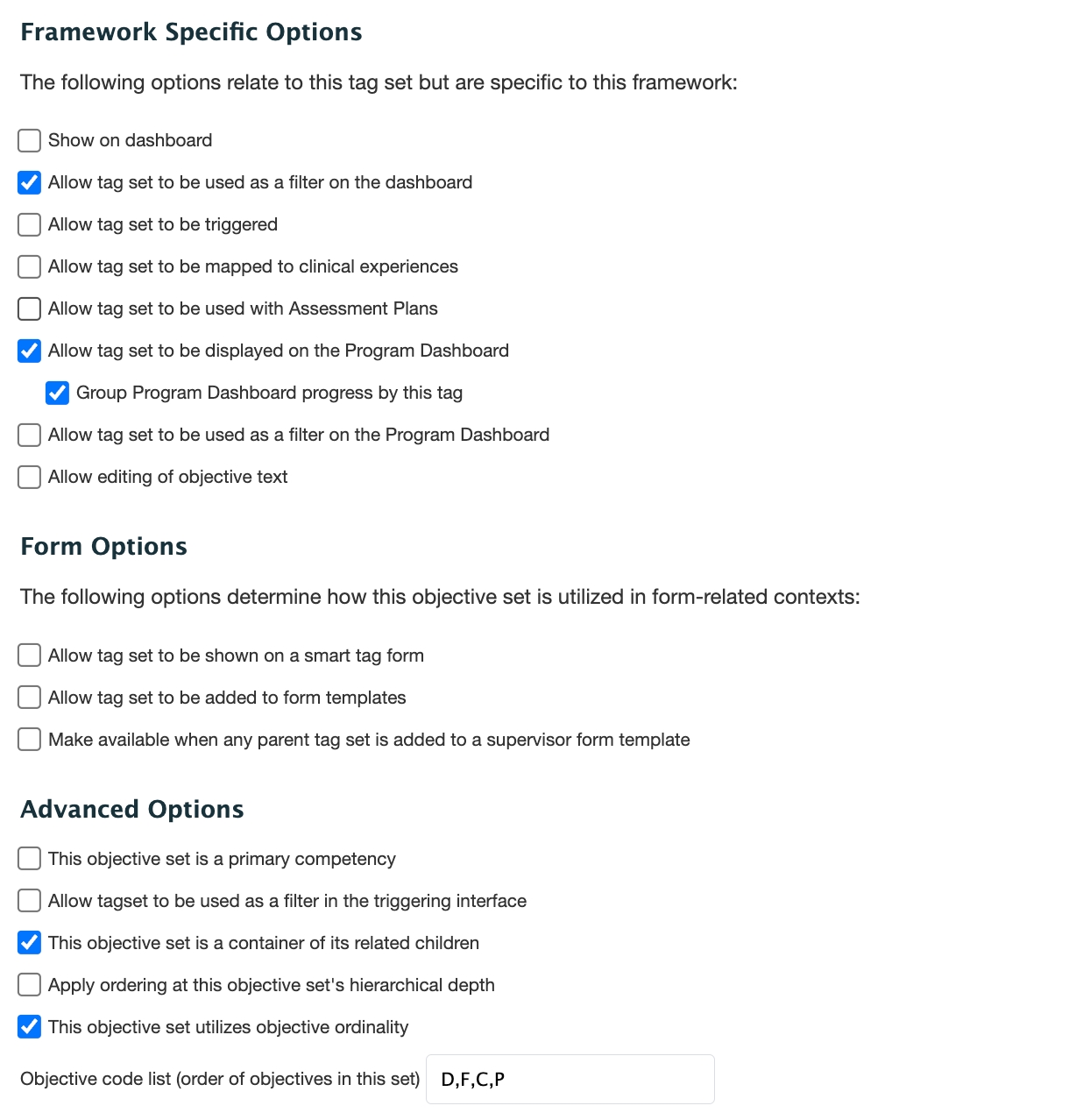

When working with tag sets in the context of the Curriculum Framework Builder, an administrator must configure the settings for each tag set. These settings tell Elentra which tag sets to show on the learner and program dashboards, which tag sets should be accessible as filters and in the Assessment Plan, etc.

Select this to have a tag set display on the learner dashboard.

When this option is selected, a dashboard scale can also be selected. The dashboard scale will display and allow progress reviewers with permission to set a status for the objective (when clicking the status circle). If a user doesn't have permission to set the status for an objective, they will only be able to see the status.

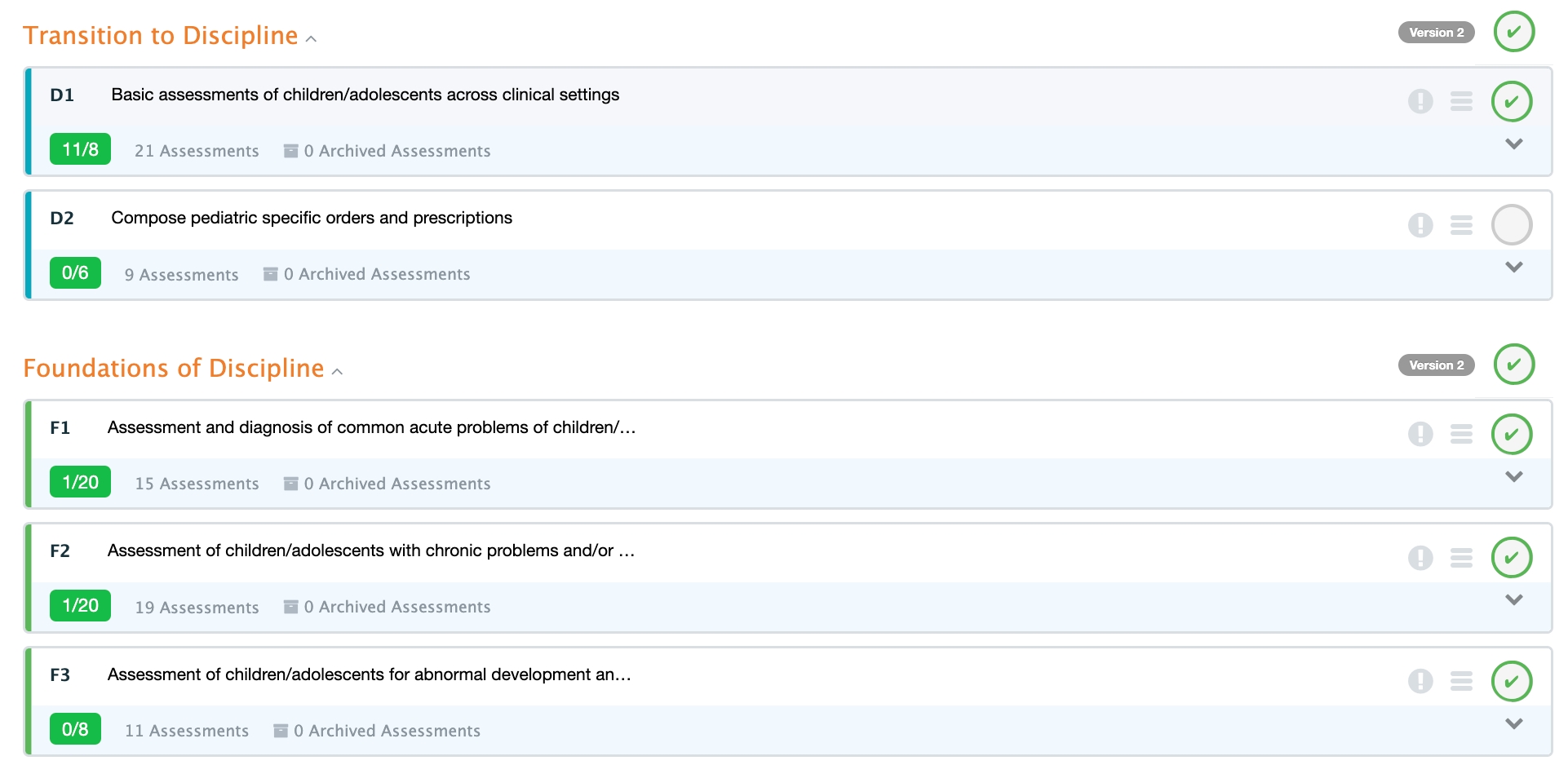

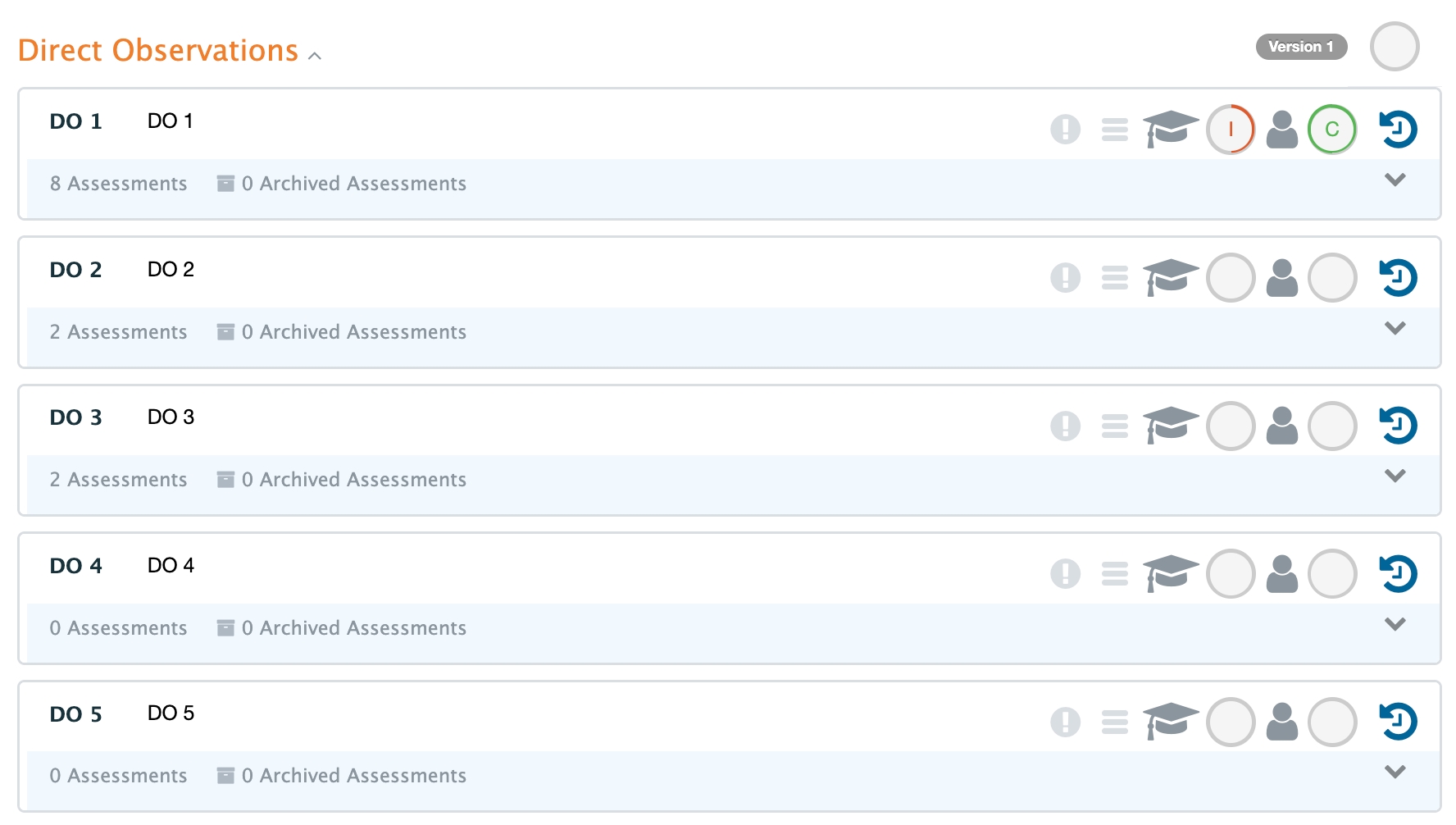

In this example, the tag set "Direct Observations" was set to show in dashboard.

This tag set option will display the objectives under their parents as categories on the dashboard.

"EPAs" are displayed under their parent, "Stages", on the Learner Dashboard

This option will add the tag set as a filter on the learner dashboard.

This option should be selected if the tags will be used when triggering on-demand assessments.

This option allows the tag set to be mapped to clinical experiences (i.e., rotations).

This option should be selected if the tag set is to be used in the assessment plan builder.

This option will allow the tag set to be displayed on the program dashboard.

This option will group children of this tag set on the program dashboard.

This option will use the tag set as a filter on the program dashboard.

This option does not currently impact the user interface in any way.

The intention is to allow users to edit curriculum tags at the Course level to accommodate unique course/program needs. This has not yet been implemented.

This option will add this tag set as a smart tag item on the smart tag form templates. Smart tag items will display the mapping of the triggered branch after the form has been generated.

This option will allow this tag set to be used on CBE form templates (Supervisor Form, Procedure Form, Field Note Form).

This option will add this tag set as a mapped objective on Supervisor form templates. You would primarily use this option when you have children of a parent and you want to add the parent tag to a Supervisor form and have the child tags automatically pulled into the form for use.

This is meant to show an EPA; where we expect to show EPA related information, this option allows the objective set to be used in its place.

This is also important to select as the child of a primary objective (example: Parent - Stages, Child - EPAs).

You will want to select this to ensure all children and grandchildren of this tag set/objective set will be displayed in the objective tree mapping for your CBE course.

This option sets the tag set as a filter when initiating tasks on-demand.

If the objective is a primary competency, this filter will display the set of objectives that the learner currently has in progress (e.g., display EPAs by the current stage). When this option is selected, name the filter.

This option may be used if your curriculum uses stages. For example, your learners must complete a foundations stage before they move into a core stage.

This option specifies that ordered objectives must start at the depth of this tag set. This is relevant when multiple types appear at the same depth. The tree in the example below is the framework, not actual branches of objectives. If the order for B is set to 2, the order for C is set to 1, and D is left unset, then the relevant interfaces (currently only Smart Tag triggering) would render them in this order: C, B, D.

Use Case

Use this option when two objectives live on the same level, both are triggerable, and you would like to specify the order in which they are displayed. This is relevant to smart tag form triggering.
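The ordering rule described above (explicit order values first, unset entries last) can be sketched as follows. This only illustrates the described behaviour and is not Elentra's implementation; the `display_order` helper is hypothetical, and how Elentra breaks ties between equal or unset values is an assumption (here, original order is kept).

```python
from typing import Optional


def display_order(orders: dict[str, Optional[int]]) -> list[str]:
    """Sort tag sets by their order value; unset (None) entries go last."""
    return sorted(
        orders,
        # The first key element pushes unset entries to the end; the
        # second sorts the remaining entries by their order value.
        key=lambda name: (orders[name] is None, orders[name] or 0),
    )


print(display_order({"B": 2, "C": 1, "D": None}))  # ['C', 'B', 'D']
```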

Clicking this option allows you to enter the order of the objective codes on each tag in this tag set, in the order that the learner will complete it. In the case of the Stages tag set, enter D,F,C,P. This indicates that the learner would complete the D, F, C, P objective codes in this tag set, in this order.

This applies to curriculum versioning.

Go to Admin > Manage Curriculum.

Click Curriculum Framework Builder in the left sidebar.

Click Add Framework on the right.

Provide a title and click Add Framework.

The framework will be created and you can scroll to the bottom of the list of frameworks to find it.

Click on the framework name to open it and configure its tag sets.

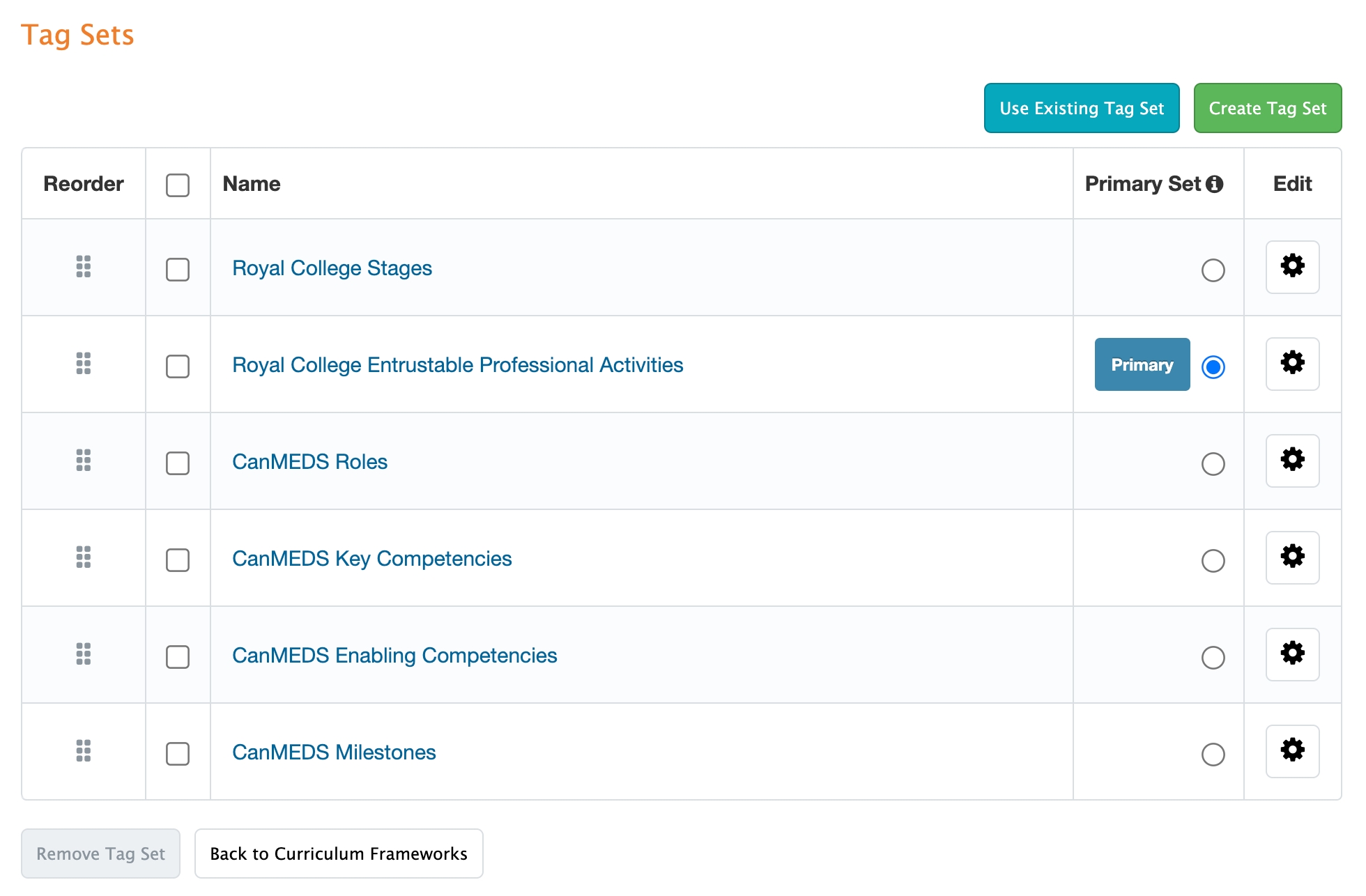

Because the CBME auto-setup tool has run, all tag sets required for an RC program should already exist. Click Use Existing Tag Set, then select the appropriate existing objective set and click Import. Build your curriculum framework as follows:

Next, from the Tag Sets tab, click on each tag set to configure its tag set options.

For Royal College Stages:

Set as applicable to All Courses

Framework Specific Options

Allow tag set to be used as a filter on the dashboard

Group Program Dashboard progress by this tag

Allow tag set to be used as a filter on Program Dashboard

Form Options

None

Advanced Options

This objective set is a container of its children

This objective set uses objective ordinality (set as D,F,C,P)

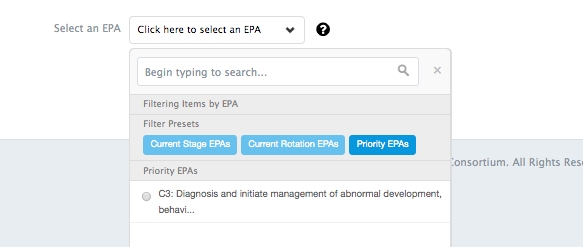

For Entrustable Professional Activities:

Set as applicable to All Courses

Framework Specific Options

Check all EXCEPT 'Group Program Dashboard progress by this tag'

Form Options

Check 'Allow tag set to be added to form templates'

Advanced Options

Check 'This objective set is a primary competency'

Check 'Allow tagset to be used as a filter in the triggering interface'

Set Label as "Current Stage EPAs"

For Roles:

Set as applicable to All Courses

Make no additional selections

For Key Competencies:

Set as applicable to All Courses

For Enabling Competencies:

Set as applicable to All Courses

For Milestones:

Set as applicable to All Courses

Framework Specific Options

Check 'Allow tag set to be used as a filter on the dashboard'

Form Options

Check 'Make available when any parent tag set is added to a supervisor form template'

Advanced Options

None

After all required tag sets are configured, click the Relationships tab. Click on each tag set to define its relationship. Set the relationships between tag sets as follows:

Create a course in Manage Courses.

Follow the tab flow under the Configure CBME tab in the course under Manage Courses to upload curriculum tags.

| Code | Name |
| --- | --- |
| DOCC1 | Care of Adults |
| DOCC2 | Maternity & Newborn |
| DOCC3 | Children & Adolescents |
| DOCC4 | Care of the Elderly |

| Parent(s) | Code | Name |
| --- | --- | --- |
| DOCC1-EPA1-POE6 | PTYPE1 | Integumentary Procedure |
| DOCC1-EPA2-POE6, DOCC1-EPA3-POE6 | PTYPE2 | Local Anaesthetic Procedures |
| DOCC1-EPA3-POE6, DOCC1-EPA4-POE6 | PTYPE3 | Eye Procedures |
| DOCC1-EPA4-POE6 | PTYPE4 | Ear Procedures |
| DOCC1-EPA2-POE6, DOCC1-EPA3-POE6 | PTYPE5 | Nose and Throat |

| Parent(s) | Code | Name |
| --- | --- | --- |
| DOCC1 | EPA1 | Care of the Adult with a Chronic Condition |
| DOCC1 | EPA2 | Care of the Adult with Minor Episodic Problem |
| DOCC1 | EPA3 | Care of the Adult with Acute Serious Presentation |
| DOCC1 | EPA4 | Care of the Adult with Multiple Medical Problems |
| DOCC1 | EPA5 | Performing a Periodic Health Review of an Adult |

| Parent(s) | Code | Name |
| --- | --- | --- |
| EPA1, EPA2, EPA3, EPA4, EPA5 | POE1 | Hypothesis Generation |
| EPA1, EPA2, EPA3, EPA4, EPA5 | POE2 | History |
| EPA1, EPA2, EPA3, EPA4, EPA5 | POE3 | Physical Examination |
| EPA1, EPA2, EPA3, EPA4, EPA5 | POE4 | Diagnosis |
| EPA1, EPA2, EPA3, EPA4, EPA5 | POE5 | Investigation |
| EPA1, EPA2, EPA3, EPA4, EPA5 | POE6 | Procedural Skills |

| Code | Name |
| --- | --- |
| DOCC1 | Care of Adults |
| DOCC2 | Maternity & Newborn |
| DOCC3 | Children and Adolescents |

| Parent(s) | Code | Name |
| --- | --- | --- |
| DOCC1 | EPA1 | Care of the Adult with Chronic Condition |
| DOCC2, DOCC3 | EPA2 | Care of the Adult with Minor Episodic Problem |
| DOCC3 | EPA3 | Care of the Adult with Acute Serious Presentation |
| DOCC3 | EPA4 | Care of the Adult with Multiple Medical Problems |

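| Parent(s) | Code | Name |
| --- | --- | --- |
| DOCC1-EPA1 | POE1 | ... |
| DOCC1-EPA1, DOCC2-EPA2 | POE2 | ... |
| DOCC2-EPA2 | POE3 | ... |
| DOCC2-EPA2 | POE4 | ... |
| DOCC3-EPA2 | POE5 | ... |
| DOCC3-EPA3 | POE6 | ... |
| DOCC3-EPA4 | POE7 | ... |
| DOCC3-EPA4 | POE8 | ... |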

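| Parent(s) | Code | Name |
| --- | --- | --- |
| DOCC1-EPA1 | POE1 | ... |
| DOCC1-EPA1, DOCC2-EPA2 | POE2 | ... |
| DOCC2-EPA2 | POE3 | ... |
| DOCC2-EPA2 | POE4 | ... |
| DOCC3-EPA2 | POE5 | ... |
| DOCC3-EPA3 | POE6 | ... |
| DOCC3-EPA4 | POE7 | ... |
| DOCC3-EPA4 | POE8 | ... |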

| Setting | Description |
| --- | --- |
| cbme_enabled | Controls whether an organization has CBME enabled or not. |
| cbme_standard_kc_ec_objectives | Controls whether you give courses/programs the option to upload program-specific key and enabling competencies. |
| allow_course_director_manage_stage | Enable to allow course directors to manage learner stages (in addition to Competence Committee members). |
| allow_program_coordinator_manage_stage | Enable to allow program coordinators to manage learner stages (in addition to Competence Committee members). |
| cbme_memory_table_publish | |
| cbme_enable_tree_aggregates | |
| cbme_enable_tree_aggregate_build | |
| cbme_enable_tree_caching | |
| default_stage_objective | Must have information entered for CBME to work. Set to global_lu_objectives.objective_id of the first stage of competence (e.g., Transition to Discipline). |
| cbme_enable_visual_summary | Enables additional views from the program dashboard. |
| cbe_versionable_root_default | Default versionable root for trees. |
| cbe_tree_publish_values_per_query | Number of nodes to be inserted in bulk into a tree table. |
| cbe_smart_tags_items_autoselect | Determines whether smart tag items should be pre-filled on trigger. |
| cbe_smart_tags_add_associated_objectives | Adds the associated objectives item to smart tag forms on trigger. |
| cbe_smart_tags_items_mandatory | Determines whether smart tag items are required. |
| cbe_smart_tags_items_assessor_all_objectives_selectable | Determines whether assessors can view all smart tag objectives in the learner tree, rather than the objectives in the learner tree. |
| cbe_smart_tags_items_target_all_objectives_selectable | Determines whether targets can also see all selectable objectives, not just the ones applied via the Smart Tag form trigger process. |
| cbe_smart_tags_items_all_objectives_selectable_override | Overrides the target and assessor *_all_objectives_selectable settings, if set. |
| cbe_dashboard_allow_learner_self_assessment | Determines whether learners can provide a self-assessment on dashboard objectives. |
| learner_access_to_status_history | Determines whether learners can see their status history, i.e., the "epastatushistory page" where rating scale updates and advisor comments/files are displayed. |

All templates you upload to the system must be in .csv format.

If you are working in Excel or another spreadsheet manager, use the “Save as” function to select and save your file as a .csv.

To upload your files, either search for or drag and drop the file into place. You'll often see a green checkmark when your file is successfully uploaded.

If you receive an error message make sure that you have:

Deleted any unused lines. This is especially relevant in templates with pre-populated columns including the contextual variables template, and enabling competencies mapping template.

Completed all required columns. If a column is empty for a specific line the file may fail to upload.

If you've imported all CBME data for a program but you are not able to see EPA maps in the EPA Encyclopedia or in the Configure CBME tab, double check that you've uploaded your files without spaces between the EPA Code letter and number, nor between the CanMEDS Role and Key Competency number. Having spaces in the incorrect places will prevent the system from retrieving the required information to produce maps.
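Before uploading, you can scan your codes for stray spaces with a short script. The sketch below is a hedged illustration: the code shapes (EPA codes such as "C1", key competency codes such as "ME1") are inferred from the examples in this guide, and `find_bad_codes` is a hypothetical helper, not part of Elentra.

```python
import re

# Assumed shapes, based on the examples in this guide: EPA codes such as
# "C1" (stage letter + number) and key competency codes such as "ME1"
# (CanMEDS role letters + number), with no spaces in between.
EPA_CODE = re.compile(r"[DFCP]\d+")
KEY_COMPETENCY_CODE = re.compile(r"(ME|CM|CL|LD|HA|SC|PR)\d+")


def find_bad_codes(codes, pattern):
    """Return the codes that do not fully match the expected pattern."""
    return [code for code in codes if not pattern.fullmatch(code)]


print(find_bad_codes(["C1", "C 2", "F3"], EPA_CODE))                # ['C 2']
print(find_bad_codes(["ME1", "CL 2", "HA3"], KEY_COMPETENCY_CODE))  # ['CL 2']
```

Adjust the patterns if your program uses a different coding scheme.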

While you can correct minor typos and add additional mapping information through the user interface, you cannot delete or undo mapping between tag sets.

The option to reset all CBME data for a program was removed in a previous Elentra version.

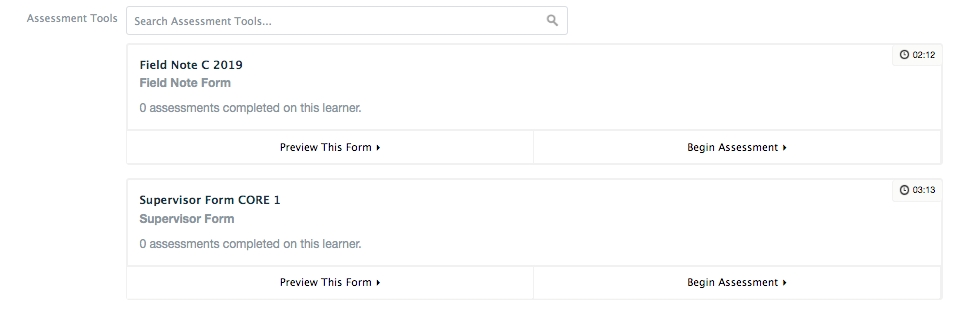

The process to build new assessment tools after curriculum versioning is relatively straightforward. Administrators need to build new assessment tools for new EPAs or EPAs that have changed. Assessment tools will be carried over for all EPAs that were marked as "not changing."

Navigate to Admin > Assessment and Evaluation.

Click ‘Form Templates’ on the tab menu.

Click the green ‘Add Form Template’ button in the top right and a pop-up window will appear.

Type in a form name and select the form type from the dropdown menu. Select the appropriate form template and course (e.g., ‘Supervisor Form’, Pediatrics).

Now that you have two (or more) curriculum versions in the system, the Form Template Builder will default to loading the most recent version.

If you want to build new forms for learners using a previous version, change the EPA Version to what you want, click 'Save', and Elentra will load the appropriate EPAs.

Complete the sections of the form builder. This is the same as any previous form building but see details below as needed.

Publish Form Template

Click 'Publish' to make your template available for use. The forms will be available within the hour.

On each form template you create you’ll notice a greyed out area at the bottom including Next Steps, Concerns, and a place for feedback. This is default form content that cannot be changed.

Once a form template has been published, you can rearrange the template components for each form; however, you cannot make changes to the scales or contextual variables. To make these changes, copy the form template and create a new version.

Within a given form, you can only tag curricular objectives (e.g., EPAs or milestones) from the same curriculum version. To ensure that you do not accidentally add an EPA from a different version, you must create the form first and then "Create & Attach" new items to the form.

Click Admin > Assessment & Evaluation.

Click on Forms from the subtab menu.

Click Add Form.

Provide a form name and select PPA Form or Rubric/Flex Form from the Form Type dropdown menu; then click Add Form.

Now that you have two (or more) curriculum versions in the system, the Form Editor will default to loading the most recent version. Under "EPA Version", simply select the appropriate version. Click Save.

If you want to build new forms for learners using Version 1, simply change the EPA Version to Version 1 and it will load the appropriate EPAs.

To use the "Programs" filter in the form bank, you need to add Program-level permissions to each form, so we recommend that you do so.

Click "Individual", change to "Program"

Begin typing in your program name in the "Permissions" box.

Click on your program to add it.

Complete the sections of the form builder. This is the same as any previous form building but see details below as needed.

Publish Form Template

Click 'Publish' to make your template available for use. The forms will be available within the hour.

The default Feedback and Concerns sections will be added when the form is published.

Rubrics are assessment tools that describe levels of performance in terms of increasing complexity with behaviourally anchored scales. In effect, performance standards are embedded in the assessment form to support assessors in interpreting their observations of learner performance.

If you create a rubric form and at least one item on the form is linked to an EPA the form will be triggerable by faculty and learners once published. Results of a completed rubric form are included on a learner's CBME dashboard information.

Within a given form, you can only tag curricular objectives (e.g., EPAs or milestones) from the same curriculum version. To ensure that you do not accidentally add an EPA from a different version, we recommend you create the form first and then "Create & Attach" new items to the form.

You need to be a staff:admin, staff:prcoordinator, or faculty:director to access Admin > Assessment & Evaluation to create a form.

Click Admin > Assessment & Evaluation.

Click on Forms from the subtab menu.

Click Add Form.

Provide a form name and select Rubric Form from the Form Type dropdown menu; then click Add Form.

Form Title: Form titles are visible to end-users (learners, assessors, etc.) when being initiated on-demand. Use something easily distinguishable from other forms.

Form Description: The form description can be used to store information for administrative purposes, but it is not seen by users completing the form.

Form Type: You cannot change this once you have created a form.

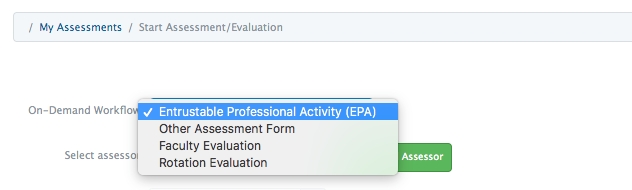

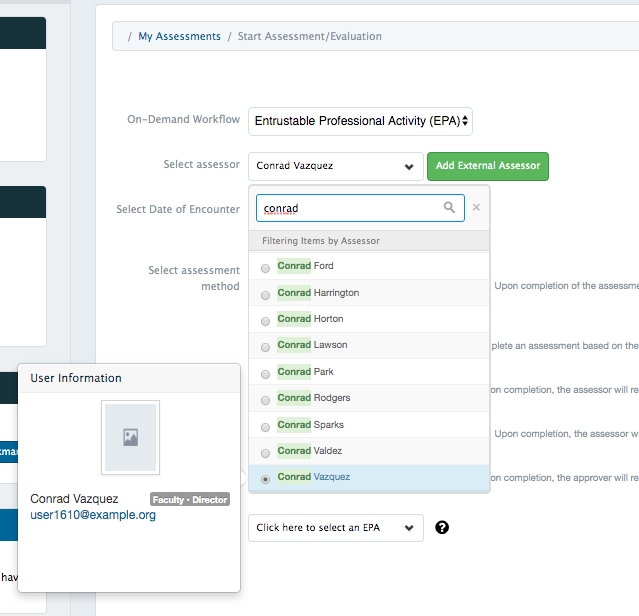

On-Demand Workflow: On-demand workflows enable users to initiate a form on-demand using different workflow options (EPA, Other Assessment Form, Faculty Evaluation and Rotation Evaluation).

EPA: Use for forms tagged to EPAs that you want users to be able to initiate. Contributes to CBME dashboards.

Other Assessment Form: Use for forms that you want users to be able to initiate and complete on demand without tagging to EPAs; or, for tagged forms that you don't want to appear in the EPA list. Only forms with EPAs tagged will contribute to CBME dashboards.

Course: Program coordinators and faculty directors may not have access to multiple courses, while staff:admin users are likely to. If you have access to multiple courses, make sure you've selected the correct course to affiliate the form with.

EPA Version: Select the CBME Version that this form will be used for. After setting the version, you will only be able to tag EPAs from that version to this form.

By default, the Form Editor will load the most recent CBME version. Under "EPA Version", simply select the appropriate version. Click Save. If you want to build new forms for learners using Version 1, simply change the EPA Version to Version 1 and it will load the appropriate EPAs.

Permissions: It is highly recommended that you assign course/program-level permissions to all of your forms, as some filters rely on this setting. Additionally, using a form in a workflow requires that it be permissioned to a course.

Authorship permissions give other users access to edit the form. You can add individual authors or give permission to all administrators in a selected program or organization.

To add individual, program, or organization permissions, click the dropdown selector to select a category, and then begin to type in the appropriate name, clicking on it to add to the list.

You can add multiple individuals, programs, and organizations to the permissions list as needed.

In order to use the "Programs" filter in the form bank and when learners initiate assessments/evaluations, you need to add Program-level permissions to each form.

Select the relevant contextual variables for this form by clicking on the checkbox. Adjust which contextual variable responses should be included by clicking on the gray badge. This allows you to deselect unneeded contextual variable responses which can make the form faster to complete for assessors.

If you want to include an Entrustment Rating on the form, click the checkbox. Select an entrustment rating scale from the dropdown menu. Note that the responses will be configured based on the scale you select. It is also possible that the Item Text will be autopopulated based on the scale you select.

For the optional Entrustment Rating, set requirements for comments, noting that if you select Prompted comments, you should also check off which responses are prompted in the Prompt column. If you use this option and any person completing the form selects one of the checked responses, they will be required to enter a comment. Additionally, if the form is part of a distribution, you'll be able to define how prompted responses should be addressed (e.g. send email to program coordinator whenever anyone chooses one of those response options).

The default Feedback and Concerns items will be added when the form is published.

Add form items by clicking 'Add Items', or click the down arrow for more options.

'Add Free Text' will allow you to add an instruction box.

If you add free text, remember to click Save in the top right of the free text entry area. Any free text entered will display to people using the form.

'Add Curriculum Tag Set' should not be used.

To create and add a new item, click the appropriate button.

Select the Item Type and add any item responses, if applicable.

Tag Curriculum Tags to your newly created item.

In the example below, because you are using a form that is mapped to "Version 2", the curriculum tag sets will be locked to "Version 2". This will ensure that you do not accidentally tag an EPA from a different version.

After you have added items to your form you may download a PDF, and preview or copy the form as needed.

Save your form to return to it later, or if the form is complete, click Publish. You will see a blue message confirming that a form is published. Unlike form templates which require a behind the scenes task to be published, a rubric form will be available immediately.

Rubric forms can also be scheduled for distribution through the Assessment and Evaluation module.

Users can't access the form when initiating an assessment on demand. Why is this happening?

Check that your form is permissioned to a course and has a workflow (e.g. Other Assessment) defined.

My PPA or Rubric Form is not displaying a publish button. Why is this happening?

In order for a PPA or Rubric form to be published, you must have at least one item that is mapped to part of your program's "EPA tree". You will only see the "publish" button appear after you have tagged an item to either an EPA(s) or a milestone(s) within an EPA. After saving the item, you will now see a "Publish" button appear.

Is it a requirement to publish PPA and Rubric forms?

You only need to publish PPAs and Rubric forms if you wish to leverage the EPA/Milestones tagging functionality in the various CBME dashboards and reporting. You are still able to use PPAs and Rubric forms without tagging EPAs or milestones if you only need to distribute them, or select them using the "Other Assessment" trigger workflow. If you want any of the standard CBME items, such as the Entrustment Item, Contextual Variables, or the CBME Concerns rubric, you must tag and publish the form. Keep in mind that the assessment plan builder only supports forms that have the standard entrustment item on them, meaning only published PPA/Rubric forms.

This template organizes the information about Key Competencies and allows the information to be uploaded to Elentra.

Parent(s): This column is optional because you will provide the full parent path in the Milestones template.

If you do choose to populate this column, indicate the parent(s) of the key competency by providing the stage, EPA, and role (e.g., C-C1-PR).

Code: Indicate the key competency code (e.g., ME1, CL2, HA3)

Name: Provide the key competency text

Description: Not required but complete with CanMEDS information as you wish.

Detailed Description: Not required.

Save your file as a CSV.

When coding the Key Competencies remember these required codes for the CanMEDS stages and roles:

Transition to Discipline: D

Foundations of Discipline: F

Core Discipline: C

Transition to Practice: P

Professional: PR

Communicator: CM

Collaborator: CL

Scholar: SC

Leader: LD

Advocate: HA

Medical Expert: ME

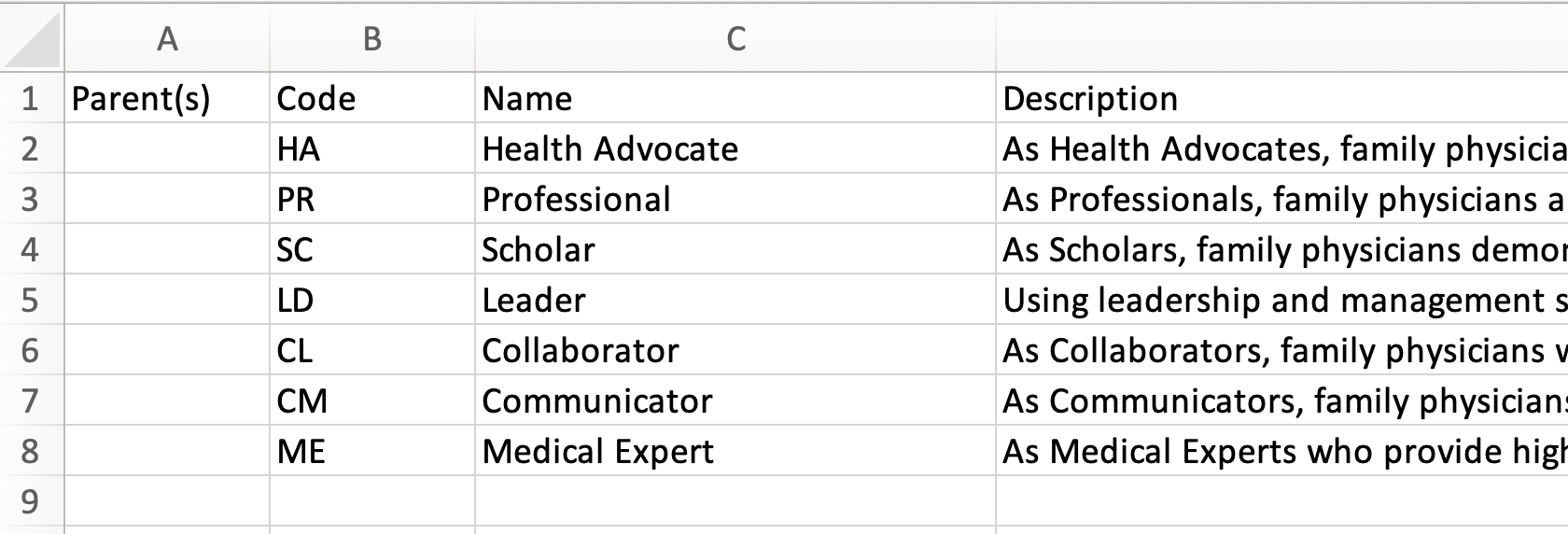

This template organizes the information about Roles and allows the information to be uploaded to Elentra.

Every program can use the same Roles template when they upload their program curriculum.

Parent(s): This can be left blank because you will provide the full parent path in the Milestones Template.

Code: Provide the code for the role

ME, CL, CM, LD, HA, SC, PR

Name: Provide the full role text

ME: Medical Expert

CM: Communicator

CL: Collaborator

LD: Leader

HA: Health Advocate

SC: Scholar

PR: Professional

Description: Not required but complete with CanMEDS information as you wish.

Detailed Description: Not required.

Save your file as a CSV.

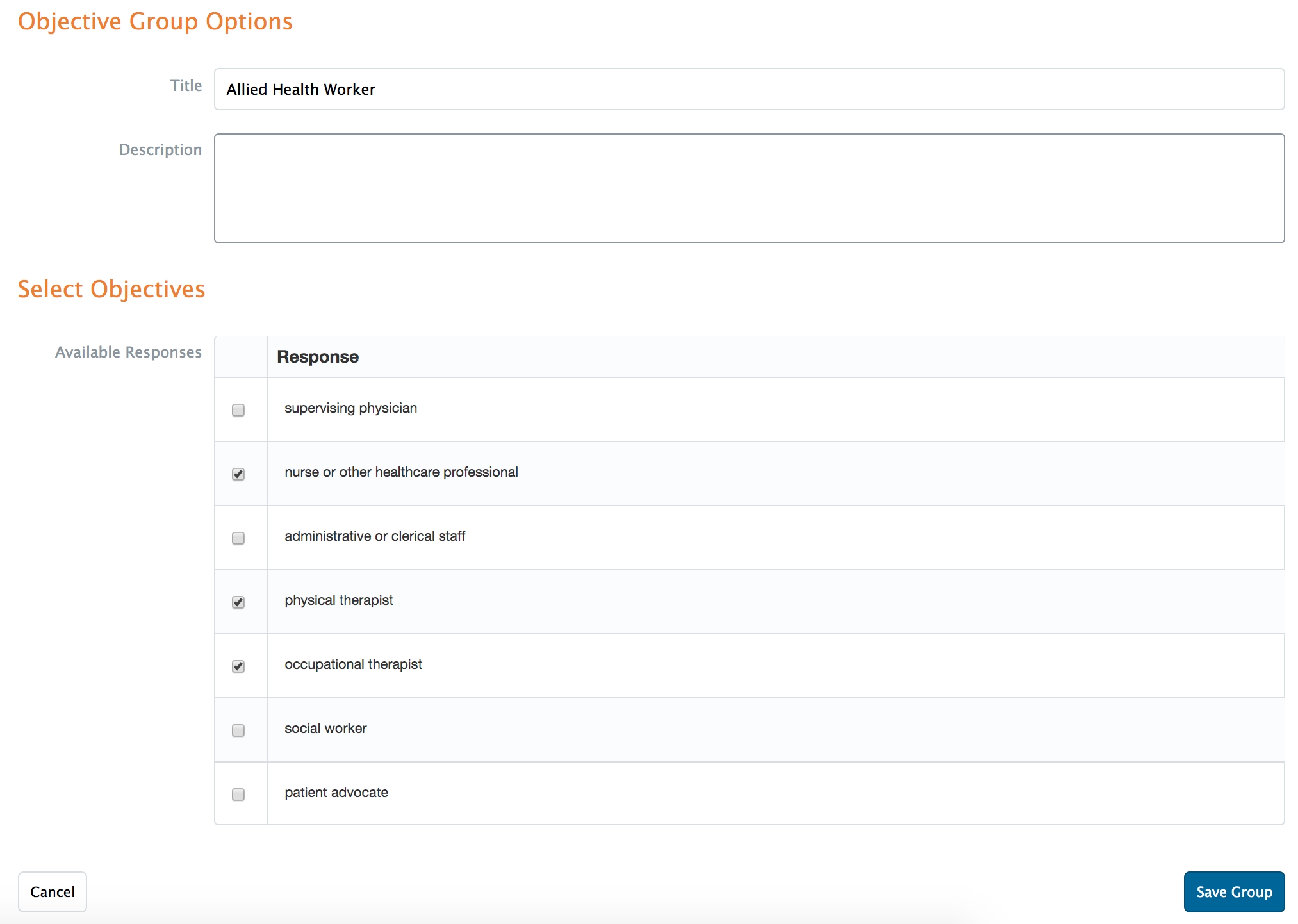

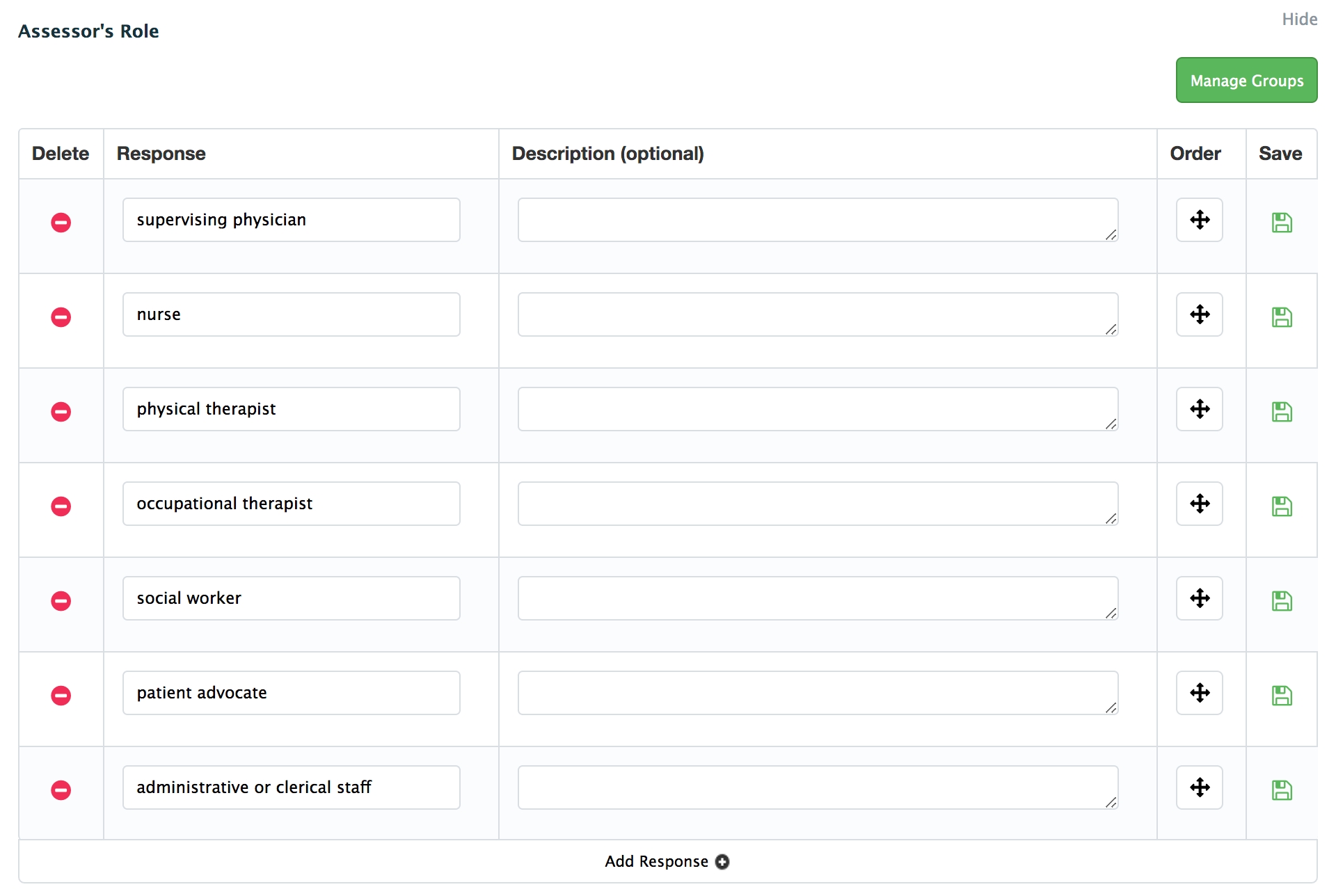

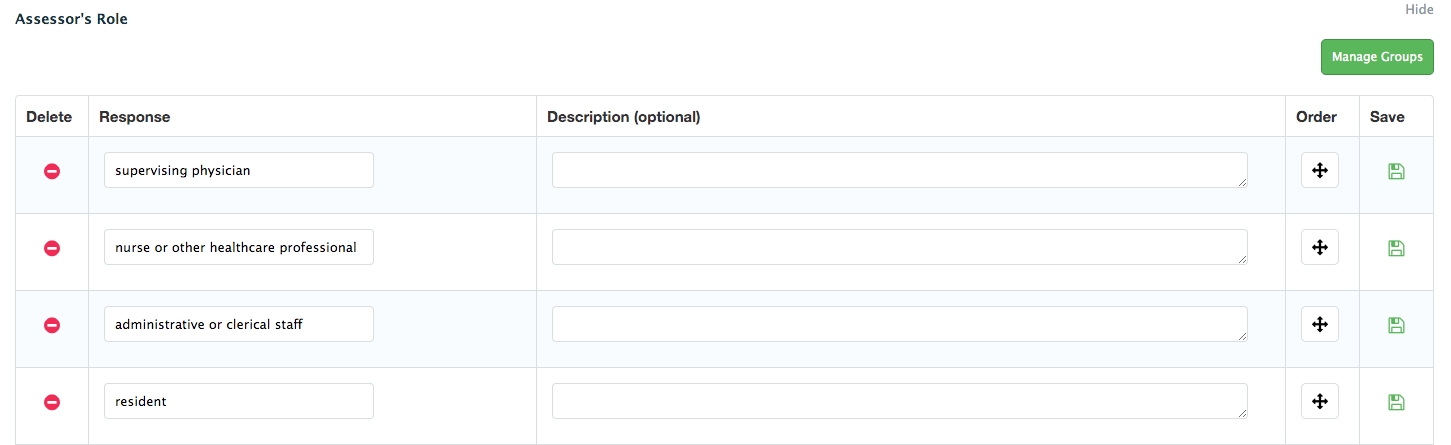

Within a given contextual variable, you are able to create groups of responses. This is leveraged in the assessment plan builder to allow you to specify exactly what requirements learners must meet.

You must be in an administrative role to manage contextual variables.

Click Admin > Manage Courses

Beside the appropriate program name, click the small gear icon, then click "CBME"

Click on the "CV Responses" tab

Click on any contextual variable category to expand the card. To add a new group within that contextual variable, click on the green "Manage Groups" button.

To add a new group, click "Add Group". To edit an existing group, click on the group title.

Add a title for the contextual variable group and an optional description. Check off all responses that you wish to add to the group and then click "Save Group".

After saving, you can now use the Group contextual variable types within the assessment plan builder.

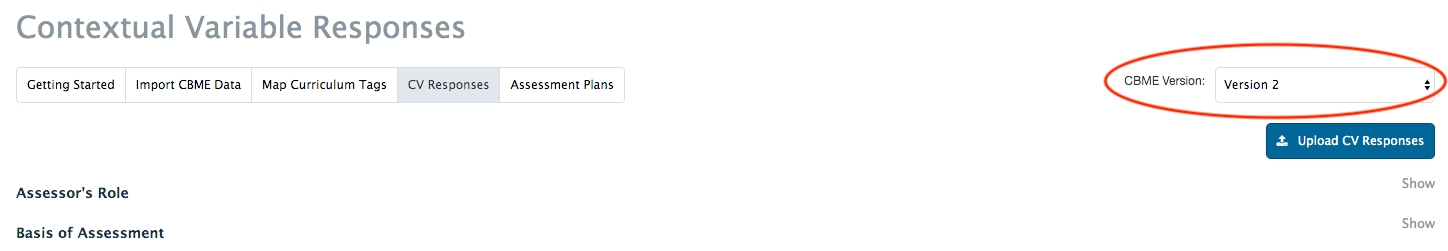

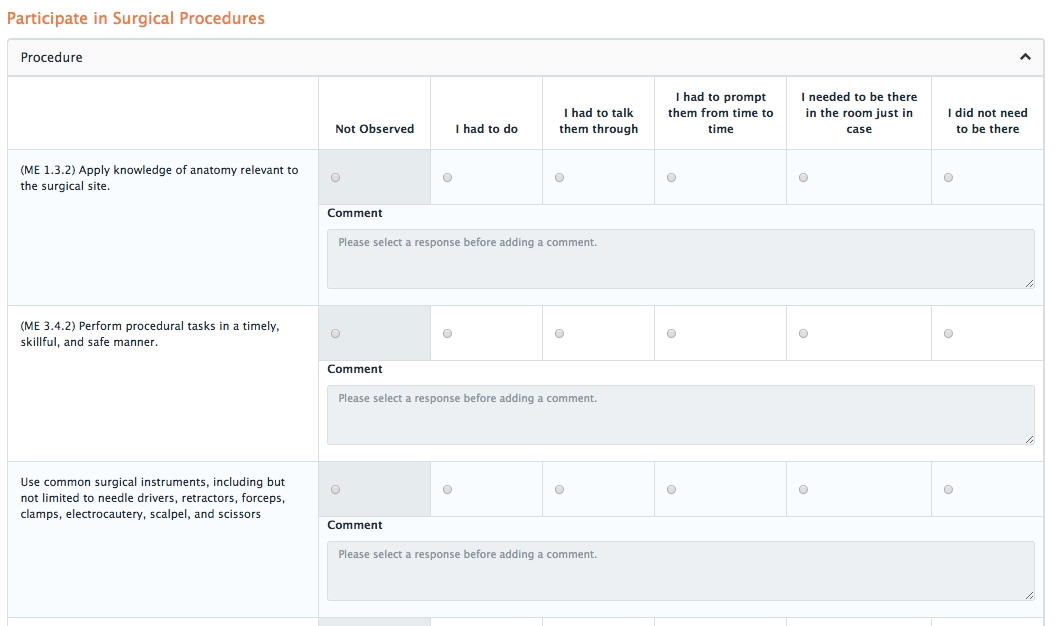

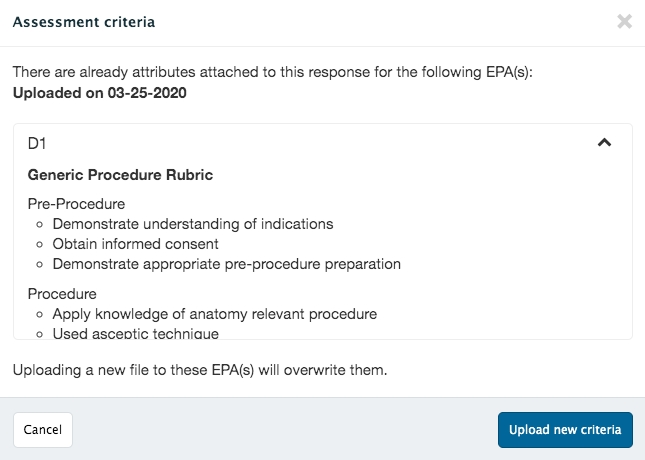

When importing data to create EPA maps, programs are required to upload a contextual variable template to provide contextual variable response options (e.g. case complexity: low, medium, high). In addition to this information, programs will need to add specific details about any procedures included in their contextual variable response options. This additional information, called procedure attributes, plays an important part in building procedure assessment forms. Think of the procedure attributes as assessment criteria (e.g. obtained informed consent, appropriately directed assistants, etc.) for each procedure.

For each procedure in your program, you must define headings, rubrics and list items that may be used to assess a learner completing the procedure. Headings appear at the top of a form, rubric becomes the subtitle of a rubric, and list items are the things that will actually be assessed on the rubric.

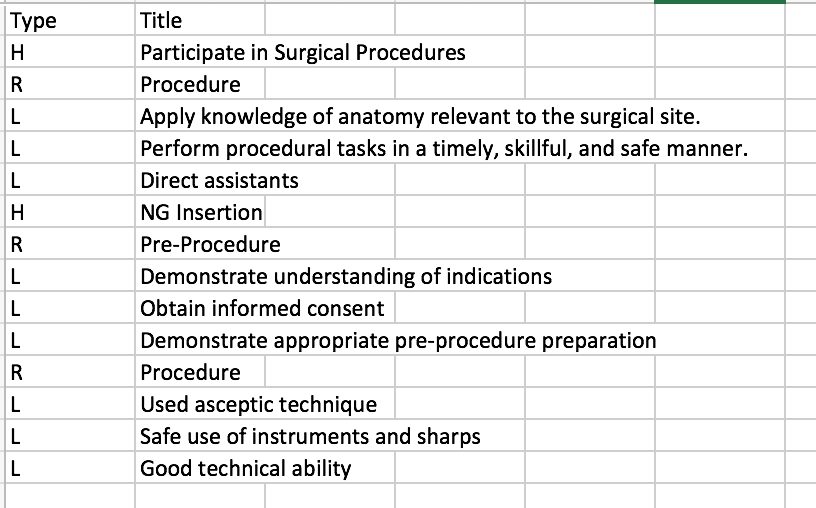

All this information must be stored and uploaded in a CSV spreadsheet that uses two columns: Type and Title.

Within ‘Type’ there are three things you can include:

H to represent heading (e.g. Participate in Surgical Procedures, NG Insertion)

R to represent rubric (e.g. Procedure, Pre-Procedure)

L to represent list items (e.g. Apply knowledge of anatomy)

The three procedure criteria form a hierarchy. A heading can have multiple rubrics, and a rubric can have multiple list items. Arrange the criteria in your spreadsheet to reflect their nesting hierarchies.

In the 'Title' column enter the information to be uploaded.

When complete, save the file in a CSV format.

Sample Procedure Form

On this sample form, the following information was uploaded as procedure attributes:

H: Participate in Surgical Procedures

R: Procedure

L: (ME1.3.2) Apply knowledge... (ME3.4.2) Perform procedural tasks... Use common surgical instruments...

You can use the same file for multiple procedures if they share the same heading, rubric, and list information.
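To check that a procedure attributes file nests correctly before uploading it, you could run something like the sketch below. It assumes that each row belongs to the most recent heading or rubric above it, which is one reading of the nesting rule described above; the `parse_procedure_attributes` helper and the sample rows are illustrative, not part of Elentra.

```python
import csv
import io

# Illustrative rows using the two columns described above.
SAMPLE = """Type,Title
H,Participate in Surgical Procedures
R,Procedure
L,Apply knowledge of anatomy
L,Obtained informed consent
"""


def parse_procedure_attributes(text):
    """Nest rows as heading -> rubric -> list items, rejecting orphans."""
    headings = []
    for line_no, row in enumerate(csv.DictReader(io.StringIO(text)), start=2):
        kind = (row.get("Type") or "").strip().upper()
        title = (row.get("Title") or "").strip()
        if kind == "H":
            headings.append({"title": title, "rubrics": []})
        elif kind == "R":
            if not headings:
                raise ValueError(f"line {line_no}: rubric before any heading")
            headings[-1]["rubrics"].append({"title": title, "items": []})
        elif kind == "L":
            if not headings or not headings[-1]["rubrics"]:
                raise ValueError(f"line {line_no}: list item before any rubric")
            headings[-1]["rubrics"][-1]["items"].append(title)
        else:
            raise ValueError(f"line {line_no}: unknown type {kind!r}")
    return headings


parsed = parse_procedure_attributes(SAMPLE)
print(parsed[0]["rubrics"][0]["items"])
```

A `ValueError` here points to a row that appears before its parent, which is worth fixing before upload.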

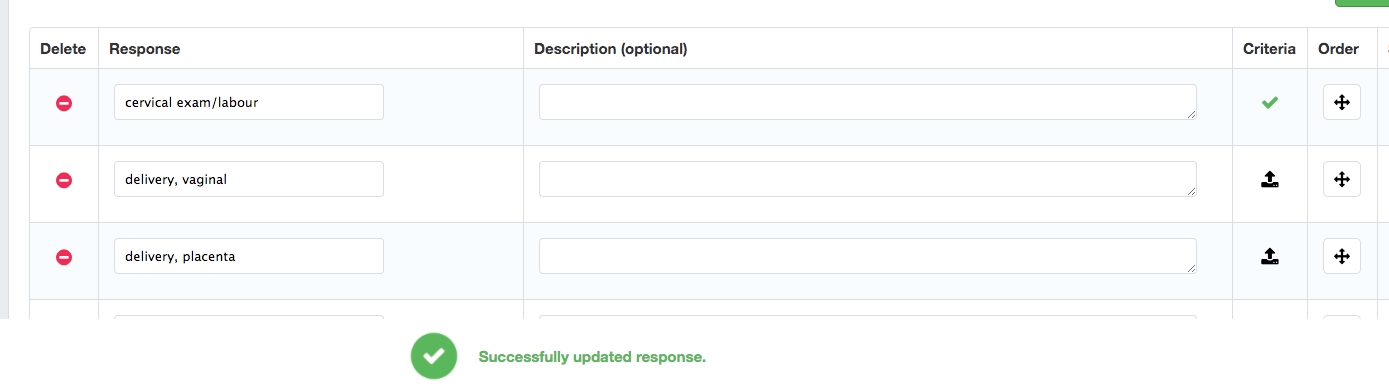

Navigate to Admin > Manage Courses.

From the cog menu beside the course name, select 'CBME.'

From the tab menu below the Competency-Based Medical Education header, click 'CV Responses'.

From the list of contextual variables, click on ‘Procedure’.

Before uploading procedure attributes, make sure you are working with the correct CBME curriculum version and change the version as needed.

Beside each procedure you will see a small black up arrow under the Criteria column.

Click on the arrow to upload a .csv file of information for each procedure.

You must indicate an EPA to associate the procedure attributes with (note that you can select all as needed).

Either choose your file name from a list or drag and drop a file into place.

Click ‘Save and upload criteria’.

You will get a success message that your file has been successfully uploaded. Click 'Close'.

After procedure attributes have been uploaded for at least one EPA you will see a green checkmark in the Criteria column.

Click the green disk in the Save column to save your work. You will get a green success message at the bottom of the screen.

Repeat this process for each procedure and each relevant EPA. Remember, a program can use the same procedure attributes for multiple procedures and EPAs if appropriate.

You can view the procedure attributes already uploaded to a procedure by clicking on the green check mark in the criteria column.

You will see which EPAs have had procedure attributes added and can expand an EPA to see specific details by clicking on the chevron to the right of the EPA.

Navigate to Admin > Manage Courses/Programs.

Search for the appropriate course as needed.

From the cog menu on the right, select 'CBME'.

Click on 'CV Responses' from the tab menu below the Competency-Based Medical Education heading.

Click on a contextual variable to show its existing responses.

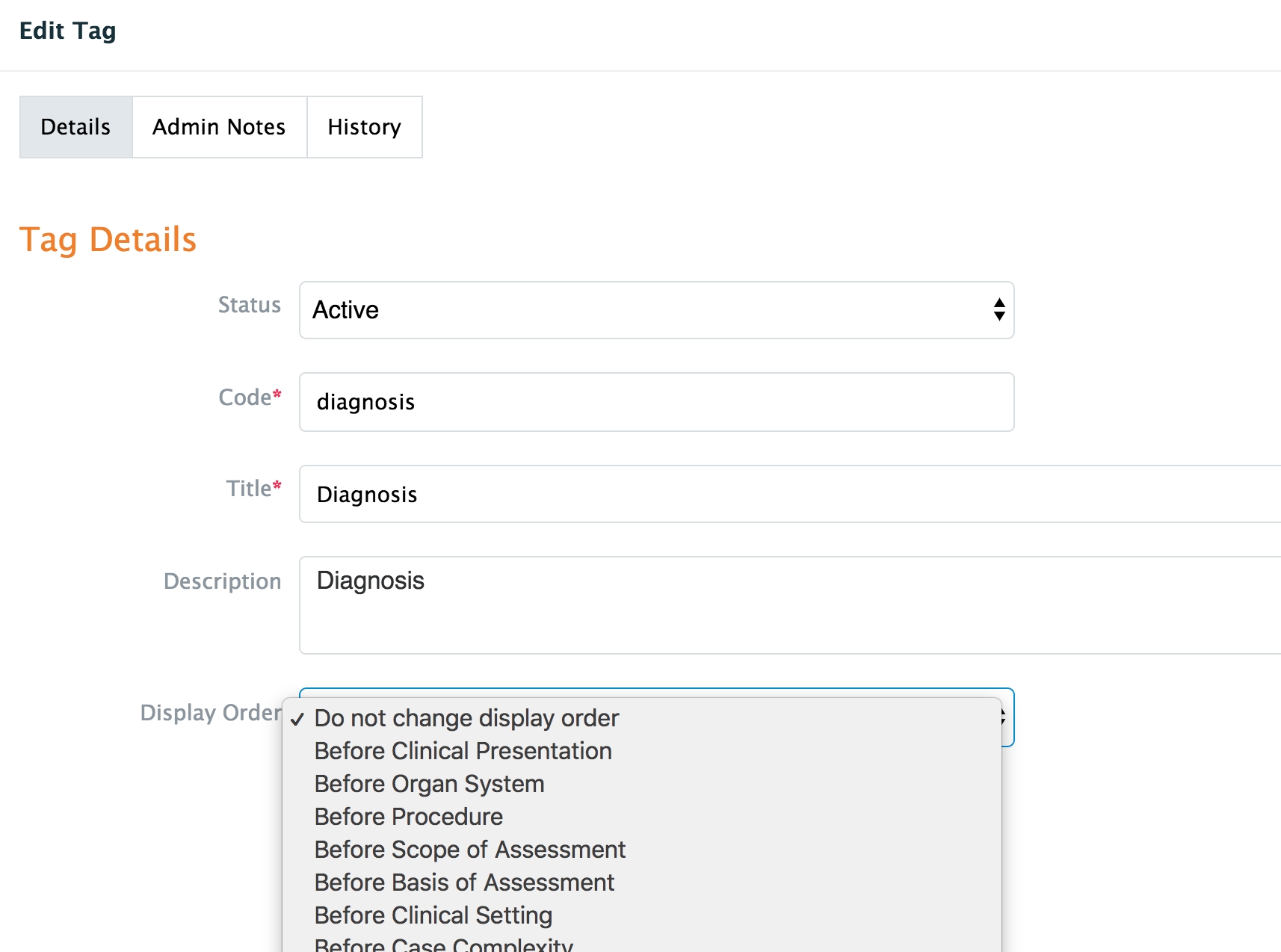

Click on the double arrow in the Order column to drag and drop a response to a new location.

You'll get a green success message that your change has been saved.

If users want to reorder the overall list of contextual variables they can do so from Admin > Manage Curriculum. To change a tag's display order you need to edit the tag by clicking on the pencil icon beside a tag name. Then you need to adjust the display order for each individual tag.

After a program has uploaded an initial set of contextual variable responses, they can be managed through the CBME tab.

Navigate to Admin > Manage Courses/Programs.

Search for the appropriate course as needed and from the cog menu on the right, select 'CBME'.

Click on 'CV Responses' from the tab menu below the Competency-Based Medical Education heading.

To modify the contextual variable responses, click the title of the contextual variable or click 'Show' to the right of the one you want to edit.

To edit an existing response, make the required change and click the green disk icon in the Save column.

To delete an existing response, click the red minus button beside the response.

To add a new contextual variable response, click 'Add Response' at the bottom of the category window. This will open a blank response space at the end of the list. Fill in the required content and save.

If you get a yellow bar across the screen when you try to modify contextual variables it means none have been uploaded for the program you are working in. Return to the Import CBME tab and complete Step 4.

This template organizes the information about Enabling Competencies and allows the information to be uploaded to Elentra.

Parent(s): This column is optional because you will provide the full parent path in the Milestones template.

If you do choose to populate this column, indicate the parent(s) of the enabling competency by providing the stage, EPA, role, and key competency (e.g., C-C1-ME-ME1).

Code: Indicate the enabling competency code (e.g., ME1.1, CL2.3, HA3.4)

Name: Provide the enabling competency text.

Description: Not required but complete with CanMEDS information as you wish.

Detailed Description: Not required.

Save your file as a CSV.

When coding the Enabling Competencies remember these required codes for the CanMEDS stages and roles:

Transition to Discipline: D

Foundations of Discipline: F

Core Discipline: C

Transition to Practice: P

Professional: PR

Communicator: CM

Collaborator: CL

Scholar: SC

Leader: LD

Advocate: HA

Medical Expert: ME

This template organizes the information about Milestones and allows the information to be uploaded to Elentra.

Parent(s): In the parent column you must indicate the full parent path for all milestones. This should include stage, EPA, role, key competency and enabling competency (e.g., C-C1-ME-ME1-ME1.1)

Code: Codes should be recorded in uppercase letters. The format for the milestone code is: Learner Stage letter, followed by a space, CanMEDS Role letters, Key Competency number, followed by a period, Enabling Competency number, followed by a period, Milestone number.

Note that there should be no space between the CanMEDS Role letter and the Key Competency number.
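The format described above can be sketched in Python as follows. This is only an illustration for checking a spreadsheet before upload; the function names are hypothetical and this is not Elentra's own validation logic.

```python
import re

# Stage and role codes listed in this guide.
STAGES = {"D", "F", "C", "P"}
ROLES = {"PR", "CM", "CL", "SC", "LD", "HA", "ME"}

# Stage letter, one space, role letters, then three numbers separated by periods.
MILESTONE_CODE = re.compile(r"^([A-Z]) ([A-Z]{2})(\d+)\.(\d+)\.(\d+)$")


def format_milestone_code(stage, role, key_competency, enabling_competency, milestone):
    """Assemble a milestone code in uppercase, with no space after the role letters."""
    return f"{stage.upper()} {role.upper()}{key_competency}.{enabling_competency}.{milestone}"


def is_valid_milestone_code(code):
    """Return True if code matches the three-number format with known stage and role."""
    match = MILESTONE_CODE.match(code)
    return match is not None and match.group(1) in STAGES and match.group(2) in ROLES


print(format_milestone_code("c", "me", 1, 1, 2))
print(is_valid_milestone_code("C ME 1.1.2"))  # space after the role letters
```

A code with only two numbers after the role letters (for example, one copied directly from a Royal College document) fails this check, which is a prompt to add the third number.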

Creating and confirming your Milestones codes takes patience. You'll likely notice that in some Royal College (RC) documents there is only a two-digit milestone code. For the purposes of mapping your curriculum in Elentra, you must have three-digit milestone codes. We recommend you add the third digit in the order the milestones appear in the RC documents you're using. Make sure you check for duplication as you go so that unique milestones have their own codes but different codes aren't applied to repeating milestones (within one stage). Using the data organization tools in your spreadsheet software to reorder the milestone code columns and title columns can help you identify unneeded duplication.
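If you prefer to check for duplication with a script rather than by sorting columns, a minimal Python sketch might look like the following. It assumes you have already read the Code and Name columns into (code, name) pairs; the function name is hypothetical. It reports codes that are attached to more than one milestone text, and milestone texts that appear under more than one code within the same stage.

```python
from collections import defaultdict


def find_milestone_code_problems(rows):
    """rows: iterable of (code, name) pairs taken from the Milestones spreadsheet."""
    names_by_code = defaultdict(set)
    codes_by_stage_and_name = defaultdict(set)
    for code, name in rows:
        # Normalize whitespace and case so trivial differences are ignored.
        text = " ".join(name.split()).lower()
        names_by_code[code].add(text)
        stage = code.split(" ", 1)[0]  # stage letter comes before the space
        codes_by_stage_and_name[(stage, text)].add(code)

    # One code used for different milestone texts.
    reused_codes = sorted(c for c, names in names_by_code.items() if len(names) > 1)
    # The same milestone text given different codes within one stage.
    repeated_milestones = {
        key: sorted(codes)
        for key, codes in codes_by_stage_and_name.items()
        if len(codes) > 1
    }
    return reused_codes, repeated_milestones


rows = [
    ("C ME1.1.1", "Apply knowledge"),
    ("C ME1.1.2", "Apply  knowledge"),
    ("C ME1.1.3", "Perform exam"),
    ("C ME1.1.3", "Document findings"),
    ("F ME1.1.1", "Apply knowledge"),
]
reused, repeated = find_milestone_code_problems(rows)
print(reused)
print(repeated)
```

The sample rows are invented for illustration. The same text in a different stage (the F row) is not flagged, since the recommendation above applies within one stage.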

You may also notice that the RC allows programs to use milestones coded from one stage in another stage (so you may see an F milestone in a C stage EPA). How programs and organizations handle this is ultimately up to them but we recommend that you align the milestone code with the stage that it is actually being assessed in/mapped to. For example, if it’s a “D” milestone being mapped to an F EPA, rename it as an “F” milestone. This is because you likely have a different expectation of performance on the milestone in a different stage, even if it’s the same task.

Name: Provide the text of the milestone as provided by the Royal College.

Description: Not required but complete with CanMEDS information as you wish.

Detailed Description: Not required.

Save your file as a CSV.

When coding the Milestones remember these required codes for the CanMEDS stages and roles:

Stages: Transition to Discipline (D), Foundations of Discipline (F), Core Discipline (C), Transition to Practice (P)

Roles: Professional (PR), Communicator (CM), Collaborator (CL), Scholar (SC), Leader (LD), Advocate (HA), Medical Expert (ME)

Warning: Do not delete any contextual variables from the original list within Manage Curriculum or the CBE auto-setup feature will prompt you to re-add them the next time you use CBE.

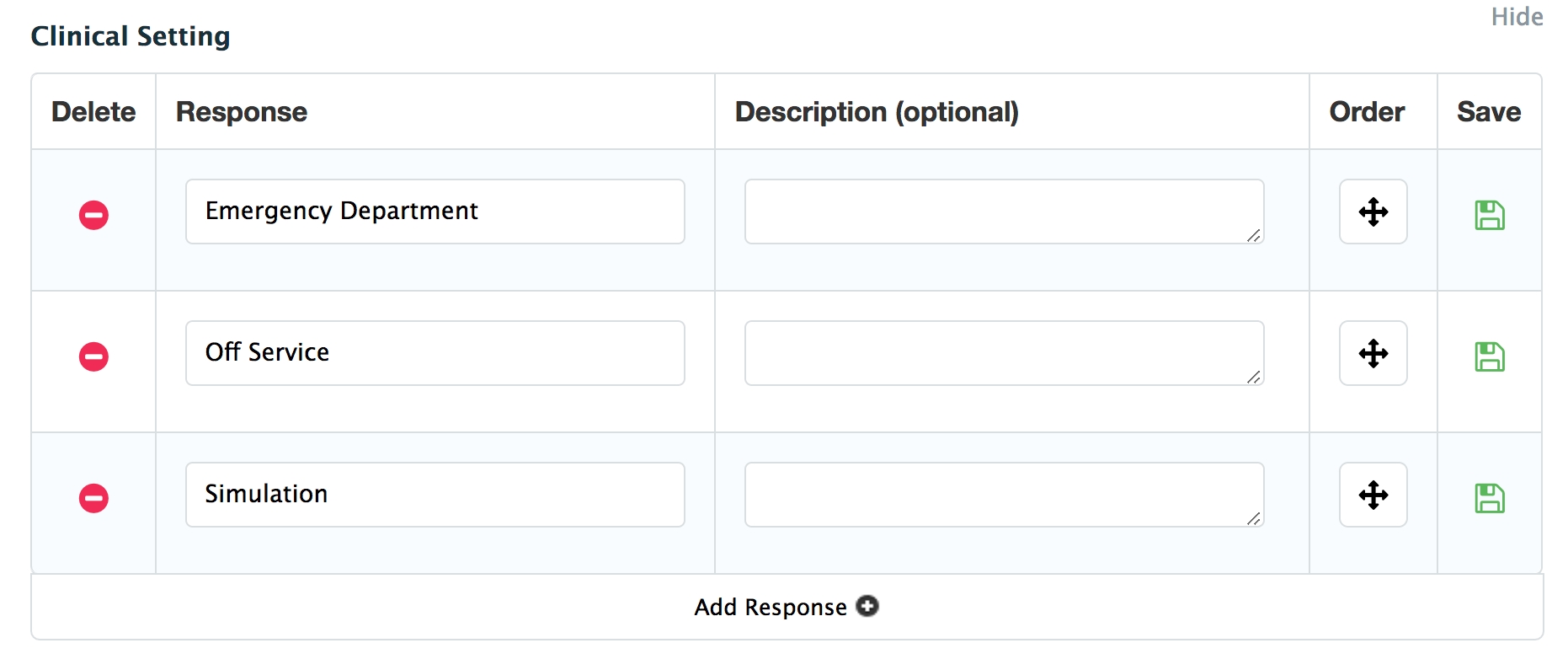

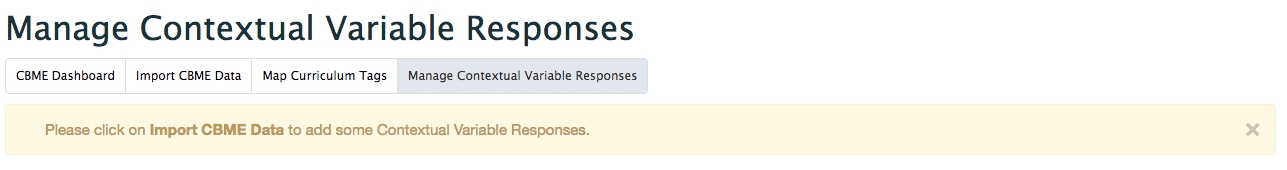

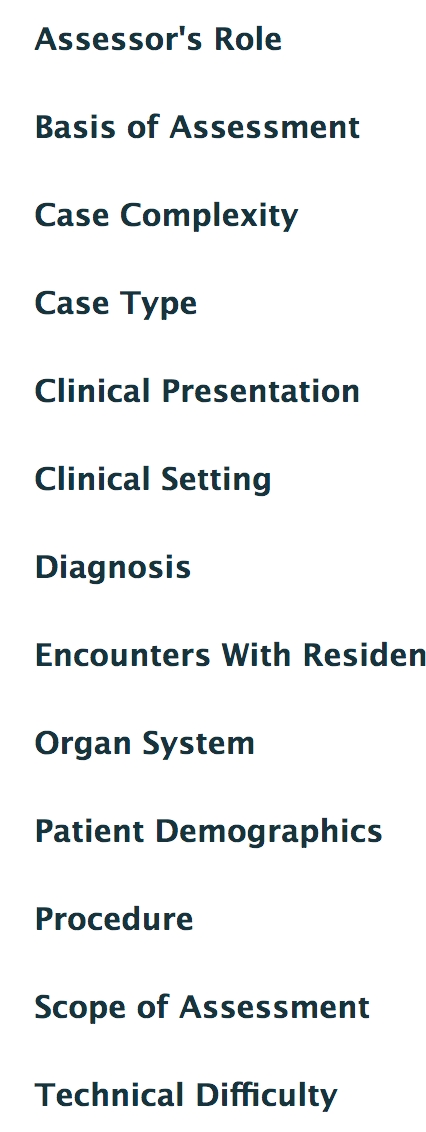

Contextual Variable Responses are used to describe the context in which a learner completes something. Examples of contextual variables include diagnosis, clinical presentation, clinical setting, case complexity, patient demographics, etc. For each variable, courses must define a list of response options. For example, under clinical presentation, a course might include cough, dyspnea, hemoptysis, etc.

Elentra will automatically set up a list of contextual variables in the Manage Curriculum interface. Institutions can also add contextual variables (e.g., assessor's role) to this list before uploading contextual variable responses (e.g., nurse, physician, senior resident) via each course CBE tab. Elentra provides access to the same contextual variables for all courses. Courses can customize their list of contextual variable responses, but all courses will see the same list of contextual variables. For this reason we strongly recommend that you work with your courses to standardize the list of contextual variables loaded into Elentra. If you do not, you risk eventually having hundreds of contextual variables that all course administrative staff have to sort through, whether they apply to their course or not.

Elentra will auto-create the following Contextual Variables for a CBE-enabled organization. Do not delete any of these or your users will be prompted to run the auto-setup tool again.

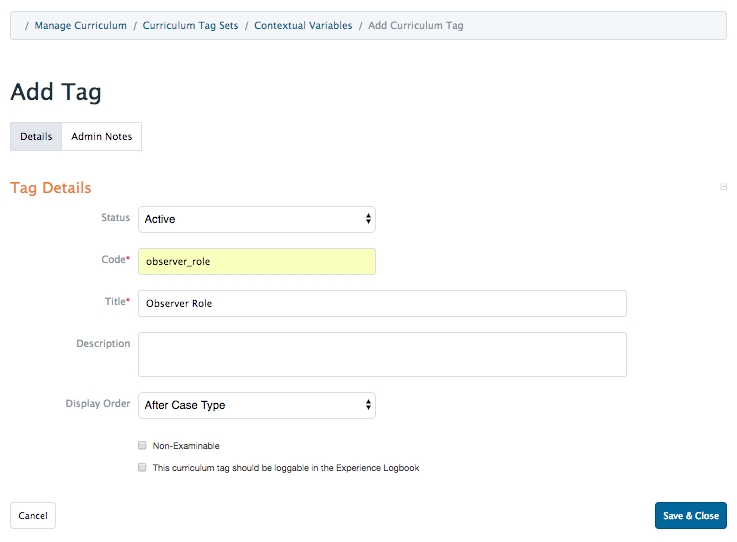

Institutions can add contextual variables to make available to their programs through Admin > Manage Curriculum. Group and role permissions of medtech:admin or staff:admin are required to do this. If you add a contextual variable to the organization-wide tag set, courses will be able to upload their own contextual variable responses for the tag.

Navigate to Admin > Manage Curriculum.

Click on 'Curriculum Tags' from the Manage Curriculum Card on the left sidebar.

Click on the Contextual Variables tag set.

Click 'Add Tag' or 'Import from CSV' to add additional tags (more detail regarding managing curriculum tags is available here).

Any new tags you add to the Contextual Variable tag set (e.g. observer role, consult type, domain) will be visible to and useable by all programs within your organization.

When you add new tags to the tag set, you'll be required to provide a code and title. It is recommended that you make the code and title the same, but separate the words in the code with underscores. For example, Title: Observer Role; Code: observer_role.

Note that there is a 24 character limit for curriculum tag codes and a 240 character limit for curriculum tag titles. If you enter more characters than the limit, the system will automatically cut off your entry at the maximum characters.
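To avoid having an entry silently cut off, you could check lengths before adding or importing tags. The Python sketch below derives a code from a title following the recommendation above (same words, separated by underscores) and reports entries that exceed the stated limits. The function names are hypothetical, and the lowercase convention is taken from the observer_role example rather than from a documented requirement.

```python
import re

MAX_CODE_LENGTH = 24    # character limit for curriculum tag codes
MAX_TITLE_LENGTH = 240  # character limit for curriculum tag titles


def suggest_tag_code(title):
    """Turn a title such as 'Observer Role' into 'observer_role'."""
    # Drop apostrophes so "Assessor's" becomes one word, then keep letters and digits.
    cleaned = title.replace("'", "").replace("\u2019", "")
    words = re.findall(r"[A-Za-z0-9]+", cleaned)
    return "_".join(word.lower() for word in words)


def check_tag(title, code):
    """Return a list of problems; an empty list means both values fit the limits."""
    problems = []
    if len(code) > MAX_CODE_LENGTH:
        problems.append(f"code is {len(code)} characters (limit {MAX_CODE_LENGTH})")
    if len(title) > MAX_TITLE_LENGTH:
        problems.append(f"title is {len(title)} characters (limit {MAX_TITLE_LENGTH})")
    return problems


code = suggest_tag_code("Observer Role")
print(code, check_tag("Observer Role", code))
```

When a suggested code is too long, shorten it by hand so that the stored code is one you chose rather than a truncated version.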

After you have added Contextual Variables to the existing tag set, programs will be able to add the new response codes (and responses) to the contextual variable response template and successfully import them.

Once you have built out the list of all contextual variables you want included in the system you can import specific contextual variable responses for each course.

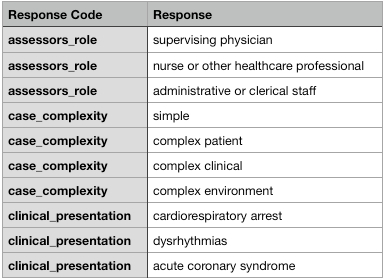

First, build a spreadsheet that includes the CV response category and individual CV responses. You can use this file as a starting place:

Note that responses will display in the order in which they appear in the CSV file you upload. You can reorder the responses later, but you may save time by entering them in the order you want users to see them.

The contextual variable response spreadsheet should have the following information:

Response Code: This column should include all contextual variables applicable to the course/program (e.g., assessor’s role, basis of assessment, case complexity, case type). Make sure to use the exact code of each contextual variable tag in the response_code column.

Response: In this column, you can add the response variables required for a course (e.g. Case complexity response variables: low, medium, high). To add more than one response variable per response code, simply insert another line and fill in both the response code and response columns.

Description (optional): This field is not seen by users at this point and simply serves as metadata.

Save as a CSV file.
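If your responses already live in another system, you could generate the file with a short script instead of a spreadsheet. The Python sketch below is an illustration only: the response_code header comes from this guide, but the response and description header names and the case_complexity code are assumptions, so compare the output against the starter file before uploading.

```python
import csv
import io


def build_cv_responses_csv(responses):
    """responses: list of (response_code, response, description) in display order.

    One row is written per response, so a response code with several
    responses simply appears on several rows.
    """
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    # Header names other than response_code are assumed for illustration.
    writer.writerow(["response_code", "response", "description"])
    for response_code, response, description in responses:
        writer.writerow([response_code, response, description])
    return buffer.getvalue()


csv_text = build_cv_responses_csv([
    ("case_complexity", "Low", ""),
    ("case_complexity", "Medium", ""),
    ("case_complexity", "High", ""),
])
print(csv_text)
```

Because responses display in file order, the list passed in should already be in the order you want users to see.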

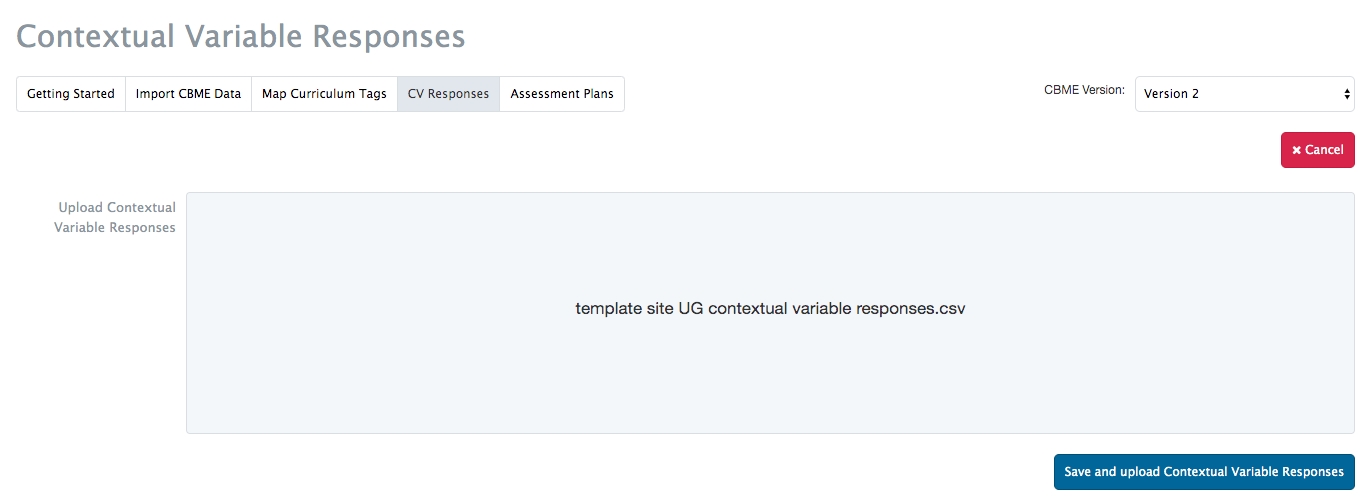

Navigate to Admin > Manage Courses.

From the cog menu beside the course name, select 'CBE.'

From the tab menu below the Competency-Based Education header, click 'CV Responses'.

Click 'Upload CV Responses'.

Drag and drop or search your computer for the appropriate file.

Click 'Save and upload Contextual Variable Responses'.

When the upload is complete you'll be able to click on any contextual variable and view the responses you've added.

Periodically an organization or course's CBE curriculum may be updated. Elentra can store multiple versions of a CBE curriculum and associate different learners with different versions as needed.

When a new curriculum version is introduced, administrators have the option of versioning an organization tree or a course tree.

For organizations using CBE outside the context of a Royal College curriculum framework, if a new curriculum version is added, you'll need to use the option to assign the new version to any existing user whose user-tree you want to update to point to the new curriculum version.

The remainder of this information is for programs using a Royal College (Milestones) curriculum framework.

For Royal College Programs, new curriculum versions will be applied to each CBME-enabled learner at the stage level. This means that if a learner is currently in Foundations, they will retain the previous curriculum version in Transition to Discipline and Foundations of Discipline, but will be given the new curriculum version in Core and Transition to Practice. Please ensure that all of your learners are in the correct stage (on their CBE Dashboards) prior to publishing a new curriculum version. Remember: a green checkmark on the stage indicates that the learner has completed that stage. A learner is currently in the stage that comes after the last green stage checkmark.
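The stage-level rule can be illustrated with a small Python sketch. It is a model of the behaviour described above, not Elentra's implementation; the function names are hypothetical, and it assumes stages are completed in order (D, F, C, P).

```python
STAGE_ORDER = ["D", "F", "C", "P"]


def current_stage(completed_stages):
    """The current stage is the one after the last green checkmark."""
    for stage in STAGE_ORDER:
        if stage not in completed_stages:
            return stage
    return None  # every stage has been completed


def versions_after_publish(completed_stages, versions_before, new_version):
    """Return the curriculum version per stage after a new version is published.

    Completed and current stages keep their version; later stages get the new one.
    """
    result = dict(versions_before)
    current = current_stage(completed_stages)
    if current is None:
        return result
    for stage in STAGE_ORDER[STAGE_ORDER.index(current) + 1:]:
        result[stage] = new_version
    return result


# A learner who has completed Transition to Discipline is currently in Foundations.
before = {"D": 1, "F": 1, "C": 1, "P": 1}
print(versions_after_publish({"D"}, before, new_version=2))
```

The example shows why stage status matters: if the same learner were incorrectly left without a checkmark on D, Foundations would also move to the new version.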

After an administrator has published a new version, they will need to build tools for all of the new or changing EPAs before learners can be assessed on them.

If you are a Royal College program, make sure learners are flagged as CBE learners before you complete the versioning process. A developer can do this directly in the database, or you can use a database setting (learner_levels_enabled) to allow course administrators to do this on the course enrolment page.

If learners are not flagged as CB(M)E learners, they will be skipped in the versioning process.

Prepare required CSV files

Add new curriculum version

Create any new Contextual Variable responses as needed and update Procedure criteria (per EPA) if applicable

Contextual variable responses are not specific to curriculum versions, with the exception of procedure criteria uploaded per specific EPA. When a new curriculum version is added you’ll see a version picker display on the CV responses tab, but edits made to CV responses in any version will be reflected across all versions.

Build new assessment tools for changed or added curriculum tags

Build an assessment plan for the new curriculum version

Update mappings, and priority and likelihood ratings on rotations if applicable

If a course uses the Clinical Experience Rotation Scheduler and has mapped curriculum tags to specific rotations and assigned priority and likelihood ratings, these will be copied forward for all unchanging curriculum tags. If a new curriculum tag has been added in a version, you’ll need to map it and provide a priority and likelihood rating if required.

Reset any user-trees as needed

For Royal College programs, learners will be assigned the new curriculum version for any upcoming stages they still need to complete.

Learner Dashboard

Completed and current stages for a learner will retain their previous curriculum version(s).

Future stages for a learner will be updated to the new curriculum version.

The version applied to each stage, shown in a small grey badge to the right of the stage name, should be updated to reflect the new curriculum version for future stages.

Any assessment tasks completed on a learner in a future stage for EPAs that have been updated in the new curriculum version should move to "Archived Assessments".

The "What's Left" modal showing the Assessment Plan requirements per EPA will only update for a learner after a new assessment plan is published.

Program Dashboard

Each learner's list of EPAs will reflect their curriculum version (so, one user may show D1, D2, and D3 and another user may show D1, D2, D3 and D4 if they are on version 2 for stage D and D4 was added in version 2).

Visual Summary Dashboards

More information coming soon.

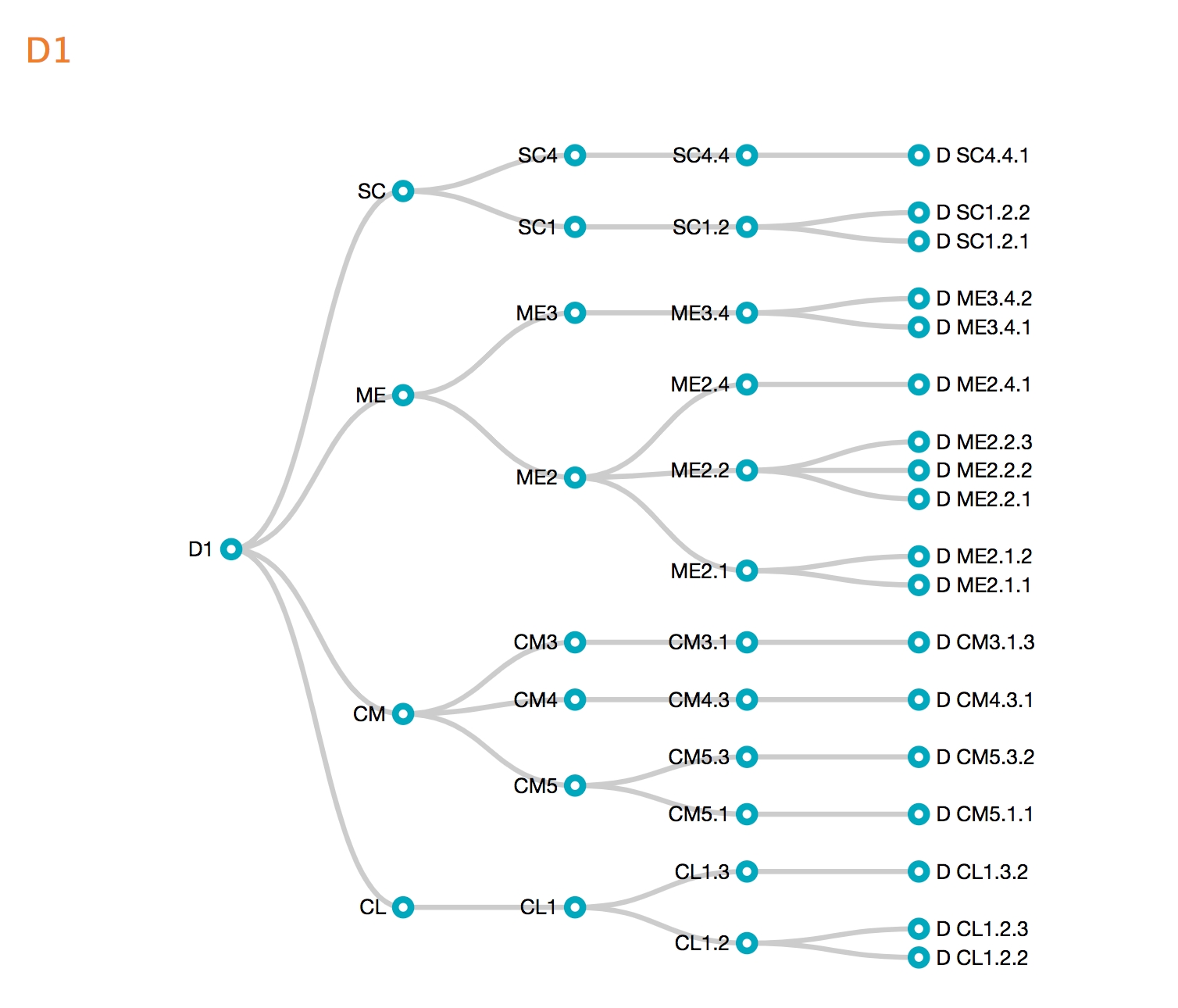

EPA Encyclopedia

Updates in Elentra ME 1.26 mean that learners should see their unique user-trees when viewing the EPA Encyclopedia (i.e., they'll see different curriculum versions applied to different stages).

Completing Assessments

When initiating an assessment, only the EPAs and associated forms relevant to a specific learner will be displayed. Faculty or admin will not have to choose between multiple versions of on-demand forms for a given learner.

Setting learner CB(M)E status (via the course enrolment page or directly in the database) is a prerequisite for Royal College programs that use curriculum versioning and want residents to move to a new curriculum version when they begin a new stage of training.

REMINDER: Please ensure that all of your learners are in the correct stage (on their CBME Dashboards) prior to publishing the new curriculum version. The green checkmark on the stage indicates that the learner has completed that stage. A learner is currently in the stage that comes after the last green stage checkmark. If learners are not in the correct stages, they will receive the new curriculum in stages where they should not, or miss it in stages where they should have it.

Please ensure that you have documented any changes that will need to be made, in addition to creating (or modifying) new CSV templates for upload. From the user interface, you will be able to indicate:

Whether an EPA is changing, not changing, or being retired

Within each EPA that has been marked as changing, indicate if any KC, EC, or Milestones will be changing, not changing, or retired

If you are adding milestones to an EPA, mark it as changing so that the uploader will add the new milestones

Entirely new EPAs will be detected by the uploader without you needing to indicate anything. For example, if you currently have D1, D2, and D3, but will be adding a D4 and D5, the system will detect these in your spreadsheet and add them to the new curriculum.
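When documenting your changes, it can help to confirm in advance which EPAs the uploader is likely to treat as new. The Python sketch below compares the EPA codes you have today with those in your new spreadsheet. It only illustrates the idea and is not the uploader's actual logic; the function name is hypothetical.

```python
def find_new_epas(current_epa_codes, spreadsheet_epa_codes):
    """Return EPA codes in the spreadsheet that do not exist in the current version."""
    existing = set(current_epa_codes)
    seen = set()
    new_codes = []
    for code in spreadsheet_epa_codes:
        # Keep spreadsheet order and skip repeated rows.
        if code not in existing and code not in seen:
            new_codes.append(code)
            seen.add(code)
    return new_codes


print(find_new_epas(["D1", "D2", "D3"], ["D1", "D2", "D3", "D4", "D5"]))
```

Any existing EPA that is not in the returned list still needs to be marked in the interface as changing, not changing, or being retired.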