The Evaluation Reports section will allow you to generate reports based on evaluations completed via distributions. Evaluation reports will not include commenter names, even if you check off the commenter name option when setting the report options.

This report is relevant only if your organization uses the Clinical Experiences rotation schedule. If you distribute rotation evaluations through a rotation-based distribution, you can use this report to view results. The exact format of the report will depend on the form it is reporting on.

Select a course, date range, rotation, curriculum period and form.

Set the report parameters regarding displaying comments and averages.

Click 'Generate Report'.

This report will not include learner names, even if you check off the commenter name option when setting the report options.

For use with distributions completed by event type.

Set a date range.

Select Individual Events: Check this off if you want the ability to select individual events (otherwise you will have to report on all events).

Select the event type, distribution by a curriculum period, learning event and form.

This report will not include learner names, even if you check off the commenter name option when setting the report options.

Use this to report on feedback provided by participants when a feedback form is attached to an event as a resource.

Select a course, date range, event type, form and learning event (optional).

You will notice some extra report options for this type of report.

Separate Report for Each Event: This will generate a separate file for each event if multiple events are selected.

Include Event Info Subheader: This will provide a bit of detail about the event being evaluated (title, date, and teacher).

This report can include an average and an aggregate positive/negative score. This report will not include learner names, even if you check off the commenter name option when setting the report options.

For use in viewing a summary report of learner evaluation of an instructor.

Select a course and set a date range.

Select a faculty member from the dropdown menu by clicking on their name. Note that only faculty associated with the selected course in the given time period will show up on the list. Additionally, they must have been assigned as an assessor in another distribution in the organization. Please see additional information below.

Select a form and distribution (optional).

Set the report parameters regarding displaying comments and averages.

Click 'Download PDF(s)'.

This report will not include an average, even if you check off Include Average when setting the report options.

Faculty names become available to select for this report only when the faculty member is also an assessor on a distribution in the organization. This is designed in part to protect the confidential nature of faculty evaluations and to prevent staff from generating reports on any faculty member at any time. If your organization does not use assessments, or you require reports on faculty whose names aren't available, please reach out to us and we can help you put a workaround in place.

You may also be able to report on faculty evaluations by accessing an aggregated report from a specific distribution. Please see more detail in the Weighted CSV Report section in Distributions.

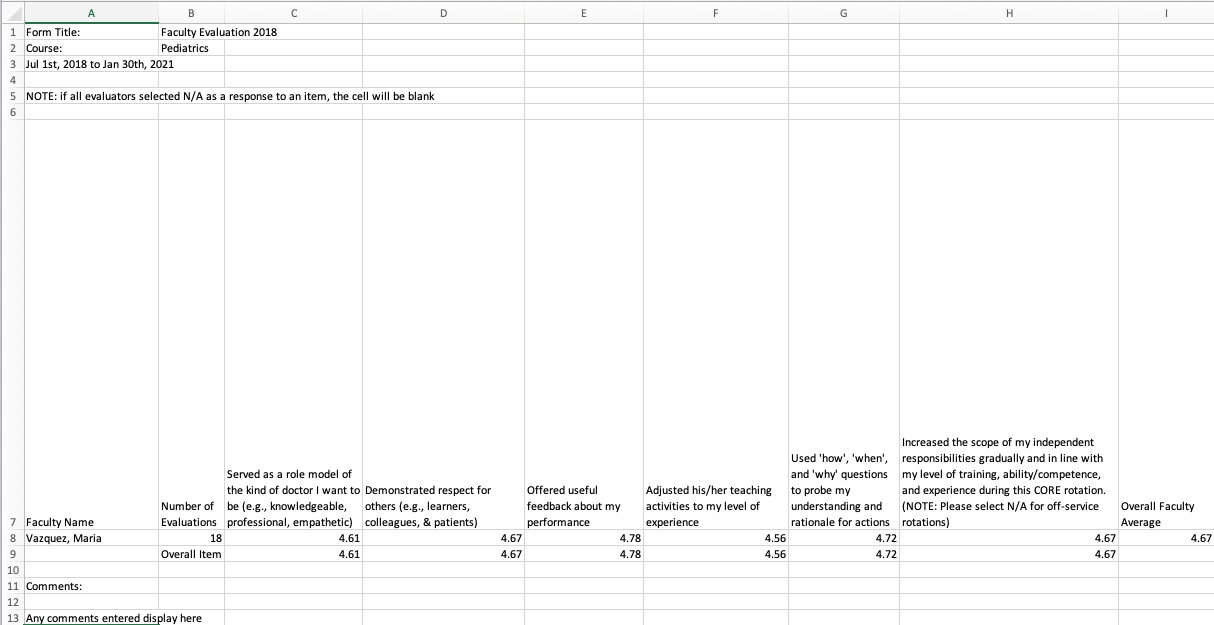

Displays the average of each selection that was made by evaluators for each item in the form for each target on whom the form was completed. Commentary for each target is listed below the table of total selections for each form item.

Select one or more courses.

Set a date range.

Optionally include external assessors by checking off the box.

Select the relevant faculty.

Select the relevant form.

Select the relevant distribution(s).

Click Download CSV.

Takes a total of all evaluations and splits the rating scale into low and high responses. For each faculty target, the report indicates the highest score that they received for each question and also shows the lowest low score and the highest high score across all faculty for each question.

Select one or more courses.

Set a date range.

Select the relevant faculty.

Select the relevant form(s).

Select the relevant distribution(s).

Include Target Names - Check this box if you want to include the target names in the report.

Include Question Text - Check this box if you want to include the question text in the report.

Click Download CSV.

Aggregates, on a per-faculty basis, the results of the standard faculty evaluation form type, for forms delivered via distribution only. Optionally include any additional program-specific questions.

Select one or more courses.

Set a date range.

Select the relevant faculty.

Include Comments - check this box to include comments.

Unique Commenter ID - check this box to apply a commenter ID to comments so you can identify patterns in comments from a single evaluator.

Include Description - check this box and enter text that will be included at the top of the PDF report.

Include Average - check this box to include average ratings.

Click Download PDF.

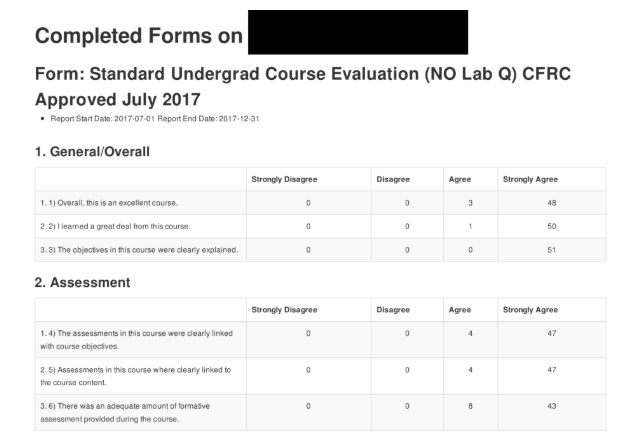

For use in viewing a summary report of learner evaluation of a course.

Select a course and set a date range.

Select a form and distribution (optional).

Set the report parameters regarding displaying comments and averages.

Click 'Download PDF(s)'.

This report will not include learner names, even if you check off the commenter name option when setting the report options. This report can include an average and an aggregate positive/negative score.

Select a course.

Set a date range for the report.

Select the event types.

Select the appropriate distributions.

Select the relevant learning event (optional).

Optionally select to download as one file.

This report will only be populated if you are using automated rotation evaluations enabled via the Clinical Experiences Rotation Schedule. To use automated rotation evaluations you must also be using a standard rotation evaluation form type.

Select a course/program.

Set the date range.

Select a form (if multiple forms have been published, you may be able to choose among them).

Select a rotation schedule (the options available will be based on the set date range).

If you select multiple rotations, results will be aggregated (this can function a bit like a program evaluation if desired).

Choose whether to view results as a CSV or PDF.

This report will not include commenter names.

Improved in ME 1.20!

Updated Learner Assessments (Aggregated) Report so that administrative staff running the report can optionally see commenter names (previously ticking that checkbox had no effect).

The Assessments Reports section allows you to generate reports based on assessments. With a few exceptions, these reports draw on forms completed via distributions.

This report allows you to compile all assessments completed on a target in one or more courses into one or many files. It does not aggregate results, just compiles multiple forms.

Select a course, set a date range and select a course group (optional).

Select a learner.

Select a form (optional).

Click 'Download PDF(s)'.

Choose whether to download as one file (all forms will be stored in one file) or not (you'll download a file for each form).

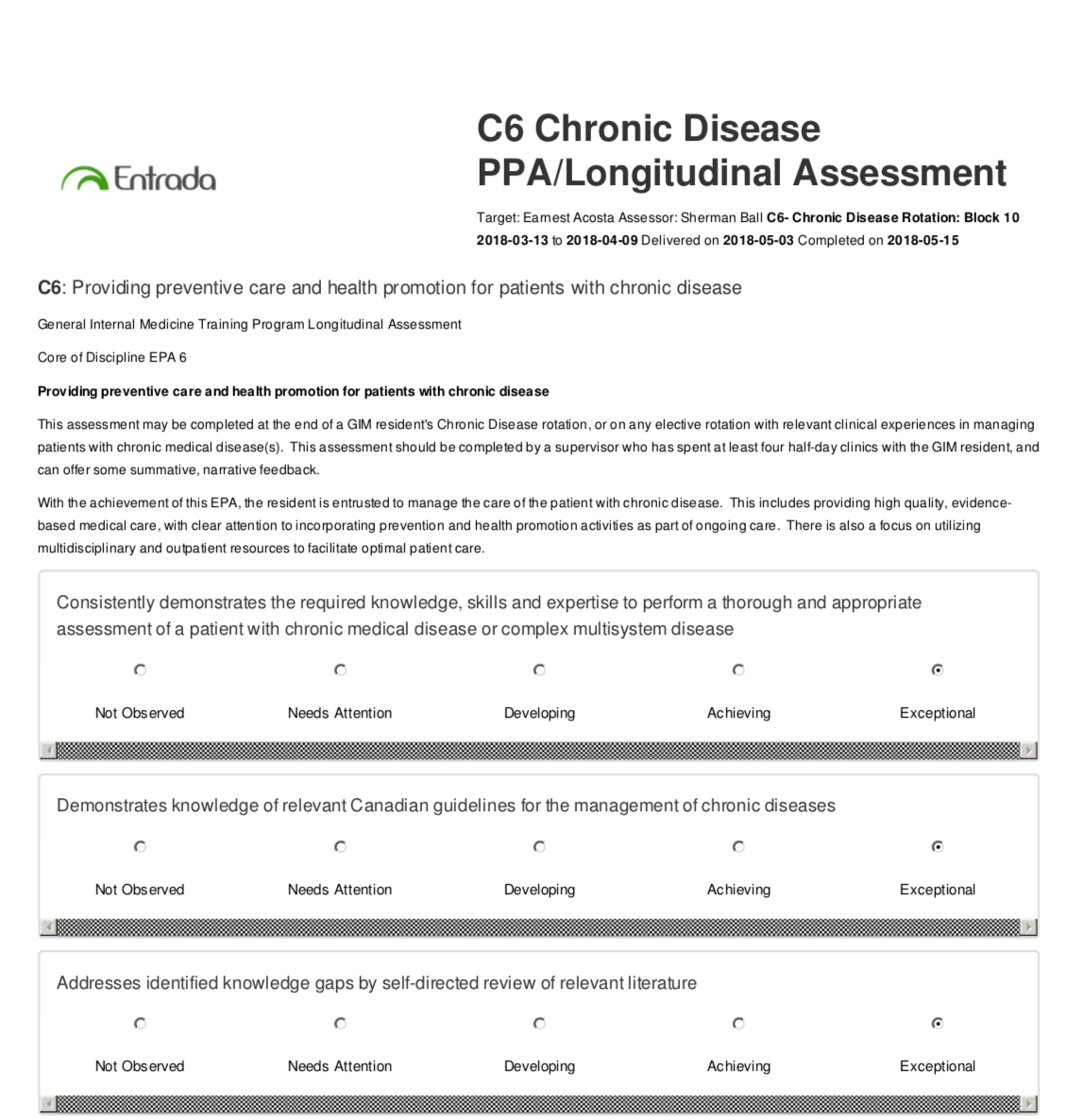

A file will download to your computer with the appropriate forms included. Each form will include the target and assessor, delivery and completion date, form responses and comments, etc. The file names will be: learnerfirstname-learnerlastname-assessment-datereportrun-#.pdf. For example: earnest-acosta-assessment-20181005-1.pdf
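If you want to sort or match downloaded files with a script, the documented naming pattern can be reproduced and parsed as below. This is a minimal sketch, not Elentra code: the helper names `build_filename` and `parse_filename` are hypothetical, and the lowercasing and YYYYMMDD date format are inferred from the single example above.

```python
import re
from datetime import date
from typing import Optional

# Documented pattern: learnerfirstname-learnerlastname-assessment-datereportrun-#.pdf
# Assumption: names are lowercased and the run date is YYYYMMDD, as in the example.
FILENAME_RE = re.compile(
    r"^(?P<first>[^-]+)-(?P<last>[^-]+)-assessment-(?P<run_date>\d{8})-(?P<index>\d+)\.pdf$"
)


def build_filename(first: str, last: str, run_date: date, index: int) -> str:
    """Build a file name following the documented pattern (hypothetical helper)."""
    return f"{first.lower()}-{last.lower()}-assessment-{run_date:%Y%m%d}-{index}.pdf"


def parse_filename(name: str) -> Optional[dict]:
    """Split a downloaded file name into its parts, or return None if it does not match.

    Learner names that themselves contain hyphens will not parse with this simple pattern.
    """
    match = FILENAME_RE.match(name)
    if match is None:
        return None
    parts = match.groupdict()
    parts["index"] = int(parts["index"])
    return parts


print(build_filename("Earnest", "Acosta", date(2018, 10, 5), 1))
# earnest-acosta-assessment-20181005-1.pdf
print(parse_filename("earnest-acosta-assessment-20181005-1.pdf"))
```

Parsing rather than relying on download order makes it easier to group several learners' files after a batch of reports.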

Use this report to create an aggregated report on learner performance on a single form that may have been used multiple times and completed by multiple assessors. If you are a staff:pcoordinator or faculty:director, the list of learners available will depend on your affiliation with a course/program.

Set the date range.

Select a learner.

Select a form.

Set the report parameters regarding displaying comments and averages.

Include Comments - Check this to include any narrative comments from tasks included in the report.

Unique Commenter ID - Check this to identify the authors of comments using a unique code instead of the name.

Include Commenter Name - Check this to include the name of comment authors.

Include Description - Check this to include a narrative description that will display at the top of the report.

Include Average - Check this to include averages across each item. Note: This option assigns a numeric value to the item response options.

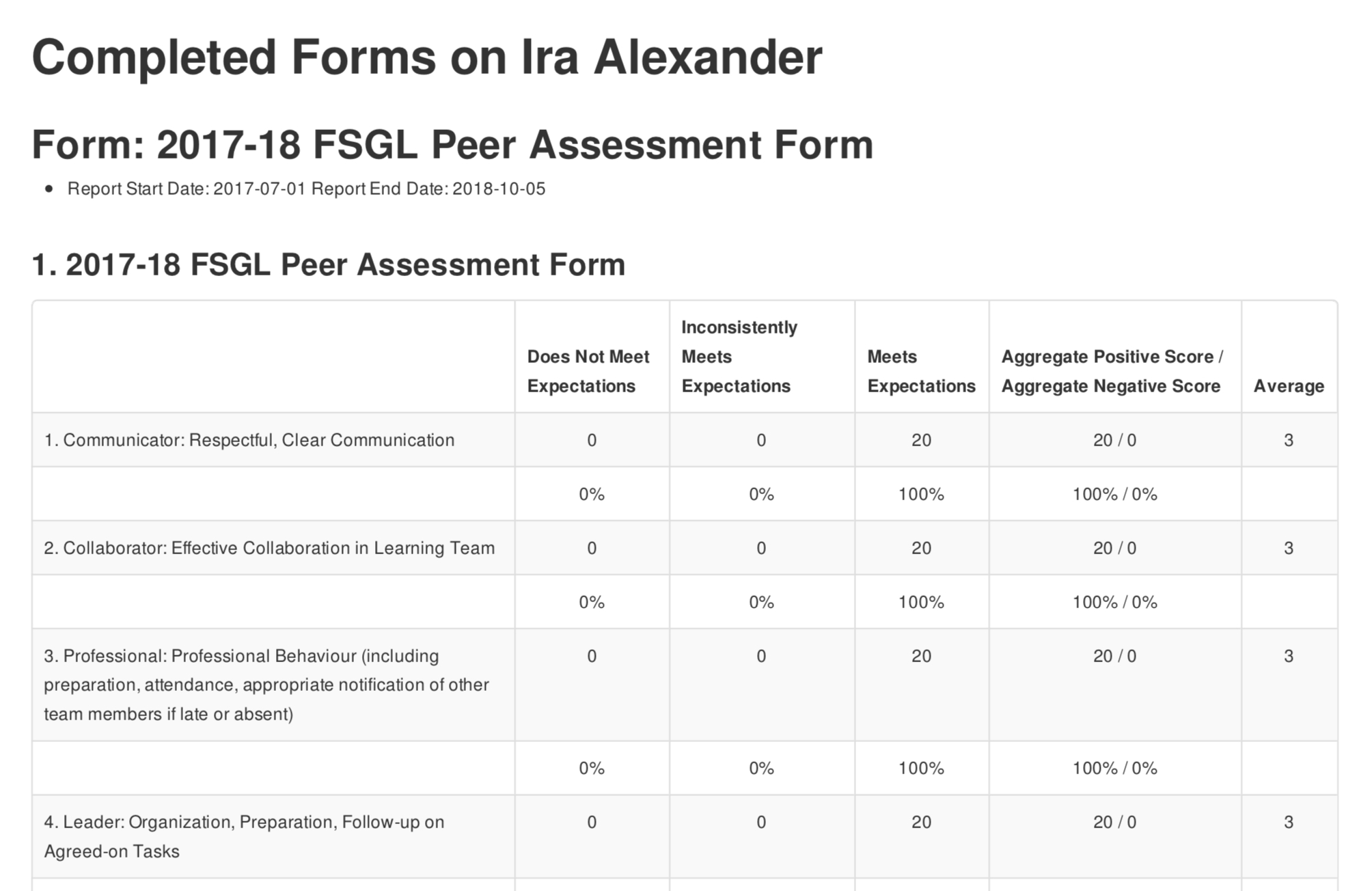

An average and aggregate positive and negative score are available with this report.

Click 'Download PDF(s)'.

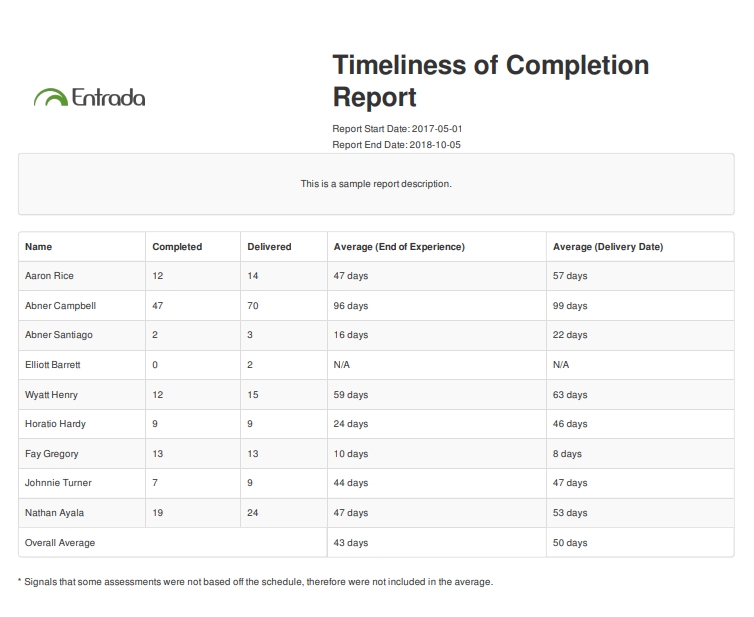

For use in reporting on tasks delivered to and completed by faculty. Report columns include the number of tasks delivered and completed as well as the average time to completion from delivery date and average time to completion from the end of the experience (e.g., a block) per user. It also provides an overall average across all users. Available as a PDF or CSV.

Additional details on this report:

shows all tasks delivered to an assessor based on the parameters set when requesting the report (i.e., course, date range, faculty member)

importantly, the report displays progress records per target not just a single assessment task. So if a distribution generates 15 tasks for a faculty member to complete, that might show as ‘1’ on the A&E badge, but in the Timeliness of Completion report it will show as 15 delivered tasks

tasks that are delegations will not display in the report (i.e., when the faculty member is the delegator)

tasks that are the result of delegations will show in the report (i.e., if a clinical secretary is the delegator, once they send a task to a faculty member, it will show in the report on that faculty member)

if a task has expired it is included in the delivered column but not in the completed column

if a task has been deleted it is not included in the report

if a faculty member forwards a task, it is removed from their delivered count

if a task has been forwarded to the faculty member, it is included in the delivered column and the completed column (once applicable)

if a task allows for multiple assessments on the same target, the initial task will register a count of 1 in the delivered column. When the first assessment is completed, the completed column will increase by 1. Each additional assessment completed beyond the first increases both the delivered and completed columns by 1.
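The counting rules above can be summarized in a short sketch. It assumes a hypothetical `ProgressRecord` shape (one record per target, as the report counts progress records rather than badge tasks); it is not Elentra's schema or code. Forwarded-in tasks are simply ordinary records here, and averages are pooled over completed assessments, which is an assumption about how the report averages.

```python
from dataclasses import dataclass, field
from datetime import date
from statistics import mean
from typing import List, Optional


@dataclass
class ProgressRecord:
    """One progress record per target (hypothetical shape, not Elentra's schema)."""
    delivered_on: date
    experience_end: date  # e.g. the last day of the block
    completed_on: List[date] = field(default_factory=list)  # empty if pending or expired
    deleted: bool = False
    forwarded_away: bool = False  # the faculty member forwarded the task to someone else
    own_delegation: bool = False  # the faculty member is the delegator


def timeliness(records: List[ProgressRecord]) -> dict:
    """Count delivered/completed tasks and average days to completion for one faculty member."""
    delivered = completed = 0
    days_from_delivery: List[int] = []
    days_from_end: List[int] = []
    for record in records:
        # Deleted tasks, tasks forwarded away and the user's own delegations are not counted.
        if record.deleted or record.forwarded_away or record.own_delegation:
            continue
        n = len(record.completed_on)
        # The initial task counts once as delivered (even if it expired uncompleted);
        # each completion beyond the first adds 1 to both columns.
        delivered += max(1, n)
        completed += n
        for done in record.completed_on:
            days_from_delivery.append((done - record.delivered_on).days)
            # Negative values mean the task was completed before the experience ended.
            days_from_end.append((done - record.experience_end).days)

    def average(values: List[int]) -> Optional[float]:
        return mean(values) if values else None

    return {
        "delivered": delivered,
        "completed": completed,
        "avg_days_from_delivery": average(days_from_delivery),
        "avg_days_from_end": average(days_from_end),
    }


records = [
    ProgressRecord(date(2024, 1, 1), date(2024, 1, 14), [date(2024, 1, 10)]),
    ProgressRecord(date(2024, 1, 1), date(2024, 1, 14)),  # expired: delivered, not completed
    ProgressRecord(date(2024, 1, 1), date(2024, 1, 14), [date(2024, 1, 15), date(2024, 1, 21)]),
    ProgressRecord(date(2024, 1, 1), date(2024, 1, 14), [date(2024, 1, 2)], deleted=True),
]
print(timeliness(records))
```

In this example the expired record still adds 1 to the delivered count, while the deleted record is excluded entirely.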

To generate the report:

Select a course.

This restricts the results of the report to assessments associated with this course. If a faculty member is an assessor across multiple courses, you'd need to run multiple reports (one per course) to get an overall view of their total assessments delivered and completed.

Set a date range.

If external assessors were used, you will have the option to include them in the report or not.

Select one or more users by clicking the checkbox beside each required name. Please note that if you select all faculty it can take some time for all names to appear. Please be patient! To delete a user from the report click the 'x' beside the user's name.

Include Average Delivery Date: Enable this if desired.

Click 'Download PDF(s)' or 'Download CSV(s)'.

The report should download to your computer.

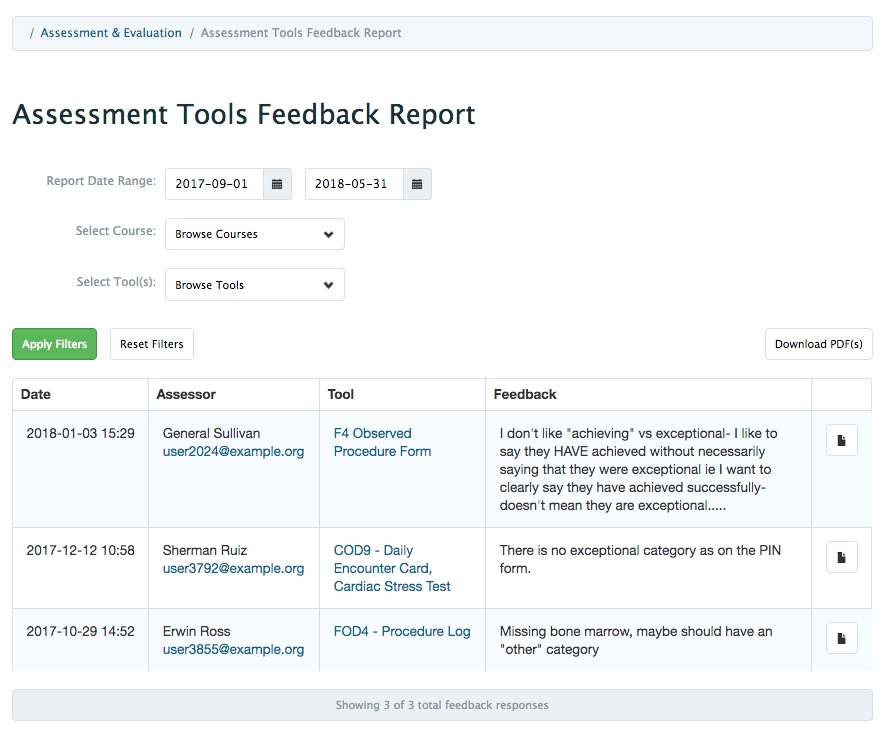

For use in collating responses provided by faculty completing forms produced through form templates in the competency-based medical education module. This report aggregates comments from forms that are sent out using a distribution. When logged in as an admin, you'll see a full list of all form feedback provided so far displayed on the screen.

Set a date range.

Select a course from the dropdown options.

Select a tool from the dropdown options.

Click 'Apply Filters'.

Results will display on the screen and you can click 'Download PDF(s)' if you need to download a copy.

To begin a new search be sure to click 'Reset Filters' and select a new course and/or tool as appropriate.

You can click on the page icon to the right of the feedback column to view the form being referenced.

An organization must be using the rotation scheduler to use this report. This report displays the number of completed assessments over the number of triggered assessments per block for all students in a course during the selected curriculum period. Data is grouped by block, based on the selected block type. The learner is considered the target of the assessments.
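The "completed over triggered" figure can be illustrated with a small sketch. The input shape (one `(learner, block, completed)` tuple per triggered assessment, with the learner as target) is a hypothetical stand-in for the report's data, not Elentra's internal format.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

Key = Tuple[str, str]  # (learner, block)


def completion_by_block(assessments: Iterable[Tuple[str, str, bool]]) -> Dict[Key, Tuple[int, int]]:
    """Return {(learner, block): (completed, triggered)} for each learner and block."""
    triggered: Dict[Key, int] = defaultdict(int)
    completed: Dict[Key, int] = defaultdict(int)
    for learner, block, is_completed in assessments:
        key = (learner, block)
        triggered[key] += 1
        if is_completed:
            completed[key] += 1
    return {key: (completed[key], triggered[key]) for key in triggered}


rows = [
    ("Learner A", "Block 1", True),
    ("Learner A", "Block 1", False),
    ("Learner A", "Block 2", True),
]
for (learner, block), (done, total) in completion_by_block(rows).items():
    print(f"{learner} {block}: {done}/{total}")
```

Keeping the two counts separate, rather than storing a percentage, preserves the "completed over triggered" form the report displays.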

Select a course (you can only select one).

Select a curriculum period.

Select a block template type (e.g. 1 week, 2 week). The block templates available will depend on the setup of the rotation schedule for the course.

Click 'Download CSV(s)'.

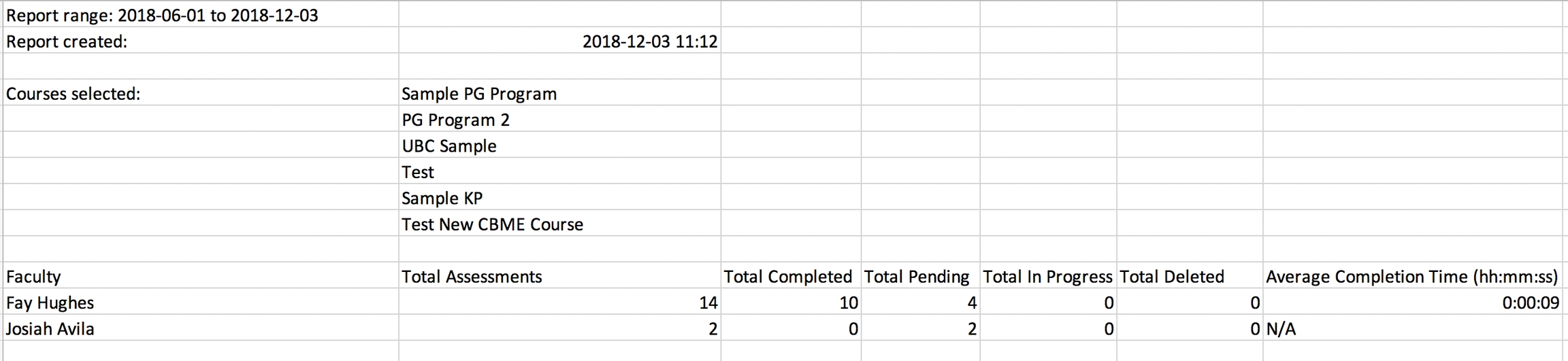

For use in monitoring the progress of faculty in completing the tasks assigned to them.

Select a course.

Set a date range.

Decide whether or not to include external assessors. (This is only relevant if you allow users to send tasks to external assessors, i.e., users without an Elentra account.)

Select the relevant faculty (you can select more than one).

Click 'Download CSV(s)'.

A CSV file will download to your computer.

Generates one CSV file per tool (you can select multiple tools at the same time) and lists all completed instances of that tool across all learners within a custom date range. The report displays the encounter date, delivery date, and completed date for each form, as well as each form item and its associated response scale in the column header. If comments were entered for a selected response, they are included in a subsequent column. Users can opt to view only completed instances of a form or to view all instances of a form (pending, in progress, completed, and deleted).

Provides an inline overview or allows you to generate a CSV that displays all/selected assessment responses for a specified course, form, assessment status and date range.

Note that assessor and evaluator names will display in this report unless a form has been set as Confidential.

This report can be used by administrative staff to keep an inventory of distributions.

Select a report type. You can see an overview of distributions or individual tasks.

Select a course.

Set a date range.

Select a task type.

Select a distribution or an individual task (your option will depend on the first selection you made on the page).

Click 'Generate Report'.

From here you can search within the results or click on any distribution to see its progress.

Use to see an overview of who is set as a reviewer for distributed tasks. (When you create a distribution you can assign a reviewer who serves as a gatekeeper of completed tasks before they are released to be seen by their target. This is completed during the final step of a distribution. For additional information please see the Assessment and Evaluation>Distributions help section.)

More information coming soon.

This report functions like the report above but offers users a view of the report in the interface without requiring them to open a PDF.

Please see the next page for information on the Weighted CSV Report.

Elentra includes a variety of report options through the Admin > Assessment & Evaluation tab. Please read report descriptions carefully as not all reports necessarily include data from both distributed forms and user-initiated on-demand forms.

Additional reporting tools for user-initiated on-demand forms used with the CBME tools can be viewed from the CBME dashboard.

A&E Reporting is an administrative reporting tool. Note that individual users may have access to their own reports via their Assessment & Evaluation button, but the availability of such reports depends on how distributions were set up.

When you create most reports you will have some additional options after selecting the appropriate course/faculty/learner/form, etc. These options allow you to customize the reports you run for different audiences.

Please note that not all of these options will actually display on every report, even though you can select them through the user interface. Please see each specific report for additional detail about what will or will not be visible.

Include Comments: Enable this option if you'd like the report to include narrative comments made on the selected form.

Unique Commenter ID: If you select to include comments you'll see this option. It allows you to apply a masked ID number to each assessor/evaluator. This can be useful to identify patterns in comments (e.g., multiple negative comments that come from one person) while protecting the identity of those who completed the form.

Include Commenter Name: If you would like to display the names of commenters, click on the checkbox.

Include Description: If you click this checkbox you can give a description to the report. The text you enter will be displayed at the top of the report generated.

Include Average: Click this checkbox to include a column showing the average score.

Include Aggregate Scoring: If you enable the average, you'll have the option to also include a column with aggregate positive and negative scoring in some reports. This gives a dichotomous overview of positive and negative ratings.
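To make the Unique Commenter ID, Include Average and Include Aggregate Scoring options more concrete, here is a minimal sketch. It is not Elentra's implementation: the "Commenter N" label, scoring options 1..n by left-to-right position, and splitting the scale into a lower (negative) and upper (positive) half with an odd scale's middle option counted as neither are all assumptions made for illustration.

```python
from statistics import mean
from typing import Dict, List, Tuple


def mask_commenters(comments: List[Tuple[str, str]]) -> List[Tuple[str, str]]:
    """Replace each assessor name with a stable masked ID ("Commenter 1", "Commenter 2", ...).

    The same assessor always gets the same ID, so repeated comments from one
    person can be spotted without revealing who wrote them.
    """
    ids: Dict[str, int] = {}
    masked = []
    for assessor, text in comments:
        # setdefault assigns the next number the first time an assessor appears.
        number = ids.setdefault(assessor, len(ids) + 1)
        masked.append((f"Commenter {number}", text))
    return masked


def item_summary(responses: List[str], scale: List[str]) -> dict:
    """Average plus a positive/negative split for one item (assumed scoring rules)."""
    values = [scale.index(r) + 1 for r in responses]  # assumption: 1..n by position
    n = len(scale)
    negative = sum(1 for v in values if v <= n // 2)
    positive = sum(1 for v in values if v > (n + 1) // 2)
    return {"average": mean(values) if values else None, "negative": negative, "positive": positive}


scale = ["Strongly Disagree", "Disagree", "Agree", "Strongly Agree"]
print(item_summary(["Agree", "Strongly Agree", "Disagree"], scale))
print(mask_commenters([("Dr. A", "Clear teaching"), ("Dr. B", "Late"), ("Dr. A", "Helpful")]))
```

Check each specific report's description to see whether these columns actually appear.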

For use when you have clinical learning courses with block schedules and want an overview of those learners who had an approved leave during a specific block.

Select a curriculum period.

Select a block.

Click 'Download PDF'.

For use when you have clinical learning courses with rotation schedules and want an overview of those learners who had an approved leave during a specific rotation.

Set a date range.

Select one or more learners.

Set the report parameters regarding displaying description and comments.

Click 'Generate Report'.

Note that once generated, this report is available to download by clicking 'Download PDF'.

Users with access to Admin > Assessment and Evaluation will be able to view Assessment & Evaluation tasks and reports. Generally this will include medtech:admins, staff:admins, and staff:pcoordinator and faculty:director assigned to a specific course or program.

To view A&E Reports:

Click Admin > Assessment & Evaluation.

From the tab menu, click 'Reports'.

Reports are categorized under evaluations, assessments, leave and distributions. Please read report descriptions carefully as not all reports necessarily include data from both distributed forms and user-initiated on-demand forms.

Due to privacy requirements, some organizations choose to restrict access to completed evaluations in some way. The existing options are to:

Set specific forms as Confidential. If you enable this at the form level, any time that form is used the name of the assessor/evaluator will be shown as Confidential. This can help protect learner privacy; however, it also restricts your ability to see who has completed their assigned tasks. If you monitor learner completion of evaluation tasks for the purposes of professionalism grading or similar, you may not want to set forms as Confidential.

Use a database setting (show_evaluator_data) to hide the names of any evaluator on a distributed or on-demand task. This means that when an administrator or faculty member with access to a distribution progress report or the Admin > A&E Dashboard views tasks, they will never see the names of evaluators but can still see the contents of completed evaluation tasks.

Use a database setting (evaluation_data_visible) to restrict users' access to completed, individual evaluation tasks. Users can view a list of completed tasks but if they click on a task they will be denied access to view the task contents. Instead of seeing the task, they will receive an error message stating that they do not have access.

Warnings:

Note that medtech:admin users will still have access to all evaluation data even with show_evaluator_data and evaluation_data_visible enabled. If this is not desired, set any Medtech Admins as “Assessment Report Admin”, but with all the flags turned off instead of on in the database.

show_evaluator_data and evaluation_data_visible do not cover Prompted Responses.

When the evaluation_data_visible setting is applied, admin and faculty users with access to Admin > Assessment & Evaluation will no longer be able to see the results of individually completed evaluation tasks or generate evaluation reports where evaluators could be identified.

On the Admin > A&E Dashboard users will still see the Evaluations tab on the Admin Dashboard, but they cannot click on individual tasks in any of the tables; the hyperlinks to those tasks are removed. (Maintaining visibility of the tasks themselves allows users to send reminders as needed.)

Under Assessment & Evaluation Reports users will be prevented from generating Individual Learning Event Evaluations since evaluators are identifiable in those reports.

For any distribution with a task type of Evaluation, users will be unable to open individual in-progress or completed tasks on the Pending/In Progress and Completed tabs of the Distribution Progress page. Users will also be unable to download PDFs of individual tasks on the Distribution Progress page.

Under the Assessment & Evaluation Tasks Icon, if the user can access Learner Assessments via the My Learners tab, they will be prevented from viewing or downloading individual evaluation tasks from the Learner's Current Tasks tab.

If the user can access Faculty Assessments via the Faculty tab, they will be prevented from viewing or downloading individual evaluation tasks from the Tasks Completed on Faculty tab.

When a distribution is set up as an Evaluation, the Reviewer option from Step 5 is no longer available to prevent accidentally allowing a user to see individual, identified, completed tasks.

If evaluation_data_visible is in use, an organization may wish to designate a small number of users to view completed evaluations. For this purpose individual proxy ids can be stored in the database (with show_evaluator_data enabled and from the ACL rule 'evaluationadmin').

Even when show_evaluator_data is enabled, the Progress pages for Evaluation distributions and the admin dashboard will not identify the evaluators. Tasks will show Confidential Evaluator and no email address.

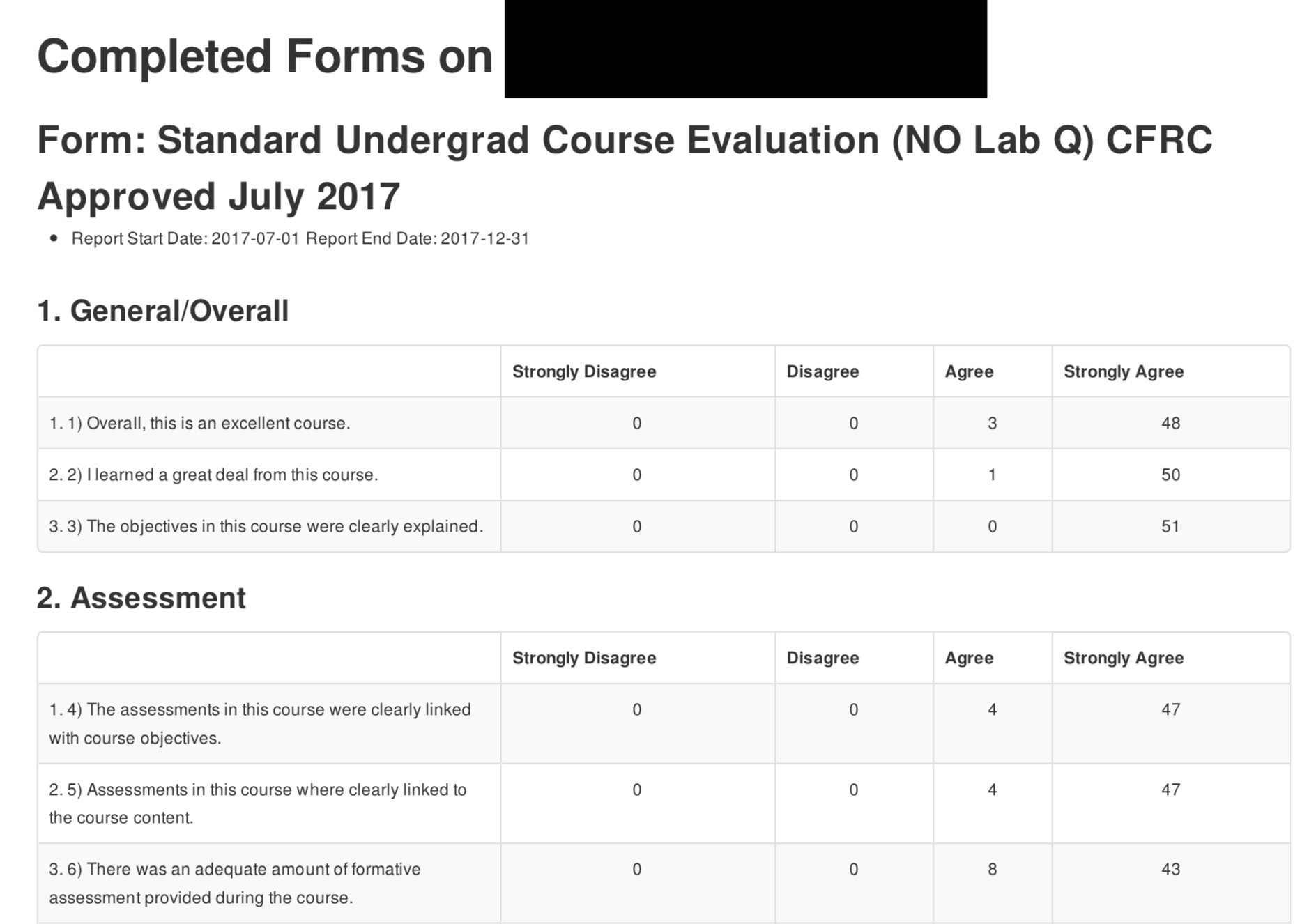

Most reports are currently available as PDFs.

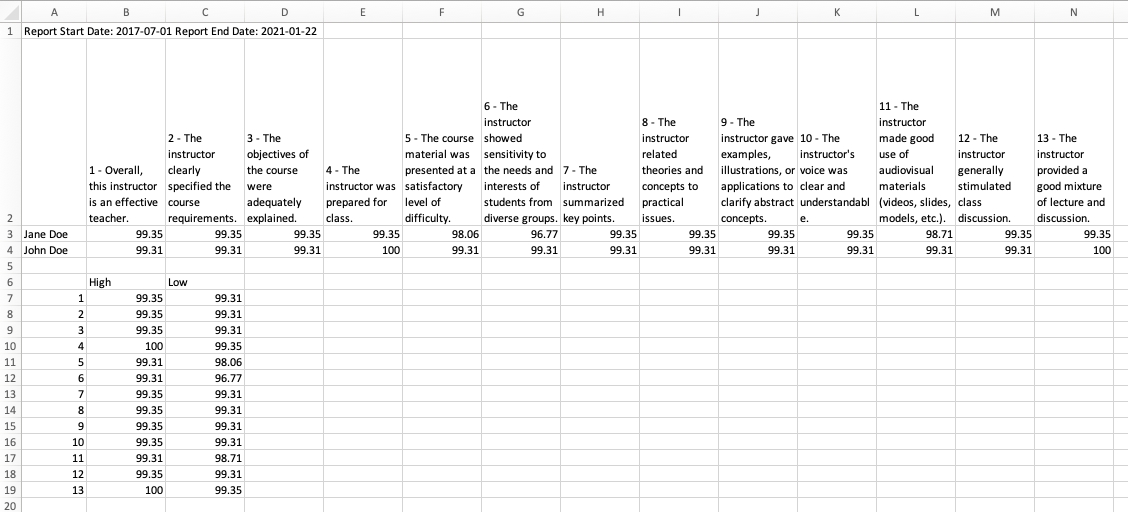

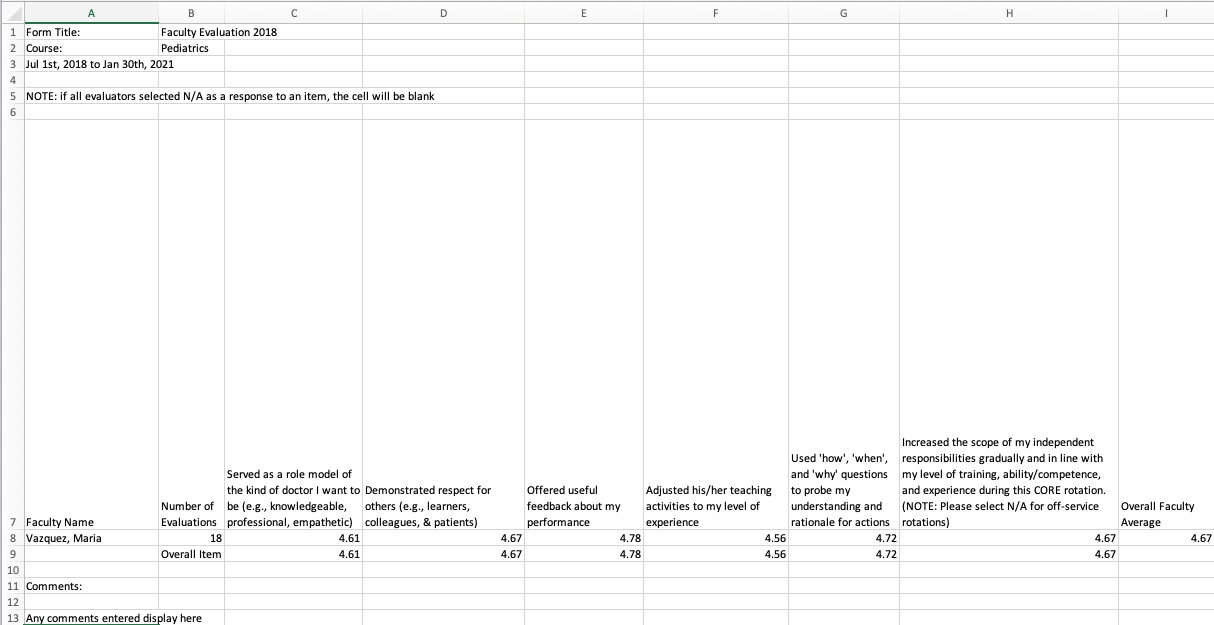

Some screenshots of sample reports are posted below, but remember that your reports will contain the items relevant to the forms you've designed and used. In some cases information has been redacted to protect users' privacy.

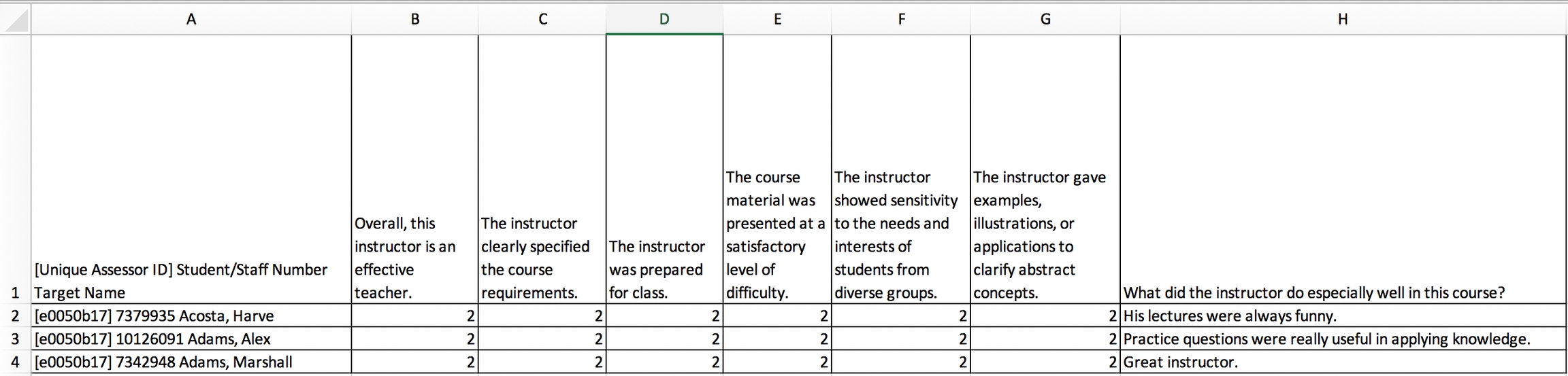

The Weighted CSV Report provides a CSV file that includes the data collected through completed forms. It lists the users who completed the form down the side and the form items across the top. Each cell contains data representing the scale rating selected by that user for that item. If your response descriptors include numbers (e.g. 1 - Overall, this instructor is an effective teacher.), note that those numbers will not necessarily be reflected in the CSV.

It is important to note that the Weighted CSV Report was specifically designed to be used with items that use a rating scale (e.g. grouped items using a rubric), and it allows you to create custom weights for scale response descriptors, which are then reflected in the report. There is currently no way to configure these weights through the user interface, so you will need a developer's help to assign weights to scale response descriptors in the database. (Developers, you'll need to use the cbl_assessment_rating_scale_responses table.)

If no weights are applied to the scale responses, the report defaults to assigning the values 0, 1, 2, 3, 4 to the responses in left-to-right order. In effect, the Weighted CSV Report works best if the rating scale you apply to the items mimics a 0-4 value (e.g. Not Applicable, Strongly Disagree, Disagree, Agree, Strongly Agree).

Please note that some rating scale values will be ignored in the Weighted CSV Report. Values that will be ignored are:

n/a

not applicable

not observed

did not attend

please select
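The default weighting and ignored values can be sketched as follows. This is an illustration under stated assumptions, not Elentra's code: the `weights` dictionary stands in for custom weights a developer would store in `cbl_assessment_rating_scale_responses`, matching ignored values case-insensitively is an assumption, and default values are taken from each option's position on the full scale (so "Not Applicable" keeps position 0 but is left blank).

```python
import csv
import io
from typing import Dict, List, Optional

# Values the documentation says the Weighted CSV Report ignores.
# Assumption: matching is case-insensitive and ignores surrounding spaces.
IGNORED = {"n/a", "not applicable", "not observed", "did not attend", "please select"}


def weighted_value(response: str, scale: List[str],
                   weights: Optional[Dict[str, float]] = None) -> Optional[float]:
    """Numeric value for one response, or None if the response is ignored."""
    if response.strip().lower() in IGNORED:
        return None
    if weights and response in weights:
        return weights[response]  # custom weight (stand-in for the database table)
    # Default: 0, 1, 2, ... by left-to-right position; raises ValueError if not on the scale.
    return scale.index(response)


def weighted_csv(submissions: Dict[str, Dict[str, str]], items: List[str], scale: List[str],
                 weights: Optional[Dict[str, float]] = None) -> str:
    """Users down the side, items across the top, as described for the report."""
    out = io.StringIO()
    writer = csv.writer(out)
    writer.writerow(["User", *items])
    for user, answers in submissions.items():
        row = [user]
        for item in items:
            response = answers.get(item)
            value = None if response is None else weighted_value(response, scale, weights)
            row.append("" if value is None else value)
        writer.writerow(row)
    return out.getvalue()


scale = ["Not Applicable", "Strongly Disagree", "Disagree", "Agree", "Strongly Agree"]
print(weighted_csv({"Jane": {"Q1": "Strongly Agree", "Q2": "Not Applicable"}}, ["Q1", "Q2"], scale))
```

This shows why a 0-4 style scale fits the default well: each descriptor's position already matches the value you would expect.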

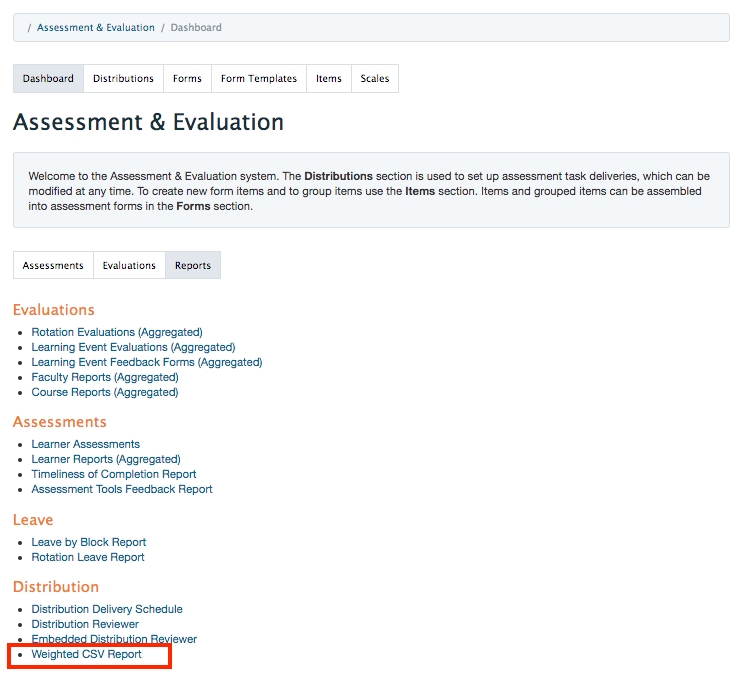

You can access the Weighted CSV Report from two places: the Assessment & Evaluation Reports tab or an individual distribution.

To access the Weighted CSV Report from Admin>Assessment & Evaluation you must have general access to the Admin>A&E tools. Such access will usually apply to staff:admin, staff:pcoordinator, and faculty:director users when the staff:pcoordinators and faculty:directors are affiliated with a course/program.

The weighted CSV report is accessible from the Admin>Assessment & Evaluation Reports tab.

Click Admin>Assessment & Evaluation.

From the second tab menu, click on 'Reports'.

Scroll to the Distribution section at the bottom of the list and click on 'Weighted CSV Report'.

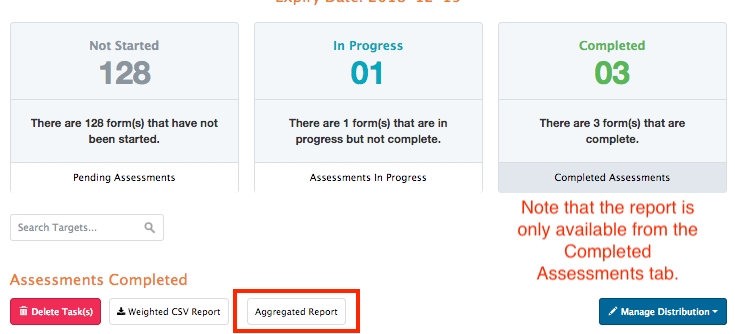

To access the Weighted CSV Report from an individual distribution you must have access to that distribution.

Click on Admin>Assessment & Evaluation.

From the first tab menu, click on 'Distributions'.

Search for or click on the title of the relevant distribution.

Click on the Completed Assessments card (far right).

Click on the Weighted CSV button under the Assessments Completed heading.

A file should download to your computer.